2024-9-8 21:58:58 Author: hackernoon.com

Authors:

(1) Zhan Ling, UC San Diego and equal contribution;

(2) Yunhao Fang, UC San Diego and equal contribution;

(3) Xuanlin Li, UC San Diego;

(4) Zhiao Huang, UC San Diego;

(5) Mingu Lee, Qualcomm AI Research;

(6) Roland Memisevic, Qualcomm AI Research;

(7) Hao Su, UC San Diego.

Table of Links

Motivation and Problem Formulation

Deductively Verifiable Chain-of-Thought Reasoning

Conclusion, Acknowledgements and References

A Deductive Verification with Vicuna Models

C More Details on Answer Extraction

E More Deductive Verification Examples

4 Deductively Verifiable Chain-of-Thought Reasoning

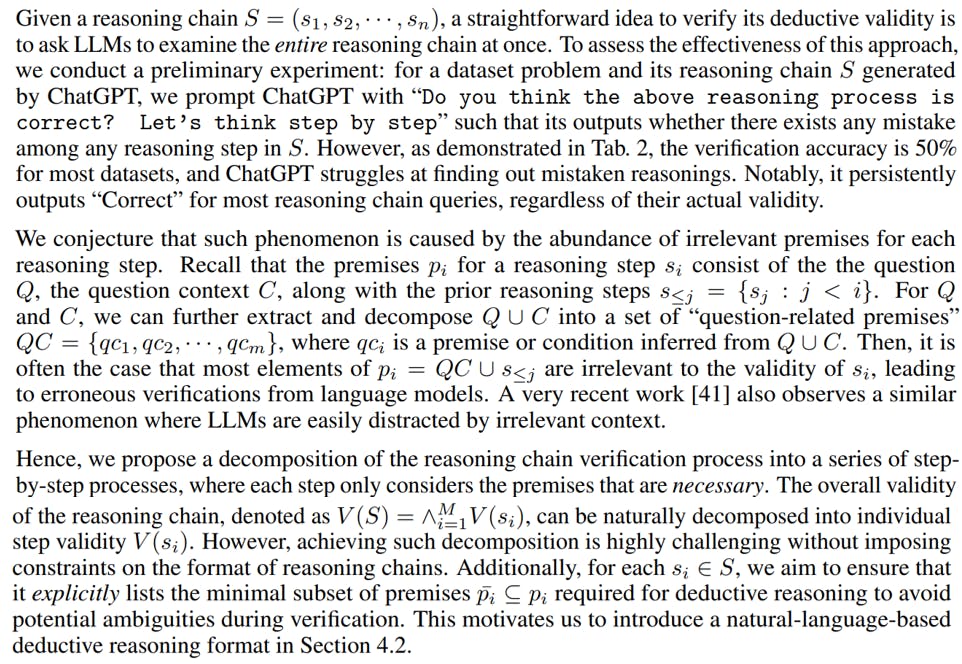

In this section, we introduce our specific approaches to performing deductive verification of reasoning chains. Specifically, we first introduce our motivation and method for decomposing a deductive verification process into a series of step-by-step processes, each only receiving contexts and premises that are necessary. Then, we propose Natural Program, a natural language-based deductive reasoning format, to facilitate local step-by-step verification. Finally, we show that by integrating deductive verification with unanimity-plurality voting, we can improve the trustworthiness of reasoning processes along with final answers. An overview of our approach is illustrated in Fig. 1 and Fig. 2.

4.1 Decomposition of Deductive Verification Process

4.2 Natural Program Deductive Reasoning Format

As mentioned in Sec. 4.1, we want LLMs to output deductive reasoning processes that they can easily verify themselves, specifically by listing the minimal set of necessary premises $p_i$ at each reasoning step $s_i$. To accomplish this goal, we propose to leverage the power of natural language, which can rigorously represent a large variety of reasoning processes and can be generated with minimal effort. In particular, we introduce Natural Program, a novel deductive reasoning format for LLMs. More formally, Natural Program consists of the following components:

• An instruction for models to extract question-related premises QC. We use the following instruction: “First, let’s write down all the statements and relationships in the question with labels”.

• A numbered list of question-related premises, each prefixed with “#{premise_number}”.

• An instruction for models to generate the reasoning chain S based on the question-related premises QC. We use the following instruction: “Next, let’s answer the question step by step with reference to the question and reasoning process”.
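The layout these components describe can be sketched in a few lines of Python. This is only an illustration of the text format, not the authors' prompt code: the function names, the `". "` separator after each `#{premise_number}` label, and the blank-line spacing are assumptions. In practice the model itself writes the premises after the first instruction; here they are passed in by hand to show the resulting shape.

```python
# Instruction strings quoted from the text above.
PREMISE_INSTRUCTION = (
    "First, let's write down all the statements and relationships "
    "in the question with labels"
)
REASONING_INSTRUCTION = (
    "Next, let's answer the question step by step with reference "
    "to the question and reasoning process"
)


def format_premises(premises):
    """Label each question-related premise as '#1', '#2', ...

    The '. ' separator after the label is an assumption for readability.
    """
    return [f"#{i}. {text}" for i, text in enumerate(premises, start=1)]


def build_natural_program_scaffold(question, premises):
    """Assemble question, premise instruction, labeled premises, and
    reasoning instruction into one text block (hypothetical helper)."""
    lines = [question, "", PREMISE_INSTRUCTION]
    lines += format_premises(premises)
    lines += ["", REASONING_INSTRUCTION]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_natural_program_scaffold(
        "How many apples do Alice and Bob have in total?",
        ["Alice has 3 apples.", "Bob has 2 apples."],
    ))
```

Keeping each premise on its own labeled line is what later lets a reasoning step cite exactly the premises it depends on.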

To encourage language models to reason in the Natural Program format, we have designed one-shot prompts for different datasets, which are shown in Appendix D.2. Given that the LLM’s reasoning outputs follow the Natural Program format, we can then verify the deductive validity of a single reasoning step $s_i$ through an instruction that consists of (1) the full descriptions of the premises used for the reasoning of $s_i$; (2) the full description of $s_i$; (3) an instruction for validity verification, such as “Double-check the reasoning process, let’s analyze its correctness, and end with "yes" or "no".” Note that throughout this verification process, we only retain the minimal necessary premises and context for $s_i$, thereby avoiding distraction from irrelevant context and significantly improving the effectiveness of validation. Additionally, we employ a one-shot prompt for this verification process, which we find very helpful for improving the verification accuracy. The prompt is shown in Appendix D.3.
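A minimal sketch of how such a single-step verification query could be assembled and its verdict read back is given below. The section headers ("Premises:", "Reasoning step:"), the function names, and the rule of taking the last "yes" or "no" in the response are assumptions made for illustration; the actual one-shot prompt is the one in Appendix D.3, and the call to the LLM is left out.

```python
import re

# Verification instruction quoted from the text above.
VERIFY_INSTRUCTION = (
    "Double-check the reasoning process, let's analyze its correctness, "
    'and end with "yes" or "no".'
)


def build_verification_prompt(used_premises, step):
    """Build a prompt containing only the premises this step relies on,
    the step itself, and the verification instruction."""
    lines = ["Premises:"]
    lines += [f"- {premise}" for premise in used_premises]
    lines += ["", "Reasoning step:", step, "", VERIFY_INSTRUCTION]
    return "\n".join(lines)


def parse_verdict(response):
    """Return True for "yes", False for "no", based on the last such
    word in the response; return None if neither word appears."""
    matches = re.findall(r"\b(yes|no)\b", response.lower())
    if not matches:
        return None
    return matches[-1] == "yes"


if __name__ == "__main__":
    prompt = build_verification_prompt(
        ["Alice has 3 apples.", "Bob has 2 apples."],
        "Together they have 3 + 2 = 5 apples.",
    )
    print(prompt)
    print(parse_verdict('The addition is correct. "yes"'))
```

Because only the cited premises are passed in, unrelated parts of the question never reach the verifier, which is the point of the minimal-context design described above.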

Figure 1 provides an overview of the complete Natural Program-based deductive reasoning and verification process. By using the Natural Program approach, we demonstrate that LLMs are capable of performing explicit, rigorous, and coherent deductive reasoning. Furthermore, Natural Program enables LLMs to self-verify their reasoning processes more effectively, enhancing the reliability and trustworthiness of the generated responses.

4.3 Integrating Deductive Verification with Unanimity-Plurality Voting

Given that we can effectively verify a deductive reasoning process, we can naturally integrate verification with the LLM’s sequence generation strategies to enhance the trustworthiness of both the intermediate reasoning steps and the final answers. In this work, we propose Unanimity-Plurality Voting, a two-phase sequence generation strategy described as follows. First, similar to prior work like [48], we sample $k$ reasoning chain candidates along with their final answers. In the unanimity phase, we perform deductive validation on each reasoning chain. Recall that a chain $S$ is valid (i.e., $V(S) = 1$) if and only if all of its intermediate reasoning steps are valid (i.e., $\forall i,\ V(s_i) = 1$). For each intermediate reasoning step $s_i$, we perform majority voting over $k'$ sampled single-step validity predictions to determine its final validity $V(s_i)$. We then retain only the verified chain candidates $\{S : V(S) = 1\}$. In the plurality voting phase, we conduct majority-based voting among the verified chain candidates to determine the final answer. This voting process ensures that the final answer is selected based on a consensus among the trustworthy reasoning chains.
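The two phases can be sketched in Python as follows. This is an illustrative sketch, not the authors' implementation: sampling and LLM calls are omitted, each candidate is represented as a pair of its final answer and, per step, a list of k' boolean validity predictions, and the function names are hypothetical. Two details are assumptions the text does not specify: a step counts as valid only on a strict majority of its votes, and the function returns `None` when no chain survives verification.

```python
from collections import Counter


def step_is_valid(votes):
    """Majority vote over the k' sampled validity predictions for one step.

    Assumption: a strict majority of True votes is required.
    """
    return sum(votes) > len(votes) / 2


def chain_is_valid(step_votes):
    """Unanimity phase: a chain is valid only if every step is valid."""
    return all(step_is_valid(votes) for votes in step_votes)


def unanimity_plurality_vote(candidates):
    """Pick the most common final answer among verified chains.

    candidates: list of (final_answer, step_votes) for the k sampled chains,
    where step_votes holds one list of boolean predictions per step.
    Returns None if no chain passes verification (an assumed fallback).
    """
    verified = [answer for answer, step_votes in candidates
                if chain_is_valid(step_votes)]
    if not verified:
        return None
    return Counter(verified).most_common(1)[0][0]


if __name__ == "__main__":
    candidates = [
        ("18", [[True, True, False], [True, True, True]]),    # verified
        ("20", [[True, False, False], [True, True, True]]),   # step 1 rejected
        ("20", [[True, True, True], [False, False, True]]),   # step 2 rejected
        ("18", [[True, True, True]]),                         # verified
        ("17", [[True, True, True]]),                         # verified
    ]
    print(unanimity_plurality_vote(candidates))
```

In this toy input, plain majority voting over all five answers would tie between "18" and "20"; filtering out the two chains with a rejected step leaves "18" as the plurality answer among verified chains.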
