2024-9-20 02:42:56 Author: securityboulevard.com

A New Threat Vector Emerges

Consider this perspective: You’re experienced at navigating the rapidly evolving threat landscape. Your company is one of the enterprises most targeted by bad actors worldwide, which means you’ve seen just about everything. Accustomed to the pace, variety and unpredictability of digital attacks, you’re confident in your robust security stack, strategy and world-class team.

But what happens when a novel threat vector emerges?

LLM platform abuse, a fraud trend fueled by the explosive popularity of generative AI-powered platforms and LLM assistants, poses a unique challenge for security professionals. As this new threat vector gains traction, it has widespread implications for security postures across the global business landscape, including:

- the harmful use of generative AI technologies in impersonation and spear phishing attacks

- a parallel ecosystem of generative AI-based services, powered by malicious LLM proxies, that bypasses geographic restrictions

- data theft from domain-specific language models via prompt scraping

Exploiting AI Infrastructure

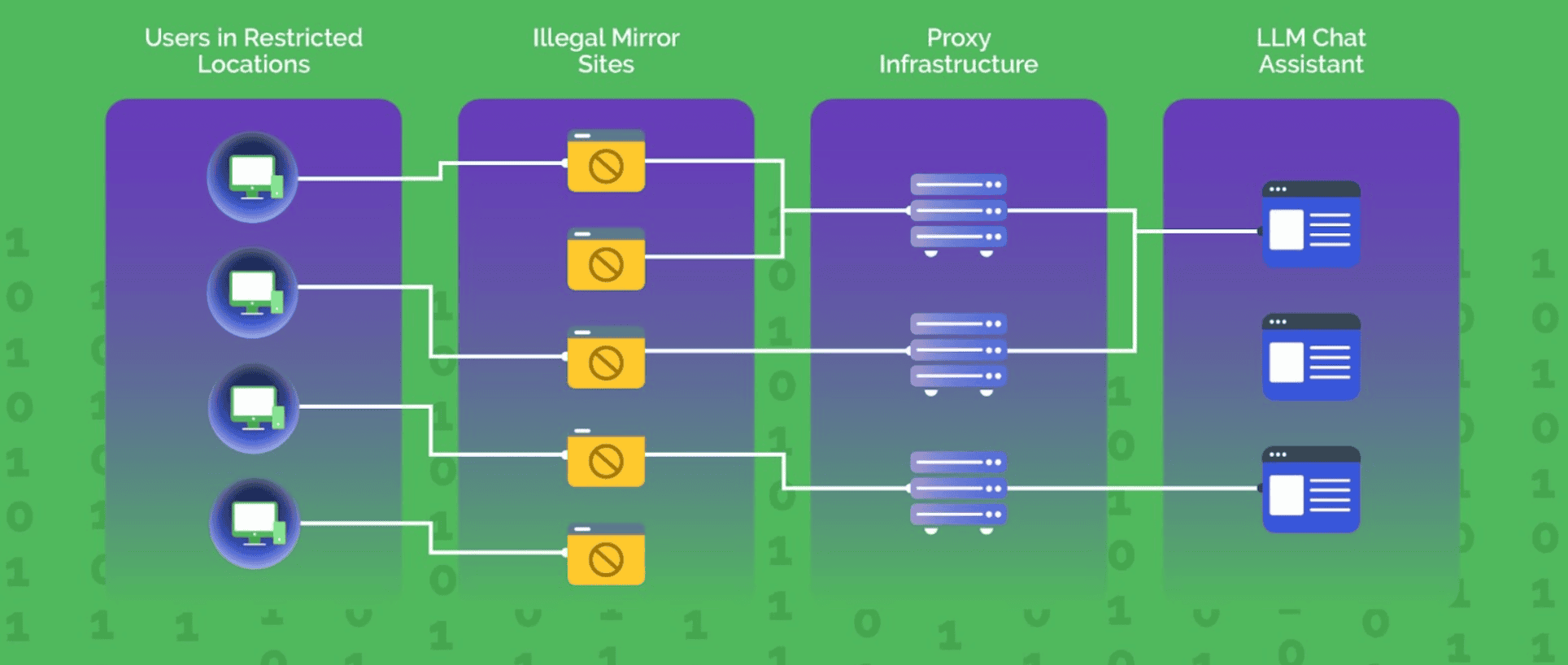

As the popularity of large language models (LLMs) grows, so does the creativity of cybercriminals. Malicious actors are increasingly deploying illegal reverse proxy services across various countries. These services are primarily used to bypass tracking and geo-restrictions imposed by nation-states, or constraints set by LLM providers. These proxies also facilitate activities such as generating phishing emails and creating deepfake content while leaving no trace, which complicates efforts to track their operations. When this threat first emerged, it targeted a single leading platform; now we’re seeing this vector across all of the leading LLM platforms.

It’s Getting Real

This is a bifurcated ecosystem made up of two types of fraudulent players. The first type seeks to bypass LLM restrictions, scraping prompts to build its own LLM-like platform, which it then offers as an illegal service. The second type buys those services and uses them to carry out other malicious activities and attacks.

Let’s start with an example of how the first type operates. Recently, a large-scale, complex custom proxy stack targeted multiple AI assistants. The stack was sophisticated, well organized and carefully orchestrated, and it was set up across multiple countries. The network of malicious actors behind this platform is decentralized, with different operators developing and hosting the various components required to execute the kill chain. Because anyone can host any component, this setup makes it difficult to detect and take down the attackers.

One example is a group that the Arkose Cyber Threat Intelligence Research (ACTIR) unit dubbed Astute Opal. The group used scraping to build a commercial operation that made money, and lots of it, off the backs of LLM assistants. Its stack was deployed in multiple data centers across the globe. Imagine facing a novel attack like this, partly hidden from view, while you’re also being hit by other volumetric threats.

Astute Opal’s efforts attracted the attention of the second type of attacker. These players tend either to build other downstream illegal services or simply to use the stack for attacks, such as creating phishing emails.

This was a lucrative “business” model for both types, until the AI assistants implemented Arkose GPT Protection.

Chart 1: Anatomy of LLM Platform Abuse

Sudden Traffic Spike and Response

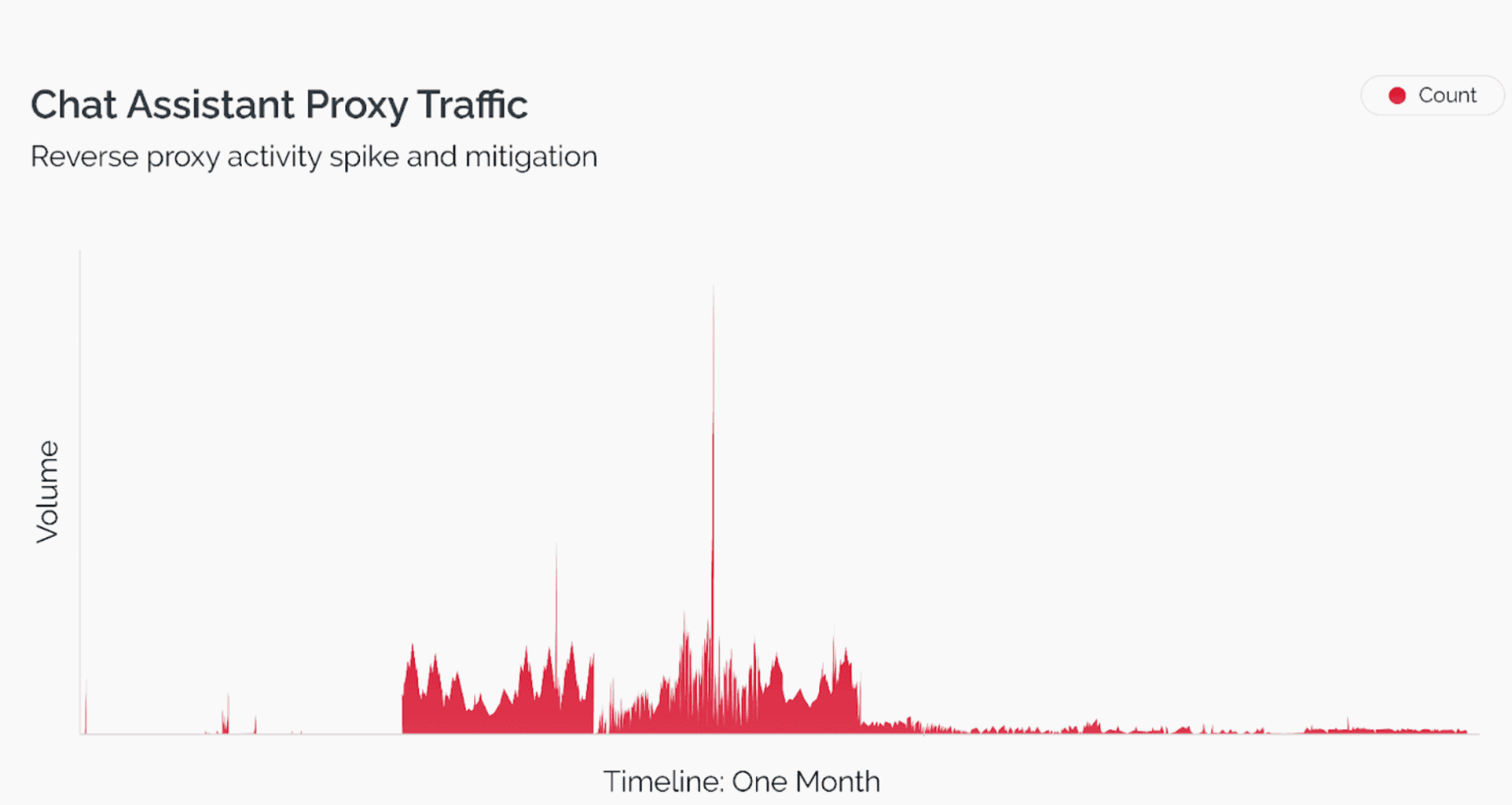

A recent incident involving one of our clients here at Arkose Labs illustrates the severity of this issue. Our threat research unit, ACTIR, observed a sudden spike in traffic originating from these nefarious proxies. The graph below shows a sharp increase in requests (indicated by the red line), followed by a drop after our mitigation strategies were implemented. This pattern underscores the effectiveness of proactive defense measures against sophisticated LLM platform abuse.
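A sudden surge like this can be flagged with even a very simple anomaly check. The following is a minimal sketch for illustration only, not Arkose Labs' detection method: it assumes you already have per-interval request counts from suspected proxy sources, and the function name `find_spikes`, the trailing-window size and the z-score threshold are hypothetical choices.

```python
from statistics import mean, stdev
from typing import List


def find_spikes(counts: List[int], window: int = 5, threshold: float = 3.0) -> List[int]:
    """Return the indices of intervals whose request count exceeds the mean of
    the preceding `window` intervals by more than `threshold` standard deviations.

    Hypothetical helper: a plain trailing-window z-score check.
    """
    if window < 2:
        # stdev() needs at least two data points.
        raise ValueError("window must be at least 2")
    spikes = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu = mean(history)
        # Floor sigma at 1.0 so a perfectly flat baseline does not divide by zero.
        sigma = max(stdev(history), 1.0)
        if (counts[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


# Hypothetical per-minute request counts with a burst at index 6.
counts = [100, 104, 98, 101, 99, 102, 850, 900, 120, 105]
print(find_spikes(counts))  # → [6]
```

Because the surge itself enters the trailing baseline on the next interval, this check flags the onset of a spike rather than every elevated interval. A real defense would likely use a more robust baseline (for example, a median-based one) and combine it with many other signals.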

Specialized Proxy Defense

Addressing LLM platform abuse requires more than generic solutions. The first-of-its-kind Arkose GPT Protection capability is built into our patented Arkose Bot Manager platform. Arkose GPT Protection is designed, and already battle-proven, to detect and stop malicious traffic from these reverse proxies. This targeted approach not only identifies the abusive infrastructure but also prevents it from operating, effectively neutralizing the threat. Because we work with two of the leading AI assistant platforms, we’re in a unique position to provide the security community with immediate insights and intel on these novel attacks.

Final Note

Imagine what it would be like if you could stay so far ahead of emerging threat vectors that the threat was stopped before it ever made impact. That can be done by adopting advanced, specific solutions designed to tackle emerging problems like LLM platform abuse. By understanding and addressing the nuances of these attacks, we can protect against the misuse of technology and maintain trust in the digital advancements that drive society forward.

Want to know more about protecting your business from LLM platform abuse? Visit our Arkose Labs LLM platform abuse page to learn how to stay ahead of emerging AI-driven threats.

*** This is a Security Bloggers Network syndicated blog from Arkose Labs authored by Vikas Shetty. Read the original post at: https://www.arkoselabs.com/blog/rise-llm-platform-abuse
