2024-9-24 06:0:0 Author: blog.quarkslab.com(查看原文) 阅读量:4 收藏

In this blog post we present crypto-condor, an open-source test suite for compliance testing of implementations of cryptographic primitives.

Introduction

If that sentence seems like a random amalgamation of words, let me try to explain.

One of our domains of expertise here at Quarkslab is cryptography. We regularly audit products that involve cryptography in different capacities, ranging from "we use SHA-256 at least once" to "we are designing a novel cryptographic protocol and we want someone to audit it". This means that audits can be wildly different, but when examining a product that includes implementations of established algorithms, there are common compliance tests that we perform routinely; the type of tests we'd like to automate as much as possible.

Hence, one of the internship offers of the 2022-2023 season aimed to develop a

"test suite for cryptographic primitives"1. The goal was to

automate that compliance part of our audit workflow, such as using test vectors

to ensure the correctness of cryptographic components or verifying the

randomness of random number generators, along with writing an internal guide on

post-quantum standardization. During this 6-month internship I developed a tool

called crypto-condor. If the name sounds familiar, you may have seen my

presentations at SSTIC or Pass the SALT. :)

Let's dive into what it does!

Testing the correctness

First, we need to define some terms. Namely, what are cryptographic primitives and what it means to test the compliance of implementations of these primitives.

Cryptographic primitives are the low-level algorithms that are used to build all sorts of protocols: encryption, key establishment, message authentication, multi-party computation, and so on. For example, AES and ChaCha20 are two symmetric encryption primitives, which are used in TLS 1.2+ to secure connections to websites among other uses. Depending on the product, these primitives can be provided by a well-known library such as OpenSSL, or by a homemade implementation. Using a library usually requires us to check if the usage is correct, but without auditing said library in the process. On the other hand, a homemade implementation requires more attention: the general rule of thumb in cryptography is "don't roll your own crypto", which means to not implement known primitives yourself or invent new ones unless you really need to. This is due to the fact that getting cryptographic implementations right can be very tricky, and the consequences of mistakes can be dire depending on its application.

But then, what happens when we do have to audit a homemade implementation? The first step is to verify the correctness of the implementation. We want to ensure that the implementation complies to the specification: a document detailing the algorithm, with its possible input and output values, the operations performed, and maybe even some implementation recommendations. For example, FIPS 180-4 describes the SHA-2 family of hash functions, down to the constants used in their operations.

One pretty cheap but reasonable way of testing this compliance is with test vectors. Most of these algorithms are deterministic, which means that when we give one the same set of inputs twice, we will obtain the same exact result both times (we will talk about what happens for non-deterministic primitives). So usually the specification already includes a handful inputs, their intermediate values throughout the algorithm, and the resulting outputs. These are the test vectors, intended to help developers test their implementation.

Let's say we get an implementation of SHA-256 where the constants have been swapped by those used in SHA-512. When we try running that implementation using the SHA-256 test vectors, we'll quickly notice that the results don't match for any of the available vectors, indicating that the implementation is not compliant. And so what if it isn't? Turns out, we have another post discussing just that.

What does crypto-condor do?

With these concepts in mind, let's check the goals of the internship:

- We wanted to automate the compliance part of our cryptography audit process.

- This includes using test vectors to ensure the correctness of implementations.

- We also wanted to be able to test the output of uniform random number generators (RNGs).

- Parallel to this, another objective was to write a guide of the post-quantum cryptography standardization.

All these requirements can fit inside a single tool. We can wrap the test

vectors in a nice Python interface, add a library for testing the output of

RNGs, and include the guide in the project's documentation. That is

crypto-condor in a nutshell.

crypto-condor's logo

The guides

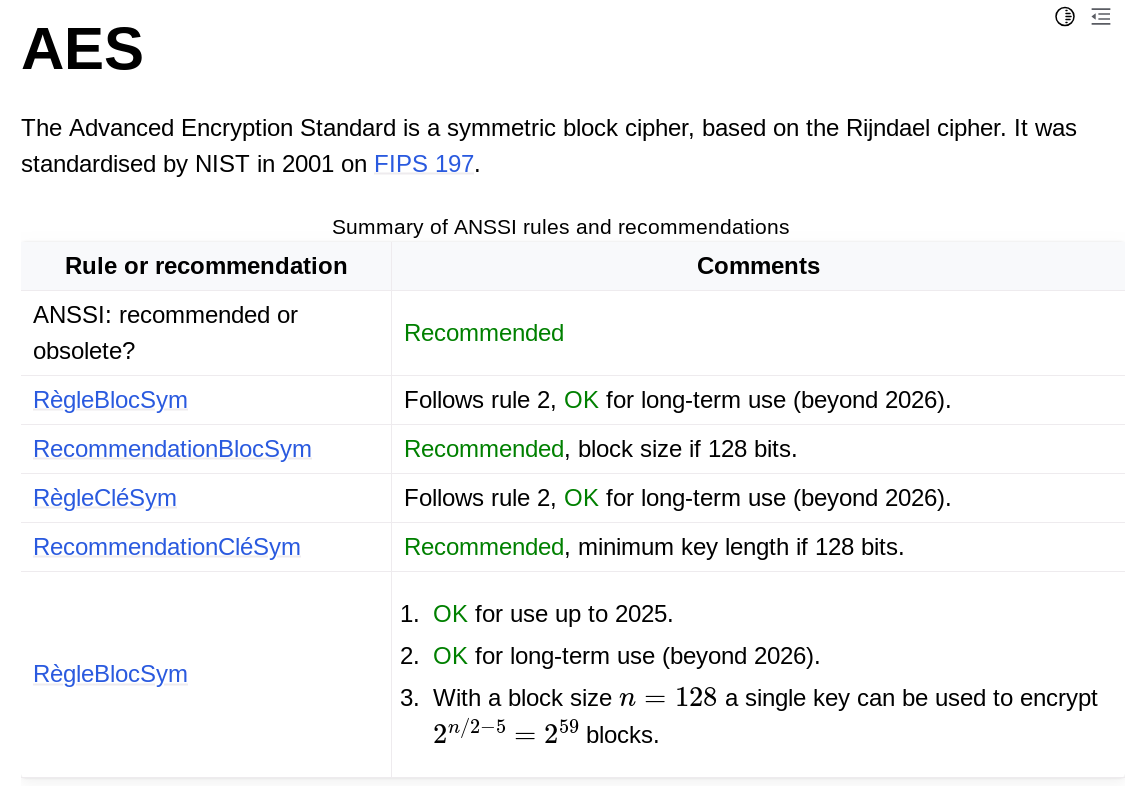

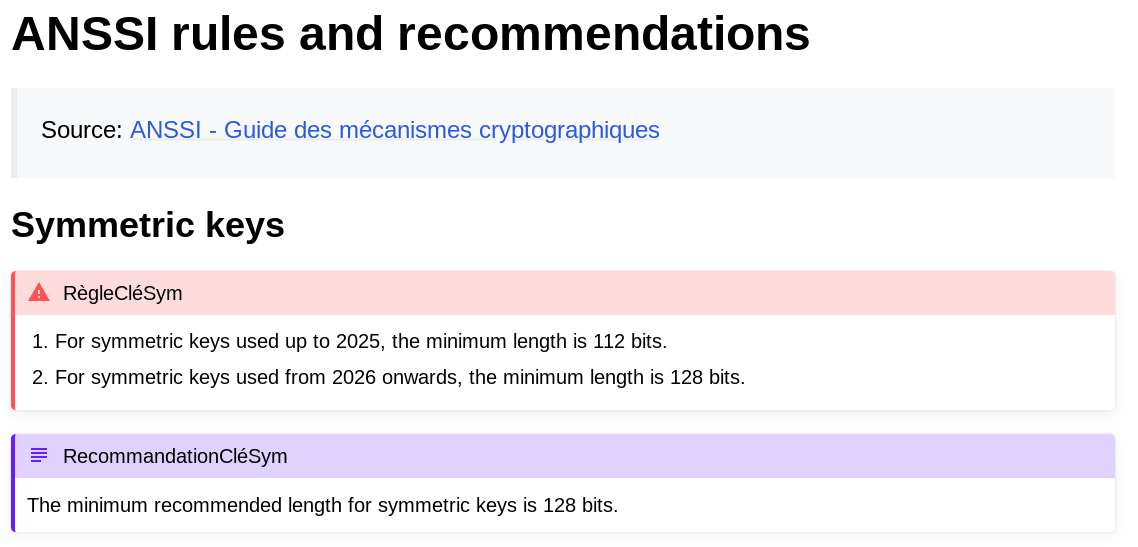

During audits, we use ANSSI's guidelines on cryptographic primitives2. They cover a variety of categories, sometimes in a very generic way, so we wanted to compile all the relevant rules and recommendations from these documents for each primitive supported. This turned into our method guides.

These guides provide an overview of the primitive with some key information,

such as its parameters, modes of operation, compatible elliptic curves, and so

on, along with a brief description of the algorithm. This overview is then

followed by a list of the rules and recommendations that concern the primitive.

They have been translated to English as that's the language used in

crypto-condor.

The AES method guide

The rules and recommendations for AES

Regarding the post-quantum primitives selected after the third round of NIST's Post-Quantum Cryptography Standardization, the goal was to go into greater detail to get us up-to-date with these new algorithms. As such, those guides also include benchmarks, links to implementations and published attacks, and a bibliography, besides the overview of the primitive.

The guides are available on the

documentation.

For offline users, the Markdown sources are available through the CLI's method

command:

$ crypto-condor-cli method AES

Saved AES guide to AES.md

$ cat AES.md

# AES

{{ prolog }}

The Advanced Encryption Standard is a symmetric block cipher, based on the

Rijndael cipher. It was standardised by NIST in 2001 on [FIPS 197][FIPS197].

[-- snip --]

Test vectors

At the core of this tool are the test vectors. The main source we use is NIST's Cryptographic Algorithm Validation Program, which provides vectors for the algorithms recommended by the NIST. They come in a plaintext format of key/value pairs, which means they have to be parsed. We have written simple parsers for the different primitives, and the results are stored using protobufs, allowing us to serialize them and later load them back as Python classes. This is done directly in the CI when packaging the tool.

Another important source of test vectors is Project

Wycheproof, a project to test "crypto

libraries against known attacks". These attacks are provided in the form of test

vectors, which were specially crafted to detect some vulnerabilities. For

example, an ECDSA implementation should not accept the signature (0,0) for any

message, lest they fall for psychic

signatures.

This can be tested by creating a valid ECDSA key and a random message, and

testing whether the implementation accepts the signature (0,0) as valid for

the given message and public key. If it does, it is vulnerable.

Interestingly, as a side effect, we can test the resilience of an implementation by creating a corpus of test vectors based on real implementation errors and vulnerabilities observed.

Architecture

crypto-condor's architecture

The protobuf classes are exposed to the rest of crypto-condor through data

classes, which define a load method allowing users to pick different sets of

vectors depending on parameters related to the primitive. For example, AES

vectors are separated by the different modes of operation (e.g. CBC, GCM), and

also by the key length used in the vectors.

These parameters are often exposed to the end users, so they are represented by enums, which allow us to define the set of possible values, and can be used for auto-completion by IDEs and the CLI.

crypto-condor has a modular architecture, meaning that each primitive has its

own independent module under crypto_condor.primitives, as we wanted to make it

easy to add new primitives over time while modifying the least amount of

existing code. These modules include both the test vector classes, as well as

the test functions that are available for end users. They expect Python

functions or classes with a given prototype, in order for crypto-condor to run

the test vectors on them. We use protocols to define the expected prototype,

which are displayed on the documentation, and also allow us and users to check

whether the prototype is respected by the function with a type-checker.

Testing functions

The first way to test implementations is directly through the Python API, by using the test functions exposed by the modules. These functions test operations of a given primitive, such as encrypting or decrypting with AES, or creating MACs with HMAC.

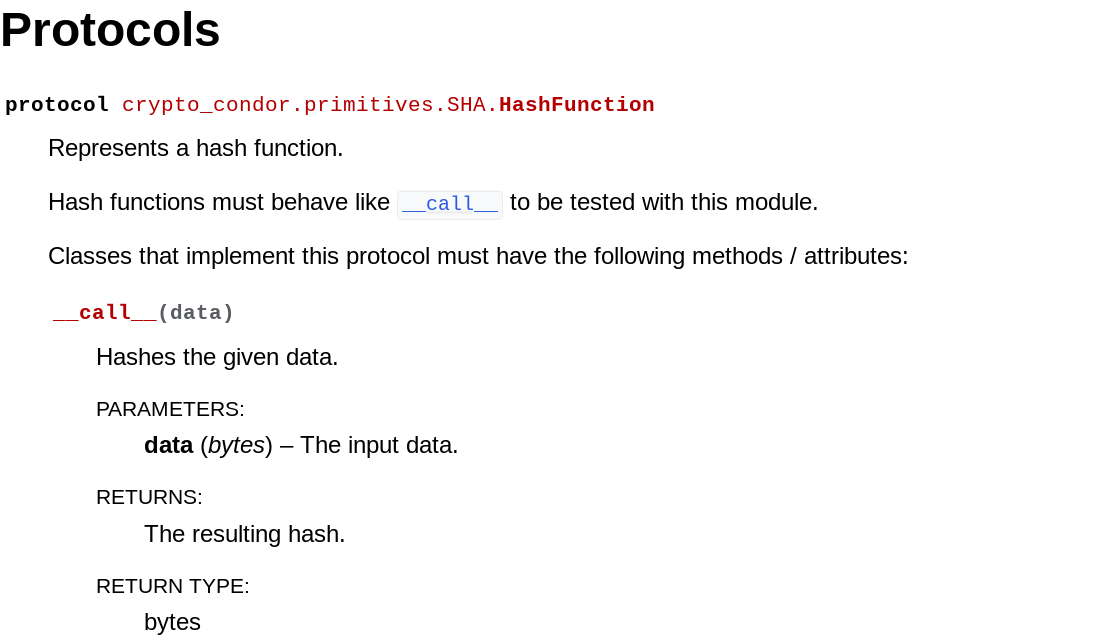

For example, let's test hashlib's implementation of SHA-256. First, we head to

the documentation to check the expected function signature. In this case, we

must conform to the HashFunction protocol:

The HashFunction protocol

Next, since hashlib does not behave like the corresponding protocol, we want

to wrap it in a function of our own, and finally pass it to the SHA module:

# Import the implementation to test.

from hashlib import sha256

# Import the primitive module from crypto-condor.

from crypto_condor.primitives import SHA

# Define our function that behaves like the protocol.

def my_sha256(data: bytes) -> bytes:

return sha256(data).digest()

# Pick an algorithm to test.

algo = SHA.Algorithm.SHA_256 # <-- enum

# Run the test and display the results.

results = SHA.test(my_sha256, algo)

print(results)

This results in the following output, which indicates that the implementation is compliant:

$ python test_sha_256.py

Valid tests:

Passed: 130

Failed: 0

Testing wrappers

crypto-condor also comes with a CLI, named crypto-condor-cli, which is

installed along the package. In order to test implementations through the CLI,

we do have to rely a bit on some scripting. Part of it is simplified through

what we call wrappers: small template scripts in Python (or sometimes in C)

that already provide the expected prototype of the primitive, and are primarily

used through the CLI.

To get a wrapper, we use the get-wrapper command. Sticking with the SHA-256

example, let's get one for the SHA primitive.

$ crypto-condor-cli get-wrapper SHA --language Python

Copied sha_wrapper.py

[-- snip --]

We obtain the following template:

"""Wrapper template for SHA implementations."""

import hashlib

def sha(data: bytes) -> bytes:

"""Wrapper function for a SHA implementation.

Args:

data: The input data.

Returns:

The digest of the data.

"""

pass

Similarly to the previous section, we fill this function template with a call to the implementation we want to test.

import hashlib

def sha(data: bytes) -> bytes:

# [-- snip --]

return hashlib.sha256(data).digest()

Then we can run the tests with the CLI. This time, we pass the parameters we want to test through options3:

$ crypto-condor-cli test wrapper SHA sha_wrapper.py SHA-256

╭───────────────────────────── Results summary ─────────────────────────────╮

│ Primitives tested: SHA │

│ Valid tests: │

│ Passed: 130 │

│ Failed: 0 │

╰────────────────── crypto-condor 2024.08.23 by Quarkslab ──────────────────╯

Testing harnesses

Along with this blog post, we are releasing a new feature for testing shared libraries. The idea comes from fuzzing harnesses, where the library or executable is modified to expose a function that a fuzzer can use. In our case, the library is expected to expose functions that wrap the primitives to test.

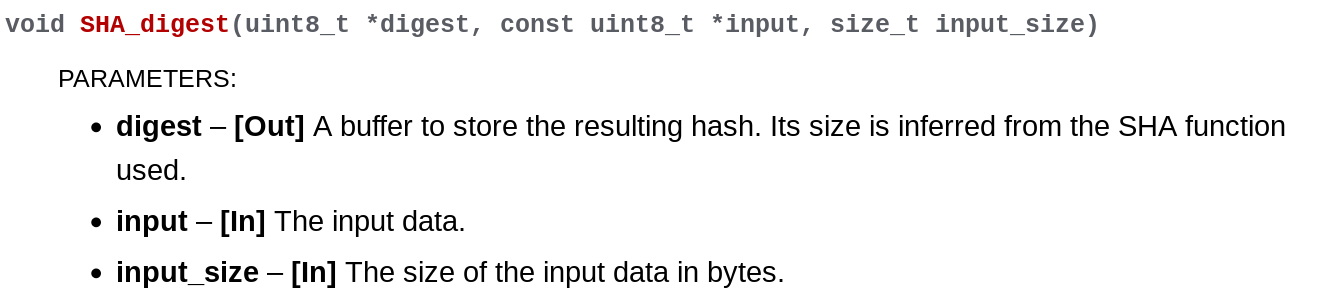

To illustrate this, let's assume we have the source code of SHA-256

implementation in C. Following the documentation, we have to create a function

called CC_SHA_256_digest with this function signature:

The signature for a SHA harness

So we get:

// The implementation to test.

void sha256_digest(const char *input, size_t size, char *output) {

// ...

}

void CC_SHA_256_digest(uint8_t *digest, const uint8_t *input, size_t input_size)

{

sha256_digest(input, input_size, digest);

}

We compile it as a shared library using the -shared and -fPIC options.

crypto-condor will use this library by dlopen-ing it with CFFI, the C

Foreign Function Interface. It searches for functions starting with the prefix

CC using lief, and looks whether the next part of the

function name matches a supported primitive. If it does, the module is imported

and its function to test harnesses is called to run the harness function with

the corresponding test vectors.

Testing output

Finally, there is one more way of testing an implementation. Sometimes, we don't have access to the source code, or modifying it to test with either a wrapper or harness can prove to be challenging. In this case, as long as we can capture inputs passed to the implementation and the outputs it returns, we can compare them with another implementation.

For this, the user writes the values in hexadecimal in a plaintext file. The

file is passed to crypto-condor through the CLI, the inputs run through an

"internal" implementation, often from well-known cryptography libraries

PyCryptodome and pyca/cryptography, and the outputs are compared to those

recorded.

Let's use hashlib once more. We start by hashing a message and recording both

the message and the hash in hexadecimal, separated by forward slashes. Note that

the order is important.

import hashlib

message = bytes.fromhex("424242")

digest = hashlib.sha256(message).digest()

with open("sha256_output.txt", "w") as file:

file.write(f"{message.hex()}/{digest.hex()}\n")

Executing this snippet results in a .txt file containing the string:

424242/dcdb704109a454784b81229d2b05f368692e758bfa33cb61d04c1b93791b0273

We then pass it to crypto-condor with the test output command:

$ crypto-condor-cli test output SHA sha256_output.txt SHA-256

[ -- snip -- ]

╭───────────────────────────── Results summary ─────────────────────────────╮

│ Primitives tested: SHA │

│ Module: SHA │

│ Function: verify_file_sha │

│ Description: Verifies the output of an implementation │

│ Arguments: │

│ filename = sha256_output.txt │

│ hash_algorithm = SHA-256 │

│ Valid tests: │

│ Passed: 1 │

│ UserInput: 1 │

│ Failed: 0 │

│ Flag notes: │

│ UserInput: User-provided·vectors. │

╰────────────────── crypto-condor 2024.08.23 by Quarkslab ──────────────────╯

Non-deterministic primitives

While ECDSA has a deterministic version (see RFC 6979), the original version generates a random nonce for each signature, meaning that signing the same message with the same key twice should result in two different signatures4.

In this case, the test vectors only contain the inputs, as the outputs will

vary. To test an implementation, we compare its behaviour with that of

the aforementioned libraries. For example, we use the test vectors to generate

ECDSA signatures, and use cryptography's implementation to check whether the

signatures are valid for the given key pair and message.

Testing the output of RNGs

We use TestU01, a C library "for the empirical statistical testing of uniform random number generators". While it is known for its Crush battery of tests, it implements many statistical tests, such as those listed by SP 800-22, which is the battery of tests we are interested in.

The battery is not directly implemented in TestU01, but colleagues of mine had

written the necessary integration, and simplified the compilation and usage by

providing a couple of bash scripts. This is the version that is currently

bundled with and exposed by crypto-condor: it is packaged with the tool,

compiled on the user's machine on first use, and made easily accessible from the

command-line interface.

$ head -c 1M < /dev/urandom > random.bin

$ crypto-condor-cli testu01 random.bin

[-- snip --]

╭───────────────────────────── Results summary ─────────────────────────────╮

│ Primitives tested: TestU01 │

│ Module: TestU01 │

│ Function: test_file │

│ Description: Tests the output of a PRNG with TestU01. │

│ Arguments: │

│ filename = random.bin │

│ bit_count = 8388608 │

│ Valid tests: │

│ Passed: 28 │

│ TestU01: 28 │

│ Failed: 0 │

│ Flag notes: │

│ TestU01: TestU01 test │

╰────────────────── crypto-condor 2024.08.23 by Quarkslab ──────────────────╯

Since TestU01 provides empirical statistical testing of the uniformity of RNG

output, it can be used for other cases: for example, we can test ECDSA keys for

a possible bias, as does the test_key_pair_gen function of the ECDSA

module.

Playing with crypto-condor

What better way to get your hands on a new tool than an exercise?

During development and for demonstrations of the tool, we have used CRY.ME, "a flawed messaging application for educational purposes". Developed by ANSSI and CryptoExperts, it's a messaging application based on Matrix, in which cryptographic vulnerabilities have been purposefully introduced as a security challenge. Particularly, its implementations of AES and SHA3-256 are not compliant.

The challenge then is to show that they are indeed not compliant. For that, we will use the C wrappers for AES and SHA.

Start by installing crypto-condor. It is available on PyPI:

python -m pip install crypto-condor

Then clone the CRY.ME repo. The relevant implementations can be found in

cryme_app/element/olm-sdk/sources/lib/crypto-algorithms, but the .c files

are annotated with the vulnerabilities. The easiest way to avoid spoilers is to

cd into cryme_app and execute make app_bundle_src, then look for

olm-sdk/sources/lib/crypto-algorithms. Otherwise, look for the same path

inside cryme_app/element.

The idea is to read the header files to learn how to use the implementations and call them inside the wrappers. The command to compile will look like this:

gcc -o sha_wrapper sha_wrapper.c <other files>

To run the wrapper, check the test wrapper command:

crypto-condor-cli test wrapper SHA --help

Note that the CBC mode in the AES implementation corresponds to CBC-PKCS7 in

crypto-condor, as it uses padding.

A possible solution can be found in our repo. We also demonstrated this use-case in our presentations at SSTIC (in French) and Pass the SALT (in English).

Conclusion

In this blog post, we have presented crypto-condor, a compliance test suite

for cryptographic primitives. The code is open-source under the Apache 2.0

license, and is hosted on GitHub,

along with its

documentation.

We currently use it for our audits: there is a blog post coming soon™, accompanying the public report of the corresponding mission.

crypto-condor was also presented at SSTIC and Pass the SALT. Recordings and

slides are available on the respective sites:

SSTIC

(in French) and Pass the

SALT

(in English).

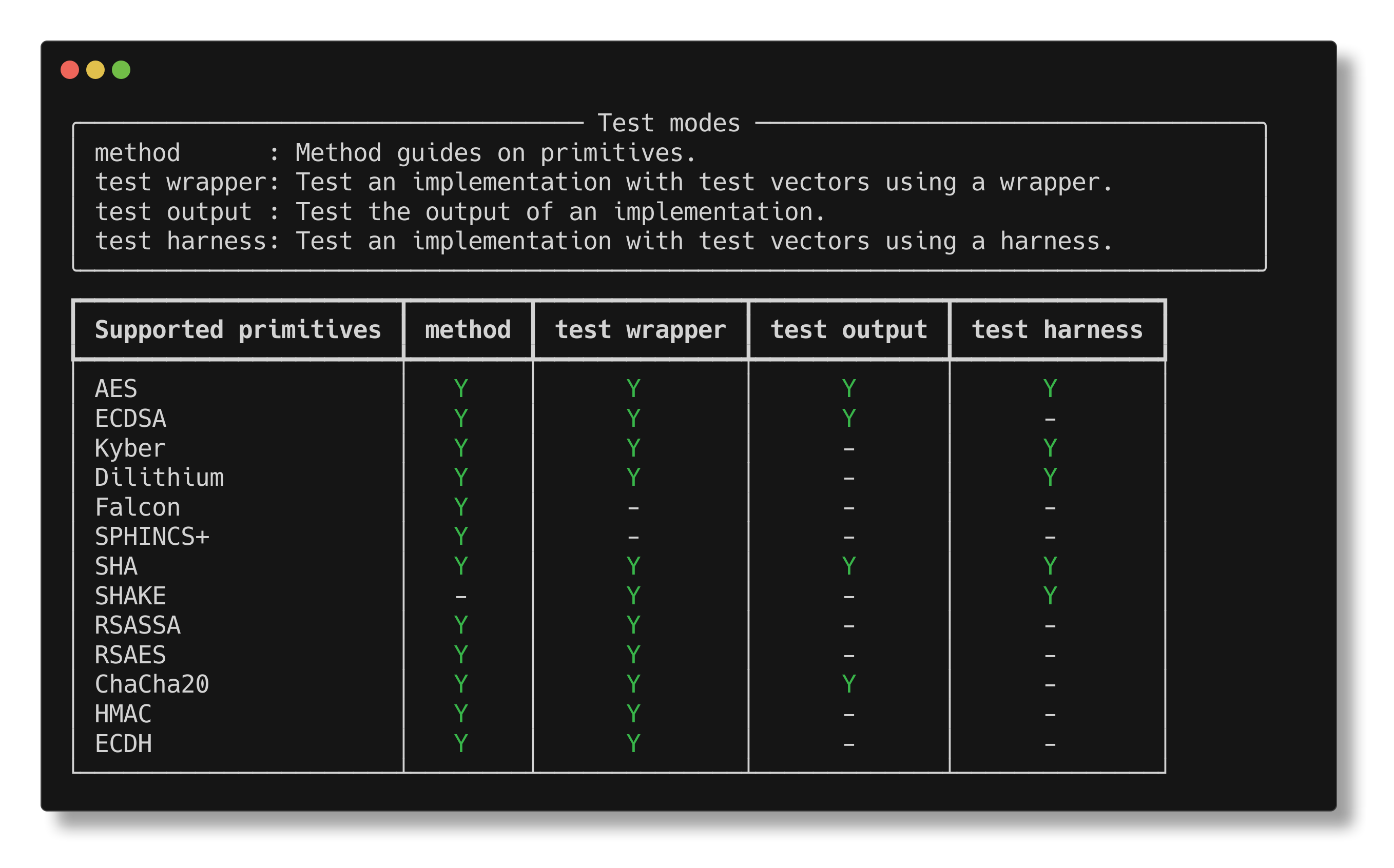

Supported primitives and their testing modes

A roadmap is soon to be shared on the new features or next primitives. In the meantime, feedback and contributions are very much welcome!

Acknowledgements

I want to thank 🍪Dahmun🍪 and 🌸Angèle🌸, my internship mentors, for their guidance and support, and Quarkslab for the great experience I had.

And thanks to Dahmun, Angèle, Sami, and Philippe for proofreading!

If you would like to learn more about our security audits and explore how we can help you, get in touch with us!

如有侵权请联系:admin#unsafe.sh