Authors:

(1) Chengrun Yang, Google DeepMind and Equal contribution;

(2) Xuezhi Wang, Google DeepMind;

(3) Yifeng Lu, Google DeepMind;

(4) Hanxiao Liu, Google DeepMind;

(5) Quoc V. Le, Google DeepMind;

(6) Denny Zhou, Google DeepMind;

(7) Xinyun Chen, Google DeepMind and Equal contribution.

Table of Links

2 OPRO: LLM as the Optimizer and 2.1 Desirables of Optimization by LLMs

3 Motivating Example: Mathematical Optimization and 3.1 Linear Regression

3.2 Traveling Salesman Problem (TSP)

4 Application: Prompt Optimization and 4.1 Problem Setup

5 Prompt Optimization Experiments and 5.1 Evaluation Setup

5.4 Overfitting Analysis in Prompt Optimization and 5.5 Comparison with EvoPrompt

7 Conclusion, Acknowledgments and References

B Prompting Formats for Scorer LLM

C Meta-Prompts and C.1 Meta-Prompt for Math Optimization

C.2 Meta-Prompt for Prompt Optimization

D Prompt Optimization Curves on the Remaining BBH Tasks

E Prompt Optimization on BBH Tasks – Tabulated Accuracies and Found Instructions

A SOME FAILURE CASES

Although LLMs can optimize basic math problems (Section 3) and prompts (Section 4), we see some limitations across all optimizer LLMs that may impede their ability to solve more challenging problems. These limitations include:

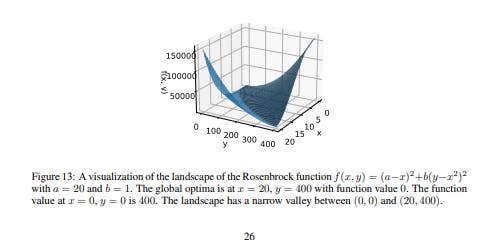

• Hallucinating values that need to come from math calculation: The optimizer LLMs often output content like “the function value at (5, 3) is 15” even though the true value is not 15. The model gets the value right if external tools that can reliably calculate it are triggered. When and how to trigger such tool use remains an interesting topic (see, e.g., Schick et al., 2023; Cai et al., 2023).

• Generating solutions that already appeared in context even if we tell it to "Give me a new (w, b) pair that is different from all pairs above": The optimizer LLMs do not follow this instruction fully reliably, even though their own outputs often include sentences like “I will provide a new pair that is different”, which makes the output self-contradictory. However, the output is almost guaranteed to differ from the old in-context solutions when the model output contains a comparison of the new pair against all old pairs. Thus (implicitly) triggering such behavior may be a solution. How to implement this feature without harming the instruction-following performance of other parts remains an interesting topic to study.

• In black-box math optimization, getting stuck at a point that is neither a global nor a local optimum: This often occurs in two linear regression cases: (a) The in-context exemplars all share the same w or b, and that shared value differs from w_true or b_true. This case is more likely to be avoided when a larger number of past solutions are included in the meta-prompt; (b) one or several of the best previous solutions in the meta-prompt have ws and bs that are off in opposite directions from the global optimum (w_true, b_true): for example, the ws are all smaller than w_true while the bs are all larger than b_true. Since the optimizer model often proposes to only increase w or only decrease b when the past solutions in the meta-prompt share w or b, the optimization will get stuck if either increasing w or decreasing b would increase the objective value. This issue is mitigated by sampling multiple new solutions (thus more exploration) at each step.
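Two of the mitigations above can be sketched outside the LLM: computing objective values with ordinary code instead of trusting values stated in the model output, and discarding proposed pairs that already appear in context. The snippet below is a minimal illustration, not the paper's implementation. It assumes the objective is the sum of squared residuals (this section does not state its form), and the function names `objective`, `filter_new_pairs`, and `score_proposals` are hypothetical. The toy data (w_true = 5, b_true = 1, three noise-free points) are invented to reproduce case (b) with unit steps: from (w, b) = (2, 7), increasing w alone or decreasing b alone raises the objective, while changing both lowers it.

```python
from typing import List, Sequence, Tuple

Pair = Tuple[float, float]


def objective(w: float, b: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Sum of squared residuals of y = w * x + b (assumed form of the objective)."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys))


def filter_new_pairs(proposals: Sequence[Pair], history: Sequence[Pair]) -> List[Pair]:
    """Keep only proposed pairs that are absent from history, dropping repeats."""
    seen = set(history)
    fresh = []
    for pair in proposals:
        if pair not in seen:
            seen.add(pair)
            fresh.append(pair)
    return fresh


def score_proposals(
    proposals: Sequence[Pair],
    history: Sequence[Pair],
    xs: Sequence[float],
    ys: Sequence[float],
) -> List[Tuple[Pair, float]]:
    """Score every genuinely new pair with code and sort from best to worst."""
    fresh = filter_new_pairs(proposals, history)
    scored = [(pair, objective(pair[0], pair[1], xs, ys)) for pair in fresh]
    return sorted(scored, key=lambda item: item[1])


# Invented toy data: w_true = 5, b_true = 1, no noise.
xs = [1, 2, 3]
ys = [5 * x + 1 for x in xs]

# The current best solution has w below w_true and b above b_true.
history = [(2, 7)]
print(objective(2, 7, xs, ys))  # 18

# Several samples per step, including a repeat of an old pair and a duplicate.
proposals = [(2, 7), (3, 7), (2, 6), (3, 6), (3, 7)]
for pair, value in score_proposals(proposals, history, xs, ys):
    print(pair, value)
# (3, 6) 11   changing both w and b lowers the objective
# (3, 7) 20   increasing w alone raises it
# (2, 6) 21   decreasing b alone raises it
```

In this toy setting, a single proposal that changes only w or only b would be rejected as worse than the current pair, whereas sampling several pairs per step makes it more likely that one of them changes both coordinates. The effect depends on the step size chosen here and is meant only to make the described behavior concrete.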
