2024-9-30 09:23:0 Author: hackernoon.com

Authors:

(1) Seokil Ham, KAIST;

(2) Jungwuk Park, KAIST;

(3) Dong-Jun Han, Purdue University;

(4) Jaekyun Moon, KAIST.

Table of Links

3. Proposed NEO-KD Algorithm and 3.1 Problem Setup: Adversarial Training in Multi-Exit Networks

4. Experiments and 4.1 Experimental Setup

4.2. Main Experimental Results

4.3. Ablation Studies and Discussions

5. Conclusion, Acknowledgement and References

B. Clean Test Accuracy and C. Adversarial Training via Average Attack

E. Discussions on Performance Degradation at Later Exits

F. Comparison with Recent Defense Methods for Single-Exit Networks

G. Comparison with SKD and ARD and H. Implementations of Stronger Attacker Algorithms

F. Comparison with Recent Defense Methods for Single-Exit Networks

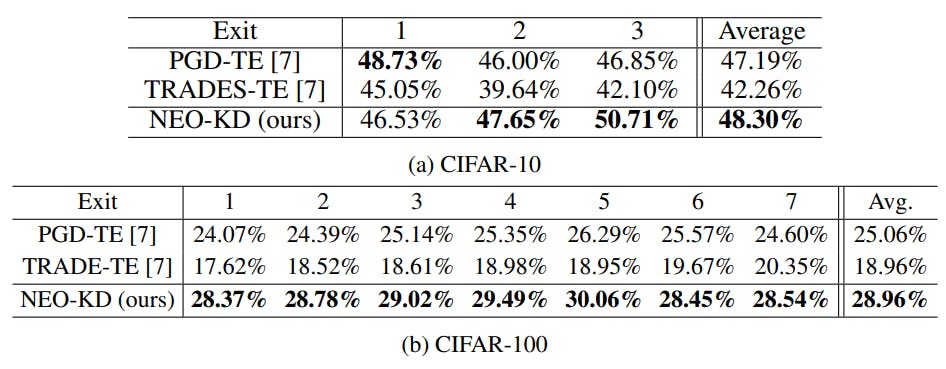

The baselines in the main paper were mostly adversarial defense methods designed for multi-exit networks. In this section, we run additional experiments with a recent defense method, TEAT [7], and compare it with our method. TEAT was originally designed for single-exit networks, so we first adapt it to the multi-exit setting. The original TEAT generates adversarial examples from the final output of the network. Our modified TEAT instead generates adversarial examples that maximize the average loss over all exits of the multi-exit network. Table A3 shows the results under the max-average attack on CIFAR-10/100. NEO-KD, which is designed for multi-exit networks, achieves higher adversarial test accuracy than the TEAT variants (PGD-TE and TRADES-TE), which were designed for single-exit networks. These results highlight the need for adversarial defense techniques tailored to multi-exit networks, rather than adaptations of general defense methods developed for single-exit networks.
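The adaptation described above changes only how adversarial examples are generated: the attack ascends the average loss of all exits instead of the final-exit loss. The following is a minimal, dependency-free sketch of that step under several loudly stated assumptions. It uses a toy model whose exits are linear softmax heads applied directly to the input (a real multi-exit network shares a backbone and would compute gradients with autograd). It uses L-infinity PGD without random initialization. All function names and hyperparameters (`eps`, `alpha`, `steps`) are hypothetical. It is not the authors' TEAT or NEO-KD code, and it omits TEAT's temporal-ensembling regularizer entirely.

```python
import math

def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def exit_logits(W, b, x):
    """Logits of one (toy, assumed) linear exit head: z = W x + b."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

def average_exit_loss_and_grad(exits, x, y):
    """Average cross-entropy over all exits and its gradient w.r.t. the input x.

    For a linear head, dCE/dx = W^T (softmax(z) - onehot(y)).
    """
    loss = 0.0
    grad = [0.0] * len(x)
    for W, b in exits:
        p = softmax(exit_logits(W, b, x))
        loss += -math.log(p[y])
        delta = [pc - (1.0 if c == y else 0.0) for c, pc in enumerate(p)]
        for j in range(len(x)):
            grad[j] += sum(W[c][j] * delta[c] for c in range(len(delta)))
    n = len(exits)
    return loss / n, [g / n for g in grad]

def sign(v):
    """Return +1, 0, or -1 according to the sign of v."""
    return (v > 0) - (v < 0)

def pgd_average_attack(exits, x, y, eps=0.1, alpha=0.02, steps=10):
    """L-infinity PGD that maximizes the average loss of all exits (hypothetical helper)."""
    x_adv = list(x)
    for _ in range(steps):
        _, grad = average_exit_loss_and_grad(exits, x_adv, y)
        # Ascend the averaged loss with a signed-gradient step.
        x_adv = [xa + alpha * sign(g) for xa, g in zip(x_adv, grad)]
        # Project back onto the eps-ball around x, then onto the input range [0, 1].
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]
    return x_adv

# Toy 3-exit, 2-class model on a 2-dimensional input (illustrative weights only).
exits = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[2.0, 0.5], [-0.5, 1.0]], [0.1, -0.1]),
    ([[0.5, -2.0], [0.0, 1.5]], [0.0, 0.2]),
]
x, y = [0.6, 0.4], 0
clean_loss, _ = average_exit_loss_and_grad(exits, x, y)
x_adv = pgd_average_attack(exits, x, y)
adv_loss, _ = average_exit_loss_and_grad(exits, x_adv, y)
print(f"average exit loss: clean={clean_loss:.4f}, adversarial={adv_loss:.4f}")
```

Because every exit contributes equally to the objective, the perturbation cannot concentrate on the final exit alone, which is the difference from the original single-exit attack. In a full training loop, the resulting `x_adv` would feed the (here omitted) PGD-TE or TRADES-TE training objective.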
