Following a brief introduction to differential fuzzing, this blog post reviews the leading tools that leverage it for testing cryptographic primitives. In the second half, we present a method for creating a differential fuzzer along with the results we obtained.

The topic presented in this article is the one I tackled during my end-of-study internship.

Introduction

Testing cryptographic implementations is a challenging task. Test vectors can be used to compare the results of every operation against defined standards. For instance, crypto-condor performs these checks automatically and provides a reference implementation to test with other given inputs. However, memory bugs and undefined behaviors triggered by specific inputs may persist. For instance, a bug was discovered in libgcrypt where digests were wrong for MD5, MD4, and RMD160 when the message size is . By leveraging code coverage, a significant amount of computing power and a bit of luck, fuzzing can identify these inputs. Take the best of both worlds and what you get is differential fuzzing. This approach has demonstrated high effectiveness in bug discovery, as illustrated by the comprehensive list of bugs found by Cryptofuzz. Additionally, it demands low effort if you are already acquainted with fuzzing, as shown in Earn $200K by fuzzing for a weekend: Part 1.

What is Differential Fuzzing?

Differential fuzzing is a fuzzing strategy where two or more similar implementations are provided with the same input and the outputs are compared to find discrepancies.

Usually, fuzzing detects memory bugs, crashes, and undefined behaviors, using sanitizers. The output comparison lets the fuzzer find new types of bugs like logic bugs and incorrect computations. It could for example find CVE-2022-2097 in OpenSSL where under some circumstances AES OCB was failing to encrypt some bytes due to a jb (jump if below) instruction used instead of a jbe (jump if below or equal) for $inp == $len check. Differential fuzzing can also be adapted to identify side channel problems, as explained in the section Finding side channel.

How it works

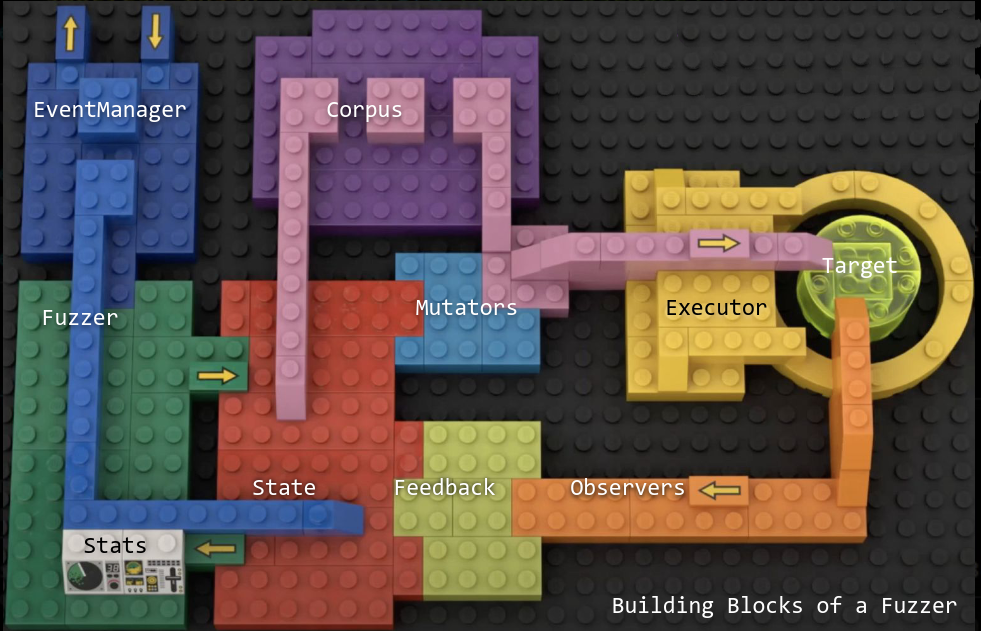

Let's give a quick reminder about how fuzzing works. First, you must feed the fuzzer with a corpus of inputs. The fuzzer then iterates over this corpus. At every iteration, it chooses an input and mutates it. Then this input is given to the program under test via a harness. If the program crashes, the fuzzer reports the crash associated with the input. Optionally, sanitizers can be added to watch the execution and provide feedback. As a picture is worth a thousand words, see Picture 1 for a summary diagram.

Picture 1: Fuzzing overview

Picture 2: Differential fuzzing overview

Now let's dive into differential fuzzing. As shown in Picture 2, the main addition is that there is a new way to report a bug. Note that more than two programs can be tested at the same time. It is more efficient than fuzzing by pairs and it lowers the probability of missing a bug which happens in multiple programs. This may occur if some implementations under test share bits of code for instance. Moreover, the same code can be tested with different modifiers that must still produce the same output. For example, a modifier could be a boolean stating if encryption must be performed in place. Another subtlety is whether the fuzzer should gather the code coverage of every program or only one. Existing tools opt for the former, as an input may explore a new execution path in only one of the programs under test (and still produce the same output). For example, if optimizations are done only for some class of inputs, such inputs should be retained as they are valuable for detecting discrepancies.

Additionally, if a program under test does not implement some functionalities, the harness can return a not comparable output that is ignored by the fuzzer. This mechanism is useful as well if a result is nondeterministic due to certain modifiers used in the input.

Timeline of differential fuzzing in crypto

The first tool that leveraged differential fuzzing in cryptography may be CDF (for Crypto Differential Fuzzing) which was presented at Black Hat USA 2017. However, the inputs are generated using hand-crafted rules, such as test every message length in this range or test point (0,0). As a result, outcomes are close to those achieved with the test-vector approach. A new use-case has been introduced by DifFuzz: finding side-channel weaknesses. The first differential fuzzing tool using mutations and code coverage to find cryptographic vulnerabilities is certainly Cryptofuzz. It has discovered numerous bugs by comparing operations of dozens of cryptographic libraries. On the other hand, for cryptocurrencies, Beaconfuzz_v2 compares eth2 client implementations. The recent paper CLFuzz: Vulnerability Detection of Cryptographic Algorithm Implementation via Semantic-aware Fuzzing is looking to improve the input generation of Cryptofuzz by using different strategies for every input type.

Technical challenges

Input selection

Most differential fuzzing tools are coverage-guided, which means that they update a map of the portions of code accessed at least once. An input that accesses a new piece of code takes priority over the others. For example, in Cryptofuzz, the fuzzer is built against the libraries under test instrumented with sanitizers.

Alternatively, DifFuzz uses custom metrics to select inputs leading to side-channel vulnerabilities (explained in section Finding side channel vulnerabilities).

Finally, the domain-independent differential fuzzer Nezha is guided by a property called δ-diversity. The first step to calculate this metric is to compute the list of edges accessed in each program for a given input and store it in a tuple . Here, an edge is a transition between two basic blocks of instructions that can be followed at the end of the first basic block's execution. The δ-diversity is then the number of unique tuples generated by the corpus. It summarizes the observed asymmetries between the behaviors of multiple test applications. The goal is to test for inputs that may not cover more code, but lead to semantic bugs. However, comparing the full execution path edges would result in too much overhead. Therefore, Nezha's paper introduces two different approximations:

-

Coarse δ-diversity: Get for each input a tuple of the quantity of edges accessed in each program. The coarse diversity is the number of unique tuples achieved in a corpus of inputs. This diversity isn't increased when an edge is replaced by another in an execution. For instance, and both lead to . Coarse δ-diversity is sometimes not relevant, for instance when a loop is executed once for every character of the input message. In that case, the edges of the loop are accessed times (where is the message length). Thus, every input with a different size leads to a unique tuple. The diversity will then increase rapidly and the corpus will be filled with these inputs. Testing different lengths is a good strategy, but in this case a lot of other inputs will be ignored because they are not unique for this metric.

-

Fine δ-diversity: Get for each input a tuple of edge sets accessed at least once in each program. The fine diversity is the number of unique tuples achieved in a corpus of input. When some edges are accessed multiple times during an execution it doesn't count for diversity. and both lead to .

However, this paper shows results in terms of differences found by input tested but ignores performance considerations. Moreover, it has been tested only up to 100000 inputs and the simulation ends when it seems that Nezha is about to be outperformed. It is unclear if it finds more discrepancies than code coverage in the long run.

Input generation

Cryptofuzz generates structured inputs by using JSON format. Each member corresponds to an argument of the cryptographic operation or a type (for example the digest or cipher to test). The inputs are mainly chosen by picking an arbitrary number of random bytes. However, occasionally Bignum and primes are picked from a list of "interesting" numbers taken from known cryptographic bugs. Here is the code for the digest operation:

switch ( operation ) {

case CF_OPERATION("Digest"):

{

parameters["modifier"] = "";

parameters["cleartext"] = getBuffer(PRNG64() % maxSize);

parameters["digestType"] = getRandomDigest();

cryptofuzz::operation::Digest op(parameters);

op.Serialize(dsOut2);

}

break;

CLFuzz on the other hand has a strategy for every input type (key, nonce, message, cipher...) and uses the correct size according to the tested cipher. However, non-standard inputs must also be tested to cover every edge case. For instance, a buffer overflow in OpenSSL has been found when using a key length longer than 2040 bits for RC5. To find this kind of bug, CDF tests encryption once with every key length up to 2 times the correct one. This can be quickly tested at the beginning of a fuzzing campaign.

Another question is on how to decide between generating a new input and mutating an existing one. Cryptofuzz picks one strategy randomly for every input. On the contrary, CLFuzz surprisingly does not mutate at all, and always produces new inputs (so in this case, the code coverage is ignored).

Combining operation

Combining operations can provide additional verification (also called correctness oracle or cross-check) and help find bugs when the output comparison is not sufficient. For example, edge cases that could be wrong in every implementation tested, or non-deterministic parts. Cryptographic operations often have properties that can be verified. For example and should hold when and only when using the same key/keypair in both operations. These properties are checked in CDF and Cryptofuzz.

Finding side channel vulnerabilities

With a small tweak, differential fuzzing can be used to find side-channel vulnerabilities. The goal is to find inputs that maximize the difference in resource consumption between secret-dependent paths. DifFuzz inputs are sliced into three parts: pub, sec1 and sec2. pub represents all the public parameters that an attacker already has access to. sec1 and sec2 are two versions of the sensitive data that could leak through the side-channel. The cost is user-defined, it can be the execution time for instance. The criteria for identifying promising inputs are the code coverage and the cost difference between the two executions. Refer to Picture 3, which summarizes everything.

Picture 3: Side channel oriented differential fuzzing

Existing limitations

- Fuzzing does not work well with non-deterministic code, and it is even worse for differential fuzzing, which loses all its relevance.

- Current tools confront code from different libraries compiled for the same target, but cryptographic libraries often provide assembly optimizations dependent of architecture and instructions set. Cryptofuzz only tests libraries with and without assembly optimizations. Therefore some parts of the code are not tested. In the case where several libraries are available, you could fuzz separately for every configuration without comparing the result between them but it is still not practical.

- Tools like DifFuzz and Beaconfuzz_v2 are specialized for finding side channels or fuzzing Ethereum contracts so you cannot use them in other contexts. So, for example, if you want to look for side-channel vulnerabilities and bugs at the same time, you need to use two tools. At least, the corpus might be reused with some adaptations in these cases.

- Cryptofuzz is more generic but it is a monolithic project, with no documentation on how to implement a new operation or a new module. The whole code structure is difficult to apprehend, and to implement a new operation, changes have to be made in multiple files (some files are thousands of lines long with one function per operation).

- Current tools are mostly code coverage guided but it is not necessarily the best way to find bugs (covered code can still contain non-triggered bugs). Other metrics like δ-diversity may be more relevant with cryptographic function as a target.

- Cryptofuzz can be used only with targets where the source is available because it compiles the library along the tool and instruments it.

Each of these tools comes with pros and cons, and during this internship we tried to leverage their trade-offs to obtain a more generic tool that can do the best of each of those worlds. We detailed each feature used in differential fuzzing in order to be able to implement them, which is what will be presented in next section.

Differential fuzzing DIY

LibAFL

Given the limitations of existing tools, it would be nice to improve upon them by building our own solution! First we have to write again the classical fuzzing part from scratch. But it is quite long and boring, so maybe we can find a way to avoid this. Have you heard about LibAFL?

To quote its creator, "a library that is not just another fuzzer, but a collection of reusable pieces for individual fuzzers." It is a Rust crate providing multiple building blocks for every part of the fuzzing process: input generation and mutation, execution, scheduling...

Illustration from presentation "Fuzzers like LEGO" at RC3 2020 by Andrea Fioraldi & Dominik Maier

Some of the features it provides are quite interesting. The event manager uses the LLMP protocol to scale the fuzzing almost linearly across multiple cores and different hosts. A broker is at the center of this protocol, it gathers and broadcasts new interesting inputs. It also collects the fuzzing statistics from every client and prints them to the user.

Another interesting point is that reusing the same bricks across multiple projects allows us to compare projects more easily by seeing what they share in common and where they differ.

Differential fuzzing

To do differential fuzzing with LibAFL, we need to gather the results of the harnesses and compare them. The result of this comparison will then be used as a feedback to report any discrepancies. The component running the harness is called Executor. Fortunately, a differential executor is provided for differential fuzzing. It wraps two executors (one for each target) and combines the observations made by the observers. The baby_fuzzer_swap_differential example from LibAFL provides a basic usage of this DiffExecutor.

However, if you want to compare more than two targets at once, you will have to either stack multiple DiffExecutor or create a custom one implementing the Executor trait.

Custom input

Now, as we have seen in the input generation section, having structured input can enhance the fuzzer's capabilities. In LibAFL the default input type is BytesInput which is a byte vector. But we can define our own input type, the only restriction is that it should implement the Input trait. Be aware that this means that your input must be serializable and deserializable (the fuzzer may have to store it on files).

The executor must be generic, so it operates without knowing the input type and you don't have to modify it. On the other hand, you can create custom Generator and Mutator that fit your input type and access all the data stored in your input from the harness.

Results

With enough time and dedication, you should end up with a tool capable of easily testing all kinds of cryptographic code by following the previous guidelines. This work has been done during an internship at Quarkslab, hence the second part of this blog post is about the results that we achieved with our differential fuzzer. This tool has not been published yet, but we may open-source it later.

Performance

First, let us speak about the performance achieved and do a comparison against a similar tool: Cryptofuzz. The targets we used for this benchmark are the symmetric encryption algorithms from OpenSSL. We added some debug print to Cryptofuzz so that we get multiple metrics:

- inputs generated: Total number of inputs generated by the fuzzer.

- harness calls: Number of times the OpenSSL harness for symmetric encryption has been called. It is greater than the number of valid inputs because in Cryptofuzz one input can lead to multiple tests with different modifiers.

- valid inputs: Number of inputs with a correct structure. It increases when the fuzzer executor doesn't crash while unpacking the data and goes through the whole differential fuzzing process.

- successful inputs: Number of harness calls that led to the actual encryption of a message.

The conditions of the test were the following: - 1 core - running for 2 hours (on the long term, the conclusion are the same) - with ASAN sanitizer - without any input corpus

Here are the results:

| Cryptofuzz | Our tool | Comparison | |

|---|---|---|---|

| Inputs generated | 12 700 000 | 6 435 824 | - 49% |

| Harness calls | 1 553 869 (12%) | 6 435 824 (100%) | + 314% |

| Valid inputs | 1 021 481 (8%) | 6 435 824 (100%) | + 530% |

| Successful inputs | 375 548 (3%) | 3 150 000 (49%) | + 739% |

The most surprising result of this benchmark is that 92% of inputs generated by Cryptofuzz are invalid. We think that this is due to how these inputs are mutated. The structure of every part of an input in Cryptofuzz is length | data. But the mutations are done by libFuzzer which does not know how the input is formatted. So if the length part is mutated, most of the time the unpacking of the input fails and the target harness is never called.

At first look, you may think that our tool provides a slow execution speed compared to Cryptofuzz. But be aware that the execution of the target (i.e. the encryption process) is the part that takes the most time during fuzzing. Note that our fuzzer tests input messages of length up to 65536 bytes. During testing, we even noticed that at the beginning our tool was faster - around 4200 exec/sec - but as soon as the inputs became mostly successful thanks to the code coverage, the executions per second decreased to around 1000. For comparison, Cryptofuzz is around 1900 on average.

Then you may wonder why we don't compare the most important thing for a fuzzer: the code coverage. First, we cannot compare the number of edges covered because in Cryptofuzz the fuzzer is part of the target from libFuzzer's point of view. For example, code coverage is taken on the code making the result comparison. Second, the coverage depends mainly on how the input is generated and mutated, which is defined by the user in our fuzzer. So, a user could replicate the exact behavior of Cryptofuzz if he wanted to obtain the same results. Also, it is possible to achieve 100% successful inputs by generating only valid inputs (correct key size, tag size, ...). But we choose not to do so because it would increase the fuzzer's complexity and the checks done by the target would not be tested anymore.

Bugs found

We tested our tool on two open-source projects containing cryptographic implementations. As a result, we discovered a buffer over-read vulnerability and two bugs leading to a verification failure. Reporting is in progress, so we cannot share more details.

In the end, we noticed that the bugs found emphasize that the quality of the input generation and the design of the harness are as important as the efficiency of the fuzzer. For a successful fuzzing campaign, your input generation and harness should restrict the test range to the one the target can handle, while still testing edge cases.

Acknowledgment

Particular thanks to Dahmun Goudarzi for supervising my internship and to Jeremy Marchand for helping me during my research about fuzzing and LibAFL. Being in Quarkslab's unique R&D environment allowed me to conclude my studies nicely by creating my own tool. Hopefully, we will continue to find a lot of bugs with it!

Conclusion

While state-of-the-art cryptographic fuzzing tools offer interesting capabilities, they are often overly specialized and challenging to use. Thanks to the LibAFL fuzzing library, we were able to develop a modular differential fuzzer capable of discovering various types of bugs in a wide range of cryptographic implementations.

If you would like to learn more about our security audits and explore how we can help you, get in touch with us!

如有侵权请联系:admin#unsafe.sh