2024-10-17 03:0:20 Author: hackernoon.com(查看原文) 阅读量:4 收藏

Authors:

(1) Haleh Hayati, Department of Mechanical Engineering, Dynamics and Control Group, Eindhoven University of Technology, The Netherlands;

(2) Nathan van de Wouw, Department of Mechanical Engineering, Dynamics and Control Group, Eindhoven University of Technology, The Netherlands;

(3) Carlos Murguia, Department of Mechanical Engineering, Dynamics and Control Group, Eindhoven University of Technology, The Netherlands, and with the School of Electrical Engineering and Robotics, Queensland University of Technology, Brisbane, Australia.

Table of Links

General Guidelines for Implementation

IV. PRIVACY GUARANTEES

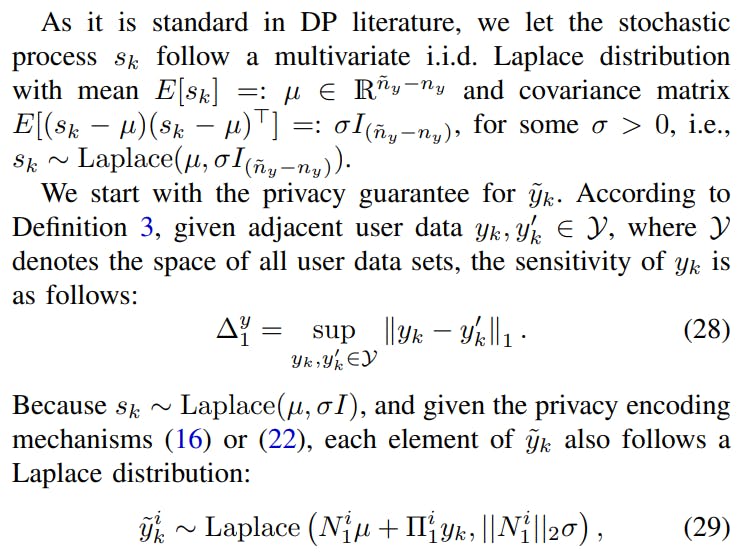

A privacy-level analysis of random affine maps of the form (7) has been discussed in the literature, see [33] (cryptanalysis) and [34] (DP). However, the guarantees they show are for square and invertible encoding matrices. In our scheme, we cannot directly apply those results as our encoding matrices (Π1 and Π3) are rectangular (tall matrices). We need rectangular matrices to be able to exploit their kernels to remove the injected distortion at the user side. A complication with this architecture is that the covariance matrix of N1sk (which is part of the user data encoding mechanism) is degenerate regardless of the probability distribution of sk. The latter prevents us from using off-the-shelf tools to design the affine maps to guarantee differential privacy.

In what follows, we provide a tailored solution to guarantee DP for the class of mechanisms that we consider. In particular, we prove that the proposed scheme, with full-column rank encoding matrices, can provide any desired level of differential privacy without reducing the accuracy and performance of the original algorithm.

A. Differential Privacy

Definition 1 [Adjacency] Let X denote the space of all possible datasets. We say that D ∈ X and D′ ∈ X are adjacent if they differ on a single element.

Definition 2 [(ϵ, δ)-Differential Privacy [35]] The random mechanism M : X → R with domain X and range R is said to provide (ϵ, δ)-differential privacy, if for any two adjacent datasets D, D′ ∈ X and for all measurable sets S ⊆ R:

If δ = 0, M is said to satisfy ϵ-differential privacy. From Definition 2, we have that a mechanism provides DP if its probability distribution satisfies (25) for some ϵ and δ. Then, if we seek to design the mechanism to guarantee DP, we need to shape its probability distribution. This is usually done by injecting noise into the data we seek to encode. The noise statistics must be designed in terms of the sensitivity of the data to be encoded. Sensitivity refers to the maximum change of the data due to the difference in a single element of the dataset.

Definition 3 (Sensitivity) : Given two adjacent datasets D, D′ ∈ X , and a query function q : X → R (a deterministic function of datasets). The sensitivity of q(·) is formulated as

A differential privacy mechanism M based on the sensitivity (26) needs to be designed so that the differential privacy condition (25) holds.

B. Immersion-based Coding Differential Privacy Guarantee

Solution to Problem 3

where (a) follows from the following triangle inequality

Due to the sensitivity relation (28), we have

Hence, inequality (30) implies

C. Perfect Security

A perfectly secure cryptosystem is a secure system against adversaries with unlimited computing resources and time, see [36]. In [37], Shannon proves that a necessary condition encryption method to be perfectly secure is that the uncertainty of the secret key is larger than or equal to the uncertainty of the plaintext, see [38]. He proposes a one-time pad encryption scheme in which the key is randomly selected and never used again. The one-time pad gives unbounded entropy of the key space, i.e., infinite key space, which provides perfect security. Perfect secrecy is lost when the key is not random or if it is reused. This perfect security idea can be used to assess the performance of our proposed coding mechanism. Given the fact that in the proposed mechanism, (7), the encoding keys, (Π1, sk) and (Π3, Π4N1sk), are random, change in every iteration, and provide an infinite-dimensional key space (the support of sk), can be considered perfectly secure.

Perfect secrecy of the proposed coding mechanisms can also be concluded from the differential privacy point of view. In [39], the authors define perfect secrecy in terms of DP. They state that a mechanism M : X → R, with domain X and range R is perfectly secret if

for any two adjacent D, D′ ∈ X and for all measurable sets S ⊆ R. Then, the output of the mechanism reveals no information about private data. Comparing the definition of perfect secrecy in (37) and the definition of differential privacy (Definition 2), we can conclude that a differentially private mechanism is perfectly secret if (ϵ, δ) equal zero.

如有侵权请联系:admin#unsafe.sh