2024-10-18 23:50:35 Author: hackernoon.com

Table of Links

2 Architectural details and 2.1 Sparse Mixture of Experts

3.1 Multilingual benchmarks, 3.2 Long range performance, and 3.3 Bias Benchmarks

6 Conclusion, Acknowledgements, and References

2 Architectural details

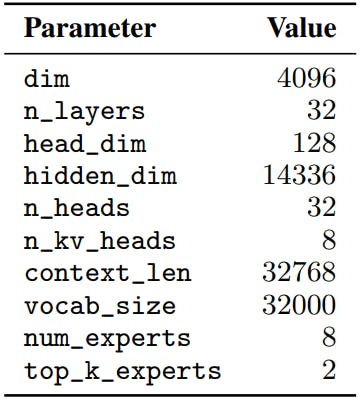

Mixtral is based on a transformer architecture [31] and uses the same modifications as described in [18], with two notable exceptions: Mixtral supports a fully dense context length of 32k tokens, and the feed-forward blocks are replaced by Mixture-of-Experts (MoE) layers (Section 2.1). The model architecture parameters are summarized in Table 1.

2.1 Sparse Mixture of Experts

We present a brief overview of the Mixture of Experts layer (Figure 1). For a more in-depth overview, see [12]. The output of the MoE module for a given input x is determined by the weighted sum of the outputs of the expert networks, where the weights are given by the gating network’s output. That is, given n expert networks $\{E_0, E_1, \ldots, E_{n-1}\}$, the output of the expert layer is given by:

$$\sum_{i=0}^{n-1} G(x)_i \cdot E_i(x)$$

Here, $G(x)_i$ denotes the gating network’s output for the $i$-th expert, and $E_i(x)$ is the output of the $i$-th expert network.
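The weighted sum described above can be sketched in a few lines of plain Python. This is a minimal illustration and not the authors' implementation. It assumes the gate weights come from a softmax over the k largest gate logits, which is one common choice. The names `top_k_softmax` and `moe_layer`, the toy experts, and the example logits are all hypothetical.

```python
import math

def top_k_softmax(logits, k):
    """Softmax over the k largest logits; every other gate is exactly 0.0.

    Assumption: this gating rule is chosen for illustration only.
    """
    # Indices of the k largest logits (ties keep their original order).
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - m) for i in top}
    total = sum(exps.values())
    return [exps[i] / total if i in exps else 0.0 for i in range(len(logits))]

def moe_layer(x, experts, gate_logits, k=2):
    """Return sum_i gate_i * expert_i(x), skipping experts whose gate is zero."""
    gates = top_k_softmax(gate_logits, k)
    out = [0.0] * len(x)
    for gate, expert in zip(gates, experts):
        if gate == 0.0:
            continue  # sparse gate: this expert is never evaluated
        y = expert(x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, gates

# Hypothetical toy experts: each one just scales its input by a constant.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
gate_logits = [0.0, 1.0, 1.0, -1.0]  # made-up gating network output for one token

output, gates = moe_layer([1.0, 2.0], experts, gate_logits, k=2)
print(gates)   # only experts 1 and 2 receive non-zero weight
print(output)
```

Because the gate vector is sparse, only the selected experts are evaluated, so the compute per token depends on k and not on the total number of experts n.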

MoE layers can be run efficiently on single GPUs with high-performance specialized kernels. For example, Megablocks [13] casts the feed-forward network (FFN) operations of the MoE layer as large sparse matrix multiplications, significantly enhancing the execution speed and naturally handling cases where different experts get a variable number of tokens assigned to them. Moreover, the MoE layer can be distributed to multiple GPUs through standard Model Parallelism techniques, and through a particular kind of partitioning strategy called Expert Parallelism (EP) [28]. During the MoE layer’s execution, tokens meant to be processed by a specific expert are routed to the corresponding GPU for processing, and the expert’s output is returned to the original token location. Note that EP introduces challenges in load balancing, as it is essential to distribute the workload evenly across the GPUs to prevent overloading individual GPUs or hitting computational bottlenecks.
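The dispatch-and-return pattern of Expert Parallelism can be illustrated with a small simulation. This is a sketch under simplifying assumptions, not a real multi-GPU implementation. Devices are plain dictionary keys, each token is assigned to a single expert for brevity, and the names `dispatch`, `run_expert_parallel`, and `expert_to_device` are hypothetical.

```python
from collections import defaultdict

def dispatch(token_to_expert, expert_to_device):
    """Group (position, expert) pairs by the device that hosts the expert."""
    per_device = defaultdict(list)
    for pos, expert in enumerate(token_to_expert):
        per_device[expert_to_device[expert]].append((pos, expert))
    return dict(per_device)

def run_expert_parallel(tokens, token_to_expert, experts, expert_to_device):
    """Route tokens to devices, apply experts, and write results back in place."""
    outputs = [None] * len(tokens)
    buckets = dispatch(token_to_expert, expert_to_device)
    for device, items in buckets.items():
        # In a real system this loop body would run on the given device.
        for pos, expert in items:
            outputs[pos] = experts[expert](tokens[pos])  # return to original slot
    load = {device: len(items) for device, items in buckets.items()}
    return outputs, load

# Hypothetical setup: 4 toy experts spread over 2 simulated devices.
experts = [lambda v: v + 10, lambda v: v * 2, lambda v: v - 1, lambda v: -v]
expert_to_device = {0: 0, 1: 0, 2: 1, 3: 1}
tokens = [1, 2, 3, 4, 5]
token_to_expert = [0, 2, 0, 1, 0]  # made-up routing decisions

outputs, load = run_expert_parallel(tokens, token_to_expert, experts, expert_to_device)
print(outputs)  # results appear at the original token positions
print(load)     # tokens handled per device
```

In this made-up routing, device 0 handles four tokens while device 1 handles one, which is the kind of imbalance the load-balancing remark above refers to.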

This formulation is similar to the GShard architecture [21], with the exceptions that we replace all FFN sub-blocks by MoE layers while GShard replaces every other block, and that GShard uses a more elaborate gating strategy for the second expert assigned to each token.

[1] https://mistral.ai/news/mixtral-of-experts/

Authors:

(1) Albert Q. Jiang;

(2) Alexandre Sablayrolles;

(3) Antoine Roux;

(4) Arthur Mensch;

(5) Blanche Savary;

(6) Chris Bamford;

(7) Devendra Singh Chaplot;

(8) Diego de las Casas;

(9) Emma Bou Hanna;

(10) Florian Bressand;

(11) Gianna Lengyel;

(12) Guillaume Bour;

(13) Guillaume Lample;

(14) Lélio Renard Lavaud;

(15) Lucile Saulnier;

(16) Marie-Anne Lachaux;

(17) Pierre Stock;

(18) Sandeep Subramanian;

(19) Sophia Yang;

(20) Szymon Antoniak;

(21) Teven Le Scao;

(22) Théophile Gervet;

(23) Thibaut Lavril;

(24) Thomas Wang;

(25) Timothée Lacroix;

(26) William El Sayed.
