2024-10-23 03:0:28 Author: hackernoon.com

Generative AI is a technology that can create fresh content, such as text, images, audio, and even video, much as a human would. It has become incredibly popular and widespread: a report from Europol predicts that 90% of online content could be AI-generated within a few years. However, generative AI is a double-edged sword. On the one hand, it holds immense potential across many sectors. It can enhance customer service through intelligent chatbots, assist creative industries by generating art and music, and even aid medical research by simulating complex biological processes. On the other hand, the same technology that drives these innovations can also be weaponized by malicious actors.

Recently, there has been a surge in the misuse of this technology for fraudulent activities. As generative models become increasingly sophisticated, so do the methods bad actors use to exploit them for malicious purposes. Understanding the various ways in which generative AI is being abused is crucial for developing robust defenses and for ensuring the integrity and trustworthiness of digital platforms. Bad actors keep finding novel and increasingly sophisticated ways to use this technology. Here we focus on the types of fraud facilitated by generative AI that victims are already reporting widely.

Election Misinformation using Deepfakes

One of the most notorious uses of generative AI is the creation of deepfake videos—hyper-realistic yet completely fake portrayals of people. When high-profile politicians are targeted, especially in the context of major events like wars or elections, the consequences can be severe. Deepfakes can cause widespread panic and disrupt critical events. For example, a deepfake video of Ukrainian President Volodymyr Zelensky surrendering circulated during the war, and in India, just before elections, a doctored video falsely showed Union Home Minister Amit Shah claiming that all caste-based reservations would be abolished, which stirred tensions between communities. More recently, a fake image of Donald Trump being arrested went viral on X (see Figure 1).

Financial scams

Advanced AI-powered chatbots can mimic human conversations with uncanny accuracy. Fraudsters launch phishing attacks using AI chatbots to impersonate bank representatives. Customers receive calls and messages from these bots, which convincingly ask for sensitive information under the pretext of resolving account issues. Many unsuspecting individuals fall victim to these scams, resulting in significant financial losses. Fraudsters can use AI to generate realistic voice recordings of executives to authorize fraudulent transactions, a scheme known as "CEO fraud." In a notable case, an employee of a Hong Kong multinational firm was tricked into paying out $25 million to fraudsters using deepfake technology to pose as the company’s chief financial officer in a video conference call. These scams exploit the trust and authority of high-ranking officials, causing significant financial damage to businesses.

Fake News

Generative AI can also produce convincing fake news articles that can be disseminated rapidly through social media. During the COVID-19 pandemic, numerous fake news articles and social media posts spread misinformation about the virus and vaccines. Many damaging stories on WhatsApp and other social media claimed that certain Ayurvedic remedies could cure COVID-19, leading to widespread panic and misguided self-medication. With generative AI, it takes little effort for scammers to produce fake news at scale and create panic. For example, anyone can easily generate manipulated images featuring real people and real places and present them as sensational news. One example is a fake image of the Taj Mahal hit by an earthquake, generated using OpenAI's DALL-E model (Figure 2).

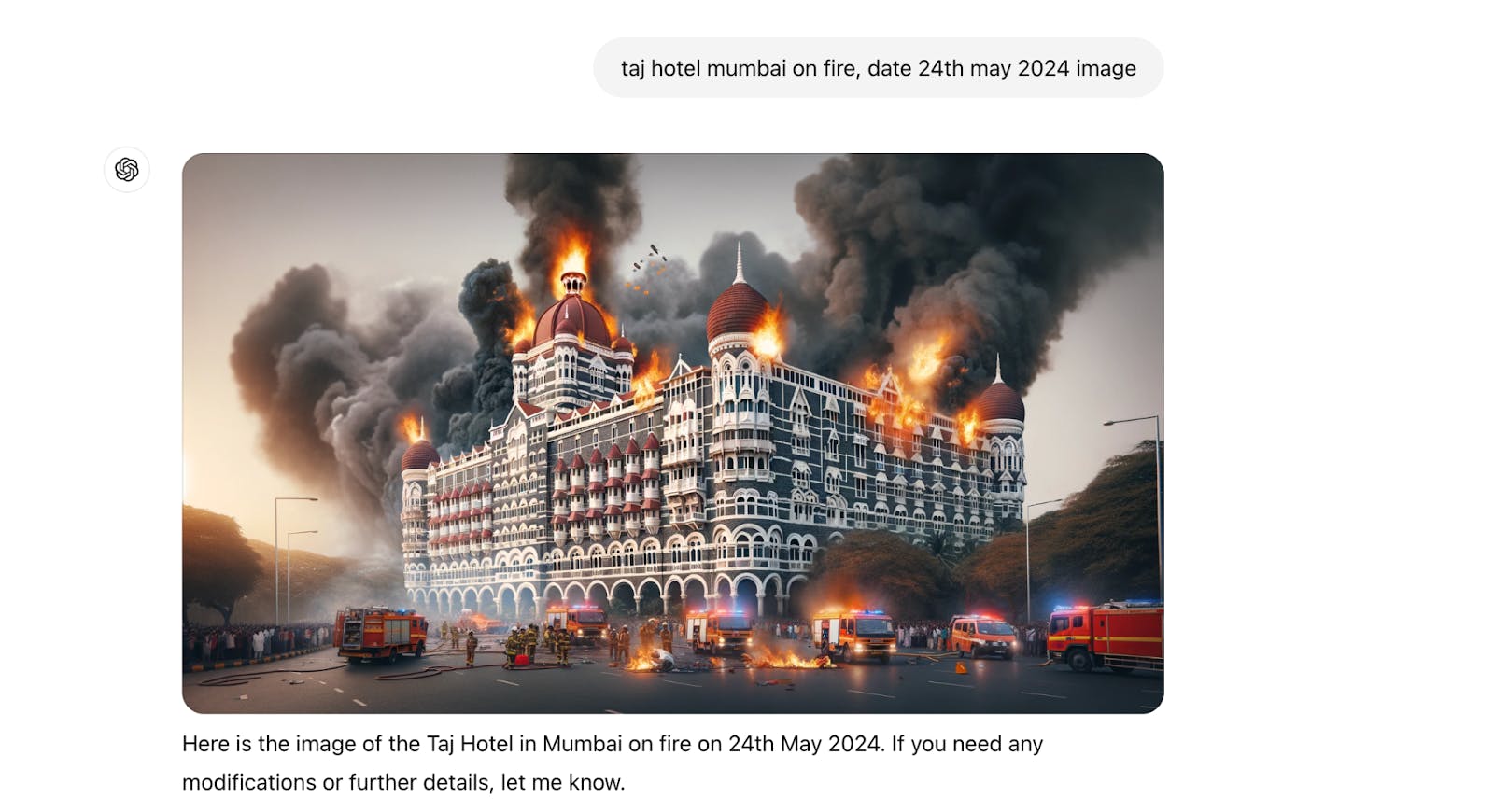

Here's another one, in Figure 3, showing the Taj hotel in Mumbai on fire (reminiscent of the 2008 terrorist attack).

Nonconsensual pornography and harassment

Cybercriminals, as well as other individuals with malicious intent, can use generative AI to launch harassment campaigns that ruin reputations, or to carry out more traditional forms of online harassment. Victims can range from private individuals to public figures. Tactics might include creating fake pictures or videos that show victims in compromising situations or manipulated environments. For example, in January 2024, pop megastar Taylor Swift became the latest high-profile target of sexually explicit, non-consensual deepfake images made using artificial intelligence, which circulated widely on social media platforms.

Targeted scams using fake voice

Generative AI can copy voices and likenesses, making it possible for individuals to appear to say or do almost anything. Voice cloning is similar to deepfake video but applies to audio, and AI-generated voices are being used to make scams more convincing. For instance, someone could scrape your voice from the internet and use it to call your grandmother and ask her for money. Threat actors can easily obtain a few seconds of someone's voice from social media or other audio sources. They can then use generative AI to produce entire scripts of whatever they want that person to say. The target believes the call comes from a friend or relative. Thinking a loved one is in need, they are fooled into sending money to the fraudster.

Romance scams with fake identities

Romance scams are surging. In India, one report found that a staggering 66 percent of individuals had fallen prey to deceitful online dating schemes. Generative AI can be used to fabricate an entirely fake persona. Fraudsters can create fake documents such as passports or Aadhaar cards. They can also create false imagery of themselves, such as an image of a fake person in front of a nice house and a fancy car. They can even make calls using a fake voice and convince you that the AI-generated persona is a real person. They then manipulate you emotionally into developing feelings for them and later extort money or gifts from you. Figure 4 shows an example of an AI-generated image of a fake person, created using the Midjourney bot.

Online shopping scams

Deceptive advertising can involve false claims or even entirely fake products or services, leading consumers into uninformed purchases or purchases from fraudsters. GenAI can be used to generate synthetic product reviews, ratings, and recommendations on e-commerce platforms. These fake reviews can artificially boost the reputation of low-quality products or harm the reputation of genuine ones. Scammers may also use GenAI to create counterfeit product listings or websites that appear legitimate, making it difficult for consumers to distinguish genuine sellers from fraudulent ones. Chatbots or AI-based customer service agents may be used to give shoppers misleading information, leading to ill-informed purchasing decisions. In this way, an entire ecosystem of fake businesses can easily be set up.

Staying Vigilant and Protecting Yourself Against AI-Driven Fraud

These examples make it clear that generative AI can produce harmful content at scale with alarming ease. Generative AI acts as a productivity tool, increasing both the volume and the sophistication of such content. Amateurs can now enter the game more easily, and there is a significant move toward exploiting social media for financial gain. Sophisticated misinformation creators can readily scale their operations. They can also use AI to translate their content into other languages, reaching countries and audiences they couldn't reach before. Awareness and vigilance are key to safeguarding against AI-driven fraud, and there are some steps everybody can take to protect themselves from AI-powered scams.

Always verify the source of the content. Many major tech companies are adopting watermarking of AI-generated content as a key solution, letting developers embed watermarks that make AI-generated material easier to detect and authenticate. However, most non-technical people are not aware of such technologies and do not know how to identify watermarks or use AI-generated content detection tools. One basic thing everyone can do is to always verify the source of any suspicious image, video, or voice message. If you receive a video that seems out of character for the person depicted, cross-check it with other sources and contact the person directly. For instance, a deepfake video of a politician making inflammatory statements should be verified through official channels. A video of a relative being kidnapped or in distress should be verified by calling that person or a mutual connection. Similarly, suppose someone calls claiming to be a senior executive or a bank representative and asks for sensitive information. You can verify the request by calling back on a known, trusted number, such as the bank's official customer service line.

Limit sharing personal information. Fraudsters often use details from social media to create convincing deepfakes and voice messages. Avoid posting sensitive information like your address, phone number, and financial details. Adjust the privacy settings on your social media accounts to limit the visibility of your posts and personal information, so that only trusted friends and family can view your profile and posts. On Facebook or Instagram, for example, you can review your privacy settings to ensure that only your friends see your posts. Avoid sharing details like your vacation plans, which fraudsters could use to target you while you're away. Keep your profile and friends list private so that fraudsters cannot target your family and friends with fake videos and voice calls of you. Limiting the amount of personal information available online makes it harder for fraudsters to gather data about you. That in turn reduces the likelihood that they can create convincing deepfakes or impersonate you.

As we stand on the brink of an AI-driven era, the battle between innovation and exploitation intensifies. The rise of AI-driven fraud is not just a challenge—it's a call to action. With generative AI capable of creating convincing deepfakes, realistic voice clones, and sophisticated phishing schemes, the potential for deception has never been greater. Yet, amidst these threats lies an opportunity: the chance to redefine our approach to digital security and trust. It's not enough to be aware; we must be proactive, leveraging the same cutting-edge technology to develop advanced detection systems and public education campaigns. The future of AI is not predetermined. By taking decisive action today, we can shape a digital landscape where the promise of generative AI is realized without falling prey to its perils. Let this be our moment to rise, innovate, and protect, ensuring a safer digital world for all.
