2024-10-25 02:50:14 Author: securityboulevard.com(查看原文) 阅读量:3 收藏

Introduction

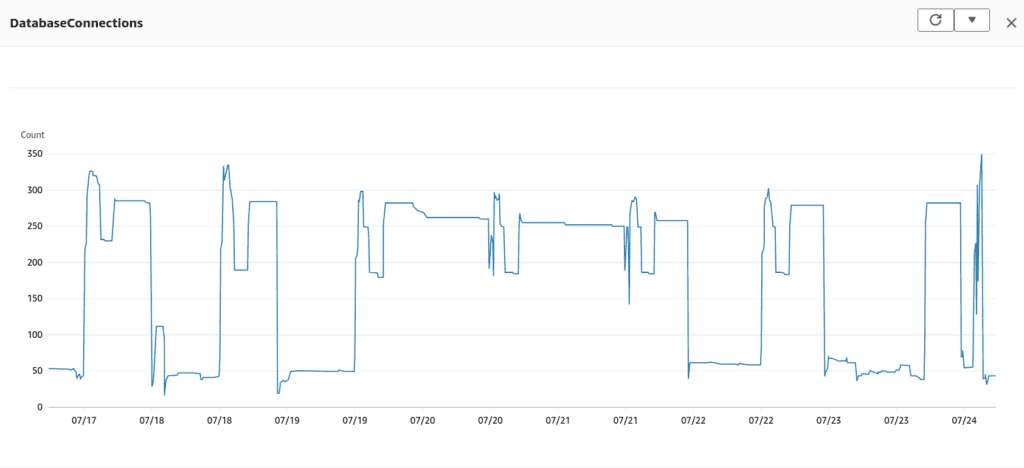

In a recent migration from a standard RDS DB instance to Aurora RDS PostgreSQL Serverless V2, we encountered an unexpected issue. A significant and unexplained increase in database connections. This anomaly led to spikes in resource utilization, specifically memory, and caused a noticeable rise in operational costs. After a thorough investigation, we identified RDS Proxy as the root cause.

This blog details our troubleshooting process, the steps we took to investigate the issue, and how we ultimately resolved it.

Problem Overview

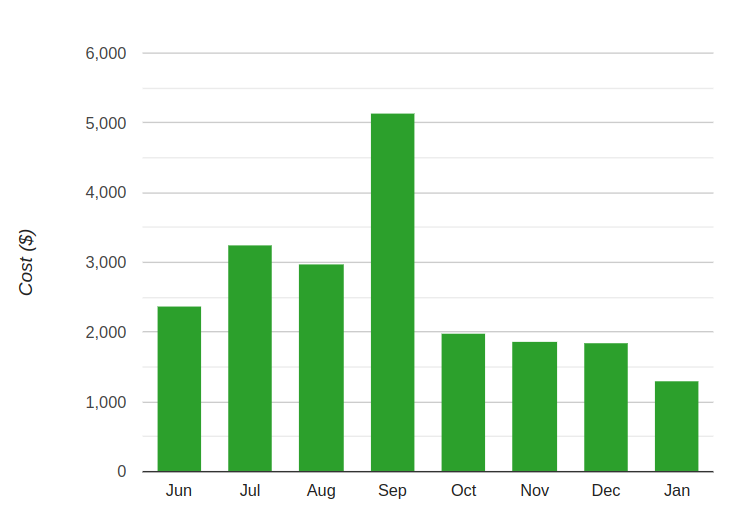

Shortly after migrating to Aurora RDS PostgreSQL Serverless V2, we observed memory utilization spikes that were not aligned with our application’s transaction load, query execution, or routine maintenance operations like autovacuum. Despite database optimizations, the issue persisted, and our RDS costs continued to escalate.

Initial Troubleshooting

Our first step was to optimize the database to rule out common causes of increased memory usage:

-

- VACUUM Operations: We executed analyze & vacuum commands on the database to clear up any dead tuples that might be inflating memory usage.

ANALYZEVACUUM

- VACUUM Operations: We executed analyze & vacuum commands on the database to clear up any dead tuples that might be inflating memory usage.

-

- Index Optimization: We identified and addressed bloated indexes, reducing their size and improving query performance using

pg_repacka postgresql extension.pg_repack -h rds-forward.proxy-xxxxxxxxx.ap-southeast-1.rds.amazonaws.com -U db_user -d db_name -t schema.table_name -k

- Index Optimization: We identified and addressed bloated indexes, reducing their size and improving query performance using

Despite these efforts, the memory spike persisted, and our RDS costs were soaring like a rocket.

Investigation Process

To pinpoint the issue, we analyzed RDS metrics over a period of several weeks. We noticed an unusually high number of database connections during periods when the application load was low. This observation led us to focus on the following areas:

-

- RDS Proxy Logs: We reviewed the logs generated by RDS Proxy to understand the connection patterns.

-

- Database Maximum Connections: We examined the maximum connection settings on the database to ensure they were within expected limits.

-

- RDS Proxy Connection Pool Settings: We looked into how RDS Proxy was managing its connection pool, particularly the maximum connections allowed and how idle connections were being handled.

Our database thought it was at a never-ending party, with connections multiplying like confetti. Time to turn down the music and clean up this digital disco.

Root Cause Analysis

The investigation revealed that RDS Proxy was at the heart of the issue. We found that RDS Proxy was maintaining a large number of idle connections, which were being reused inefficiently. These connections were only timing out after the default 24-hour period, which led to excessive resource consumption.

Resolution

We identified that the issue stemmed from the MaxIdleConnectionsPercent parameter in AWS RDS Proxy. This parameter wasn’t readily accessible through the console, so we used the AWS CLI to make the necessary adjustments.

Here’s how we tweaked the parameters according to our workloads

First, we listed out the rds proxy configuration using the below aws cli command:

aws rds describe-db-proxy-target-groups --db-proxy-name dsr-forward --region ap-southeast-1output:

{

"TargetGroups": [

{

"DBProxyName": "dsr-forward",

"TargetGroupName": "default",

"TargetGroupArn": "arn:aws:rds:ap-southeast-1:863547483732:target-group:prx-tg-0a926g7b3ddyg8j0f",

"IsDefault": true,

"Status": "available",

"ConnectionPoolConfig": {

"MaxConnectionsPercent": 100,

"MaxIdleConnectionsPercent": 50,

"ConnectionBorrowTimeout": 120,

"SessionPinningFilters": []

},

"CreatedDate": "2021-10-25T12:07:51.836000+00:00",

"UpdatedDate": "2023-06-24T09:02:25.222000+00:00"

}

]

}AWS CLI Reference – Here

Here’s what each parameter generally means:

-

- MaxConnectionsPercent: The maximum size of the connection pool for each target in a target group. The value is expressed as a percentage of the

max_connections. Default value is100for postgresql engine.

- MaxConnectionsPercent: The maximum size of the connection pool for each target in a target group. The value is expressed as a percentage of the

-

- MaxIdleConnectionsPercent: A value that controls how actively the proxy closes idle database connections in the connection pool. The value is expressed as a percentage of the

max_connections. With a high value, the proxy leaves a high percentage of idle database connections open. A low value causes the proxy to close more idle connections and return them to the database.If you specify this parameter, then you must also include a value forMaxConnectionsPercent. The default value is half of the value ofMaxConnectionsPercent.

- MaxIdleConnectionsPercent: A value that controls how actively the proxy closes idle database connections in the connection pool. The value is expressed as a percentage of the

Example-1:

You have a database that can handle up to 500 connections at a time. To find out how many of those connections can be idle (not in use) at any given time, you use a percentage.

Here’s how you calculate it:

Formula:

MaxIdleConnections = (MaxIdleConnectionsPercent / 100) × MaxConnectionsInput:

MaxIdleConnectionsPercent: 50%So, if your database allows 500 connections in total, and you set the MaxIdleConnectionsPercent to 50%, then the number of idle connections allowed would be:

Max Idle Connections = (50 / 100) × 500 = 250This means out of 500 connections, 250 can be idle.

Example-2:

You have a database that allows 2000 connections at a time and MaxIdleConnectionsPercent is 10%.

Now, using the formula:

Max Idle Connections = (10 / 100) × 2000 = 200This means out of 2000 connections, 200 can be idle.

Adjusting our workloads

We fine-tuned the MaxConnectionsPercent and MaxIdleConnectionsPercent parameters in AWS RDS Proxy to better suit our needs.

Here’s how we updated them:

Input:

aws rds modify-db-proxy-target-group \

--target-group-name default \

--db-proxy-name dsr-forward \

--region ap-southeast-1 \

--connection-pool-config MaxConnectionsPercent=50,MaxIdleConnectionsPercent=10output:

{

"DBProxyTargetGroup": {

"DBProxyName": "dsr-forward",

"TargetGroupName": "default",

"TargetGroupArn": "arn:aws:rds:ap-southeast-1:863547483732:target-group:prx-tg-0a926g7b3ddyg8j0f",

"IsDefault": true,

"Status": "available",

"ConnectionPoolConfig": {

"MaxConnectionsPercent": 50,

"MaxIdleConnectionsPercent": 10,

"ConnectionBorrowTimeout": 120,

"SessionPinningFilters": []

},

"CreatedDate": "2021-10-25T12:07:51.836000+00:00",

"UpdatedDate": "2023-09-14T10:37:09.421000+00:00"

}

}By setting this parameter to a minimal value, we restored balance to our database environment, and everything began running smoothly once again. Additionally, we saw RDS Serverless costs decrease by 50% due to significantly lower ACU consumption, as there were fewer active connections.

And so, our database party came to an end, leaving us with a valuable lesson: keep an eye on those resources, or you might find yourself footing the bill for a tech-tacular blowout.

Our journey dealing with high connections in Aurora Serverless V2 was both challenging and rewarding. We learned the crucial importance of meticulous monitoring and analysis, and the value of keeping up with the latest features and parameters. By sharing our experience, we aim to help other engineers avoid similar pitfalls and optimize their Aurora Serverless deployments.

如有侵权请联系:admin#unsafe.sh