2024-11-7 06:29:17 Author: securityboulevard.com

In our previous article, we demonstrated how sensitive information (PII/PHI) can be retrieved from vector embeddings in Retrieval-Augmented Generation (RAG) systems. We showed that text embeddings containing sensitive data can expose personally identifiable information with alarming accuracy. In a follow-up, we outlined how to create de-identified embeddings with Tonic Textual and Pinecone as a method to safeguard against this risk.

In this article, we take the investigation further to address a critical question: How does de-identifying text before embedding impact retrieval performance? The answer, perhaps surprisingly, is that de-identifying your data prior to embedding has virtually no effect on retrieval quality. With normal redaction settings, mean reciprocal rank (MRR) on our test dataset dropped by only about one percentage point (from 0.463 to 0.452).

Even more promising, that minor variance was measured on a public dataset containing information about well-known public figures, data that an LLM may already recognize. For private data (e.g., user information behind a company firewall), our embedding analysis suggests that the impact of de-identification on retrieval quality is even smaller.

Curious to see the details? Read on for our experimental findings, and try it yourself with our code in this Colab notebook.

Processing the dataset

To test retrieval performance, we used the TREC-news dataset. Each entry in the dataset contains a query along with the correct text chunk that should be retrieved to answer it. Below is the first entry in the dataset.

Data sampling, de-identifying, and embedding text chunks

We randomly sampled 1000 queries and corresponding text chunks from TREC-news.
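A minimal sketch of the sampling step, assuming the dataset has already been loaded into a list of query/chunk pairs. The `QueryChunkPair` record and the seed value are hypothetical illustrations, not the notebook's schema or seed. Using a dedicated, seeded random generator makes the 1000-query sample reproducible across runs.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryChunkPair:
    """Hypothetical record: one query and the chunk that answers it."""
    query_id: str
    query: str
    chunk_id: str
    chunk_text: str


def sample_pairs(pairs: list[QueryChunkPair], n: int = 1000,
                 seed: int = 42) -> list[QueryChunkPair]:
    """Draw n distinct pairs without replacement, reproducibly."""
    if n > len(pairs):
        raise ValueError(f"cannot sample {n} pairs from {len(pairs)}")
    rng = random.Random(seed)  # local generator; global random state is untouched
    return rng.sample(pairs, n)
```

Sampling whole pairs, rather than queries and chunks separately, keeps every query attached to its correct chunk.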

We copied these text chunks and used Tonic Textual to de-identify the copies. This gave us two groups of text chunks: the originals, which still contain sensitive data, and a de-identified group with that sensitive data redacted.
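Tonic Textual detects sensitive entities with trained models. The toy function below is only a stand-in to show the shape of this step: each original chunk keeps its id and gets a redacted twin, so the two groups stay aligned for later comparison. It is a hypothetical regex-based redactor that handles only email addresses and US-style phone numbers. The placeholder tokens `[EMAIL]` and `[PHONE]` are our own choice, not Tonic Textual's output format.

```python
import re

# Toy patterns only: a real de-identification tool covers far more entity types.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}\b")


def toy_redact(text: str) -> str:
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def build_groups(chunks: dict[str, str]) -> tuple[dict[str, str], dict[str, str]]:
    """Return (original, redacted) groups keyed by the same chunk ids."""
    original = dict(chunks)  # untouched copy of the source chunks
    redacted = {cid: toy_redact(text) for cid, text in chunks.items()}
    return original, redacted
```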

We then embedded both groups of text chunks to create two groups of embeddings.
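The essential constraint in this step is that both groups, and later the queries, pass through the same embedding model. That way, any difference in retrieval comes from the redaction and not from the model. The sketch below uses a toy hashed bag-of-words function as a stand-in for a real embedding model. `toy_embed` and its 64-dimension size are hypothetical and chosen only so the example runs without network access or API keys.

```python
import math
import re
import zlib

DIM = 64  # hypothetical size; real embedding models use hundreds of dimensions or more


def toy_embed(text: str, dim: int = DIM) -> list[float]:
    """Hashed bag-of-words vector, L2-normalized (stand-in for a real model)."""
    vec = [0.0] * dim
    for token in re.findall(r"\w+", text.lower()):
        # crc32 is stable across processes, unlike Python's salted hash()
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def embed_group(group: dict[str, str]) -> dict[str, list[float]]:
    """Embed every chunk in a group, keeping its id."""
    return {cid: toy_embed(text) for cid, text in group.items()}
```

Calling `embed_group` once on the original group and once on the redacted group yields the two sets of embeddings, still keyed by the same chunk ids.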

Upserting and querying embedding vectors in PineconeDB

We upserted the two sets of embeddings, one created from the original text chunks and the other from the redacted text chunks, into separate indices in PineconeDB. For each sampled TREC-news query, we retrieved the top 10 embeddings from both indices and recorded the position of the correct chunk in each.
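The sketch below illustrates what "recording the position of the correct chunk" means without requiring a Pinecone account. It replaces each Pinecone index with an in-memory dictionary and ranks chunks by brute-force cosine similarity. `InMemoryIndex` and `rank_of_correct_chunk` are hypothetical names. A real Pinecone index is queried through its own client and scores with whatever metric the index was created with.

```python
import math
from typing import Optional


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Hypothetical stand-in for one vector index ("original" or "redacted")."""

    def __init__(self) -> None:
        self._vectors: dict[str, list[float]] = {}

    def upsert(self, items: dict[str, list[float]]) -> None:
        # Insert new ids and overwrite existing ones, as an upsert does.
        self._vectors.update(items)

    def query(self, vector: list[float], top_k: int = 10) -> list[str]:
        # Brute force: score every stored vector, return the top_k ids, best first.
        ranked = sorted(self._vectors,
                        key=lambda cid: cosine(vector, self._vectors[cid]),
                        reverse=True)
        return ranked[:top_k]


def rank_of_correct_chunk(index: InMemoryIndex, query_vec: list[float],
                          correct_id: str, top_k: int = 10) -> Optional[int]:
    """1-based position of the correct chunk in the top_k results, or None if absent."""
    hits = index.query(query_vec, top_k)
    return hits.index(correct_id) + 1 if correct_id in hits else None
```

Running the same query vectors against an "original" index and a "redacted" index produces two lists of ranks, one per setting. Those lists are all the retrieval metrics need.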

Metrics for analysis

We used three standard metrics for evaluating how effective a ranking of results is: Normalized Discounted Cumulative Gain (NDCG), Mean Reciprocal Rank (MRR), and Recall @ k.

- Normalized Discounted Cumulative Gain (NDCG) compares the actual ranking with the ideal ranking (the relevant chunk at position #1). The score is discounted logarithmically the further down the list the relevant chunk appears.

- Mean Reciprocal Rank (MRR) takes the reciprocal of the relevant chunk's rank and averages it across all queries. If the relevant chunk is in the 5th spot, that query contributes ⅕. If the relevant chunk is not retrieved in the top k, the query contributes 0.

- Recall @ k is simple to calculate: if the relevant chunk is in the top k texts, the score is 1 (since there is only 1 relevant chunk); otherwise, the score is 0. This score is averaged across all 1000 queries.
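Because each query has exactly one relevant chunk, all three metrics reduce to functions of that chunk's rank. Assume binary relevance, meaning gain 1 for the relevant chunk and 0 for everything else. Let r_q be the 1-based rank of the relevant chunk for query q in the query set Q. The indicator then zeroes out any query whose relevant chunk falls below position k:

```latex
\mathrm{IDCG} = \frac{1}{\log_2(1 + 1)} = 1
\quad\Rightarrow\quad
\mathrm{NDCG@}k = \frac{1}{|Q|} \sum_{q \in Q} \frac{\mathbb{1}[r_q \le k]}{\log_2(r_q + 1)}

\mathrm{MRR@}k = \frac{1}{|Q|} \sum_{q \in Q} \frac{\mathbb{1}[r_q \le k]}{r_q}
\qquad
\mathrm{Recall@}k = \frac{1}{|Q|} \sum_{q \in Q} \mathbb{1}[r_q \le k]
```

For example, a relevant chunk at rank 3 scores 1/log₂4 = 0.5 on NDCG and 1/3 on reciprocal rank, and it counts fully toward Recall @ k for any k ≥ 3.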

All three metrics were computed with k = 10 (if the relevant chunk is not in the top 10 chunks retrieved, it contributes zero to the score).
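A minimal Python sketch of the three metrics, assuming each query's outcome has already been reduced to the 1-based rank of its relevant chunk (or `None` when the chunk was not retrieved). It illustrates the definitions above under that assumption. It is not the notebook's code, and the function names are hypothetical.

```python
import math
from typing import Optional, Sequence


def _hit(rank: Optional[int], k: int) -> bool:
    """True if the relevant chunk was retrieved within the top k."""
    return rank is not None and 1 <= rank <= k


def ndcg_at_k(ranks: Sequence[Optional[int]], k: int = 10) -> float:
    # One relevant chunk with gain 1, so the ideal DCG is 1 / log2(2) = 1.
    return sum(1.0 / math.log2(r + 1) for r in ranks if _hit(r, k)) / len(ranks)


def mrr_at_k(ranks: Sequence[Optional[int]], k: int = 10) -> float:
    # Reciprocal of the relevant chunk's rank; misses contribute 0.
    return sum(1.0 / r for r in ranks if _hit(r, k)) / len(ranks)


def recall_at_k(ranks: Sequence[Optional[int]], k: int = 10) -> float:
    # 1 if the relevant chunk is in the top k, else 0, averaged over queries.
    return sum(1 for r in ranks if _hit(r, k)) / len(ranks)
```

For ranks `[1, 5, None, 2]`, MRR @ k=10 is (1 + 0.2 + 0 + 0.5) / 4 = 0.425 and Recall @ k=10 is 0.75.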

Here is our metrics code:

Redaction settings

We tested two redaction settings: “aggressive,” where every supported entity type is redacted, and “normal,” where only standard sensitive data such as last names, emails, and addresses is redacted.

| Entity type | Aggressive | Normal |
|---|---|---|
| First Name | ✅ | |
| Last Name | ✅ | ✅ |
| Email | ✅ | ✅ |
| Address | ✅ | ✅ |
| City | ✅ | |
| State | ✅ | |
| Zip Code | ✅ | ✅ |
| Phone Number | ✅ | ✅ |
| Credit Card Number | ✅ | ✅ |
| Credit Card Exp. Date | ✅ | ✅ |
| Credit Card CVV | ✅ | ✅ |
| Occupation | ✅ | |
| Organization | ✅ | |

Results

Naive RAG vs Normal De-Identified RAG

| Metric | Original TREC-news | TREC-news De-Identified w/ Tonic Textual (Normal Settings) | Performance drop |
|---|---|---|---|
| NDCG @ k=10 | 0.866 | 0.853 | 0.013 |
| MRR @ k=10 | 0.463 | 0.452 | 0.011 |
| Recall @ k=10 | 0.987 | 0.975 | 0.012 |

Naive RAG vs Aggressive De-Identified RAG

| Metric | Original TREC-news | TREC-news De-Identified w/ Tonic Textual (Aggressive Settings) | Performance drop |
|---|---|---|---|
| NDCG @ k=10 | 0.863 | 0.805 | 0.058 |
| MRR @ k=10 | 0.460 | 0.413 | 0.047 |
| Recall @ k=10 | 0.981 | 0.888 | 0.093 |

Naive RAG vs Aggressive Re-Synthesized RAG

| Metric | Original TREC-news | TREC-news Re-Synthesized w/ Tonic Textual (Aggressive Settings) | Performance drop |
|---|---|---|---|
| NDCG @ k=10 | 0.860 | 0.794 | 0.066 |
| MRR @ k=10 | 0.457 | 0.403 | 0.054 |
| Recall @ k=10 | 0.980 | 0.847 | 0.133 |

With normal settings, de-identifying sensitive data lowers retrieval performance by only about one percentage point (0.011 to 0.013) on each of NDCG, MRR, and Recall @ k=10. Even under the aggressive setting, which redacts every supported entity type, the drops stay in the single digits of percentage points (0.047 to 0.093). The aggressive re-synthesis setting costs slightly more, with its largest drop (0.133) in Recall @ k=10.
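The "Performance drop" columns in the tables are absolute differences between scores, so a drop of 0.011 is roughly one percentage point. Relative drops, measured against each baseline, are somewhat larger. The short calculation below computes both using only the numbers from the three tables; it adds no new measurements.

```python
# (baseline, de-identified) scores copied from the three results tables.
RESULTS = {
    "normal redaction": {"NDCG": (0.866, 0.853), "MRR": (0.463, 0.452), "Recall": (0.987, 0.975)},
    "aggressive redaction": {"NDCG": (0.863, 0.805), "MRR": (0.460, 0.413), "Recall": (0.981, 0.888)},
    "aggressive re-synthesis": {"NDCG": (0.860, 0.794), "MRR": (0.457, 0.403), "Recall": (0.980, 0.847)},
}


def drops(baseline: float, treated: float) -> tuple[float, float]:
    """Return (absolute drop, relative drop as a percentage of the baseline)."""
    absolute = baseline - treated
    return absolute, 100.0 * absolute / baseline


for setting, metrics in RESULTS.items():
    for metric, (base, treated) in metrics.items():
        absolute, relative = drops(base, treated)
        print(f"{setting:<24}{metric + '@10':<10} -{absolute:.3f}  ({relative:.1f}% relative)")
```

For example, the normal setting's MRR drop of 0.011 is about 2.4% of its 0.463 baseline. The aggressive setting's MRR drop of 0.047 is about 10.2% of its 0.460 baseline.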

Private vs public embeddings

Redactions may have an even smaller impact on RAG systems for company data than they do in our benchmarks. Why? Large language models are heavily trained on public data from the internet, so redacting private entities in internal company documents may result in less distortion than redacting well-known public entities.

For instance, redacting “Albert Einstein was a scientist” to “John Doe was a scientist” changes the meaning and embedding more significantly than redacting “Mehul is a data science intern” to “John Doe is a data science intern.” The former involves a widely known public figure, likely recognized by the LLM, whereas the latter is more typical of internal data.

To test this, we compiled a random subset of public data from AllenAI’s C4 dataset (a cleaned version of Common Crawl), redacted it, and calculated embeddings for both the original and redacted data, measuring the average change between them. We then applied the same process to private data from proprietary audio transcripts.
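One common way to quantify the "average change" between original and redacted embeddings, assumed in this sketch, is the mean cosine distance (1 minus cosine similarity) between each text's embedding before and after redaction. The `embed` argument is a hypothetical placeholder for whatever embedding model is in use.

```python
import math
from typing import Callable, Sequence

Vector = list[float]


def cosine_distance(a: Vector, b: Vector) -> float:
    """1 - cosine similarity: 0 for identical directions, up to 2 for opposite ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cannot compare a zero vector")
    return 1.0 - dot / norm


def mean_embedding_shift(originals: Sequence[str], redacted: Sequence[str],
                         embed: Callable[[str], Vector]) -> float:
    """Average cosine distance between each text's embedding before and after redaction."""
    if len(originals) != len(redacted) or not originals:
        raise ValueError("need two non-empty, equally long, aligned lists")
    total = sum(cosine_distance(embed(o), embed(r)) for o, r in zip(originals, redacted))
    return total / len(originals)
```

Calling it once on the C4 sample and once on the private transcripts, with the same `embed` function, gives the two averages to compare.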

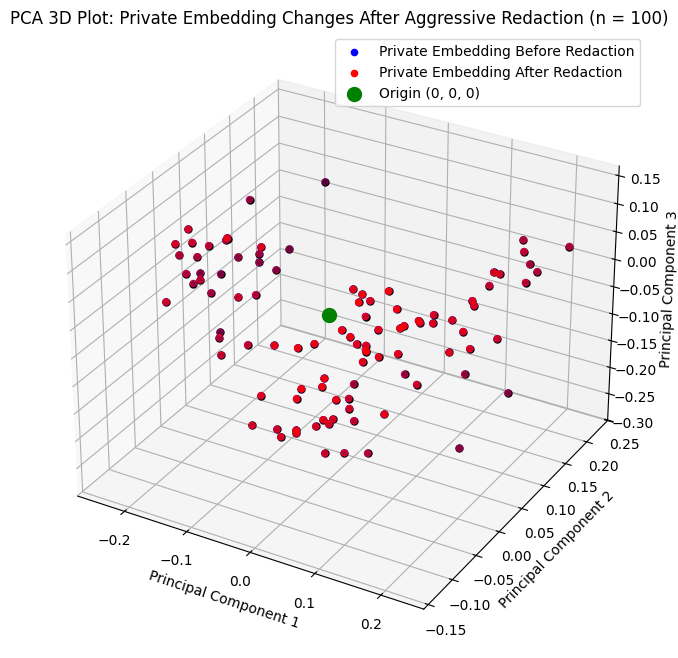

Visualizing embeddings “moving”

To showcase this, we used Principal Component Analysis (PCA) to reduce these high-dimensional embeddings to three dimensions for visualization. We concatenated the embeddings for both groups of public texts (before and after redaction), performed PCA, and randomly sampled 100 of the resulting embedding pairs to visualize their “movement.” We repeated the process for the private texts. The blue dots represent embeddings before redaction and the red dots represent embeddings after redaction.
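The key detail in this procedure is that PCA is fitted once on the concatenated before-and-after embeddings. Both versions of each text are therefore projected onto the same three axes, and their displacement is directly comparable. The following is a dependency-free sketch that assumes small inputs and finds the top principal components by power iteration with deflation. A library such as scikit-learn would normally be used instead, and `pca_project` is a hypothetical helper name.

```python
import math
import random

Matrix = list[list[float]]


def _power_iteration(cov: Matrix, iters: int, rng: random.Random) -> tuple[list[float], float]:
    """Approximate the dominant eigenvector and eigenvalue of a symmetric PSD matrix."""
    d = len(cov)
    v = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # no variance left in this subspace
            return v, 0.0
        v = [x / norm for x in w]
    # Rayleigh quotient v^T C v is the eigenvalue estimate for unit-length v.
    eigval = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d))
    return v, eigval


def pca_project(before: Matrix, after: Matrix, n_components: int = 3,
                iters: int = 200, seed: int = 0) -> tuple[Matrix, Matrix]:
    """Fit PCA on before + after together, then project both onto the same axes."""
    data = before + after  # concatenation gives one shared coordinate system
    n, d = len(data), len(data[0])
    mean = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - mean[j] for j in range(d)] for row in data]
    # Sample covariance matrix (d x d).
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(n_components):
        v, lam = _power_iteration(cov, iters, rng)
        components.append(v)
        # Deflation: subtract the found direction so the next pass finds the next one.
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    projected = [[sum(r[j] * c[j] for j in range(d)) for c in components]
                 for r in centered]
    return projected[:len(before)], projected[len(before):]
```

After projection, one can sample 100 indices i at random and draw a segment from `before_3d[i]` to `after_3d[i]` to show how far each embedding moved.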

These visualizations show that the public embeddings moved more than the private embeddings. This supports our hypothesis that redacting private data will affect your RAG application’s performance even less.

What are the implications here? Under the hood, the retrieval step of RAG finds the chunks most similar to the prompt by locating the chunk embeddings closest to the prompt embedding. The less redaction shifts the embeddings, the less it perturbs which chunks are retrieved, and the better the RAG system can keep delivering high-quality answers.

Conclusion

Our findings show that de-identifying data in Retrieval-Augmented Generation (RAG) workflows has a minimal impact on retrieval performance. Across our benchmarks, standard redaction settings lowered each metric by only about one percentage point, and even aggressive redaction kept every drop below ten percentage points. Our embedding-shift analysis further suggests that the impact should be even smaller for internal, proprietary data, whose embeddings moved less under redaction than those of public data.

In practice, these results indicate that companies can safeguard sensitive information in RAG systems through straightforward de-identification strategies without meaningfully compromising retrieval performance. As language models continue to play a vital role in handling private data, de-identification offers a robust way to protect user privacy while maintaining reliable retrieval accuracy.

To begin securely de-identifying your unstructured data for AI workflows, get a free trial of Tonic Textual today, or connect with our team to learn more.
