2024-11-25 02:47:8 Author: hackernoon.com

Authors:

(1) Xian Liu, Snap Inc. and CUHK (work done during an internship at Snap Inc.);

(2) Jian Ren, Snap Inc. (corresponding author: [email protected]);

(3) Aliaksandr Siarohin, Snap Inc.;

(4) Ivan Skorokhodov, Snap Inc.;

(5) Yanyu Li, Snap Inc.;

(6) Dahua Lin, CUHK;

(7) Xihui Liu, HKU;

(8) Ziwei Liu, NTU;

(9) Sergey Tulyakov, Snap Inc.

Table of Links

3 Our Approach and 3.1 Preliminaries and Problem Setting

3.2 Latent Structural Diffusion Model

A Appendix and A.1 Additional Quantitative Results

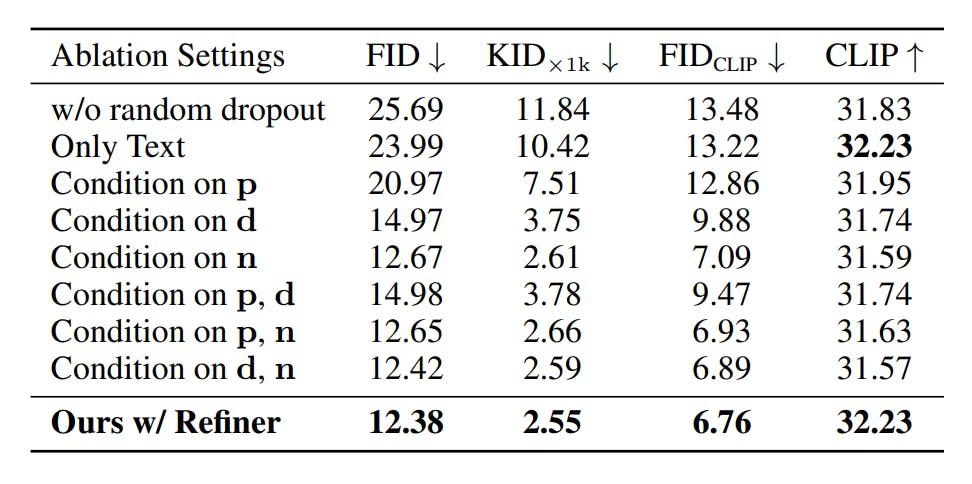

A.2 More Implementation Details and A.3 More Ablation Study Results

A.5 Impact of Random Seed and Model Robustness and A.6 Broader Impact and Ethical Consideration

A.7 More Comparison Results and A.8 Additional Qualitative Results

A.4 MORE USER STUDY DETAILS

The study involves 25 participants, who annotate a total of 8236 images in the zero-shot MSCOCO 2014 validation human subset. They take 2-3 days to complete all the user study tasks, followed by a final review to check the validity of the human preferences. Specifically, we conduct side-by-side comparisons between our generated results and each baseline model’s results. The question asked is “Considering both the image aesthetics and text-image alignment, which image is better? Prompt: .” The labelers do not know which image corresponds to which model: the positions of the two compared images are shuffled to ensure a fair, unbiased comparison.
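The position shuffling described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' tooling: the function name `make_comparison`, the dictionary fields, and the image paths are all hypothetical, since the paper does not describe its annotation interface.

```python
import random


def make_comparison(prompt, ours_image, baseline_image, rng):
    """Build one side-by-side trial with randomized left/right placement.

    All names here are hypothetical; the source does not describe its tooling.
    """
    pair = [("ours", ours_image), ("baseline", baseline_image)]
    # Shuffle so the labeler cannot infer the model from the position.
    rng.shuffle(pair)
    (left_model, left_image), (right_model, right_image) = pair
    return {
        "question": (
            "Considering both the image aesthetics and text-image "
            "alignment, which image is better?"
        ),
        "prompt": prompt,
        "left_image": left_image,
        "right_image": right_image,
        # Answer key mapping positions back to models; hidden from labelers.
        "key": {"left": left_model, "right": right_model},
    }
```

Passing a seeded `random.Random` instance as `rng` keeps the placement reproducible, which helps when the final review needs to map each vote back to the model that produced the image.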

All labelers are well trained for such text-to-image comparison tasks: each has passed an examination on a test set and has performed this kind of comparison more than 50 times. Below, we give the user study rating details for our method vs. the baseline models. For each pair, a labeler can choose one of four options: a) The left image is better, in which case the corresponding model gets +1 grade. b) The right image is better. c) NSFW, meaning the prompt or image contains NSFW content, in which case both models get 0 grade. d) Hard Case, where the labeler finds it hard to tell which image has better quality, in which case both models get +0.5 grade. The detailed comparison statistics are shown in Table 8, where we report the grades of HyperHuman vs. the baseline methods. The results show that our proposed framework is superior to all the compared existing models, with better image quality, realism, aesthetics, and text-image alignment.
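The grading rule can be made concrete with a short sketch. It is an illustration only: the function `tally_grades` and the choice labels `"left"`, `"right"`, `"nsfw"`, and `"hard"` are hypothetical names for options a) to d), and awarding +1 to the right-hand model under option b) is an assumption made by symmetry with option a), since the text states the grade only for a).

```python
from collections import defaultdict


def tally_grades(votes):
    """Sum grades over (left_model, right_model, choice) votes.

    `choice` is one of "left", "right", "nsfw", "hard": hypothetical labels
    for options a) to d) of the user study.
    """
    grades = defaultdict(float)
    for left_model, right_model, choice in votes:
        # Register both models so a model with no wins still appears.
        grades[left_model] += 0.0
        grades[right_model] += 0.0
        if choice == "left":
            grades[left_model] += 1.0   # option a): preferred model gets +1
        elif choice == "right":
            grades[right_model] += 1.0  # option b): assumed symmetric with a)
        elif choice == "nsfw":
            pass                        # option c): both models get 0
        elif choice == "hard":
            grades[left_model] += 0.5   # option d): both models get +0.5
            grades[right_model] += 0.5
        else:
            raise ValueError(f"unknown choice: {choice!r}")
    return dict(grades)
```

Because left/right placement is shuffled per pair, each vote carries the model names for both positions, so the tally does not depend on which side a model happened to be shown on.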
