“As a rule, software systems do not work well until they have been used, and have failed repeatedly, in real applications.” Dr. Dave Parnas, Carnegie Mellon Prof. of Electrical Engineering

Historically, developers have used a number of approaches to create test data when they didn’t have or were unable to use production data in their lower environments.

Below, we’ll discuss five of the most common methods used to date to generate data and how they stack up for use in testing and development.

Dummy Data

“Dummy data,” as the name suggests, acts as a placeholder for real data during development and testing. Using dummy data ensures that all of the data fields in a database are occupied, so that a program doesn’t return an error when querying the database.

Dummy data is usually nothing more than data or records generated on the fly by developers in a hurry. However, dummy data can also refer to more carefully designed tests, e.g. in order to produce a given response or test a particular software feature.

Dummy data is not ideal for large-scale testing: unless it has been thoroughly evaluated and documented, it can introduce unintended side effects and bugs.
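As a rough illustration of quick, hand-written dummy data, the sketch below fills every required column of a small table so that a query returns rows instead of failing. The `users` table, its columns, and the placeholder values are all hypothetical, and an in-memory SQLite database stands in for a real lower environment.

```python
import sqlite3

# In-memory database standing in for a development environment (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users ("
    "id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT NOT NULL)"
)

# Hand-written placeholder rows: every field is occupied, but the values
# carry no real-world meaning.
dummy_rows = [
    (1, "Test User", "test@example.com"),
    (2, "Foo Bar", "foo@example.com"),
]
conn.executemany("INSERT INTO users VALUES (?, ?, ?)", dummy_rows)

# The application query now has something to return.
rows = conn.execute("SELECT name, email FROM users ORDER BY id").fetchall()
print(rows)
```

Rows like these are enough to exercise a code path, but they say nothing about how the program behaves with realistic values or volumes.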

Mock Data

Mock data is similar to dummy data, but it is generated more consistently, usually by an automated system, and on a larger scale. The purpose of mock data is to simulate real-world data in a controlled manner, often including unusual or edge-case values. For example, mock data can be generated for database fields corresponding to a person’s first and last names, testing what happens for inputs such as:

- Very long or very short strings

- Strings with accented or special characters

- Blank strings

- Strings with numerical values

- Strings with reserved words (e.g. “NULL”)

Mock data can be useful throughout the software development process, from coding to testing and quality assurance. In most cases, mock data is generated in-house using various rules, scripts, and/or open-source data generation libraries.
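The name-field inputs listed above could be produced by a small in-house script along these lines. This is a minimal sketch: the function name `edge_case_names`, the 255-character limit, and the sample values are assumptions chosen for illustration, not part of any particular library.

```python
import random
import string


def edge_case_names(max_len=255, seed=0):
    """Return one sample value per edge case for a name field.

    max_len is an assumed column limit; the long string exceeds it by one.
    """
    rng = random.Random(seed)  # fixed seed keeps test runs repeatable
    too_long = "".join(rng.choice(string.ascii_letters) for _ in range(max_len + 1))
    return {
        "very_long": too_long,
        "very_short": "A",
        "accented_or_special": "Zoë Núñez-O'Brien",
        "blank": "",
        "numeric": "12345",
        "reserved_word": "NULL",
    }


cases = edge_case_names()
for label, value in cases.items():
    print(f"{label}: {len(value)} characters")
```

Each value can then be fed to the form, API, or insert statement under test to see which ones are rejected, truncated, or misinterpreted.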

The more complex the software and production data, the harder it is to mock data that retains referential integrity across tables.

Anonymized (De-identified) Data

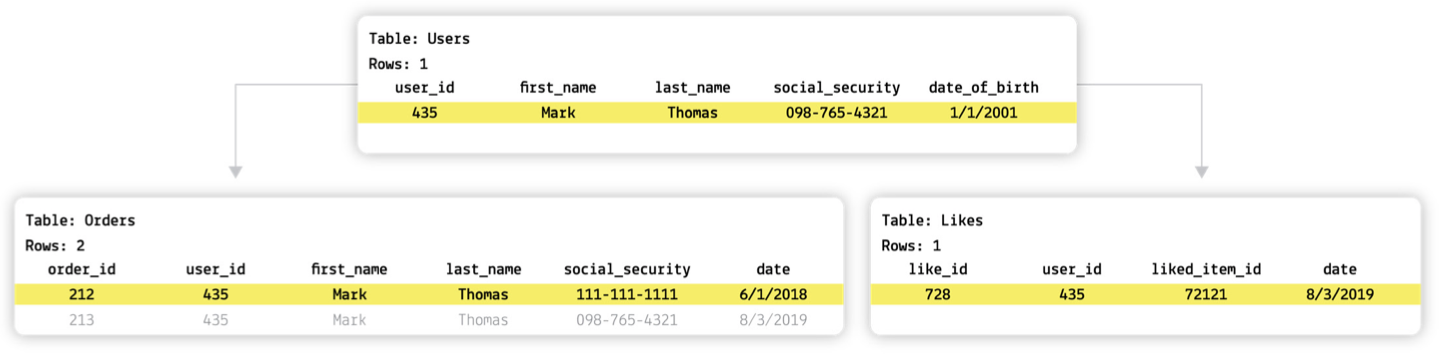

Real data that has been altered or masked is called “anonymized” or “de-identified.” The purpose is to retain the character of the real dataset while allowing you to use it without exposing personally identifiable information (PII) or protected health information (PHI).

More specifically, anonymization involves replacing real data with altered content via one-to-one data transformation. This new content often preserves the utility of the original data (e.g. real names are replaced with fictitious names), but the transformation can also simply scramble the original data into random characters or null it out.
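A simple version of this kind of transformation might look like the sketch below, which replaces a real name with a fictitious one and nulls out a sensitive field. The `FAKE_NAMES` pool, the `SECRET_KEY`, and the function names are hypothetical. The mapping is consistent (the same input always yields the same output), but with a pool this small two real names can land on the same fake name, so a strictly one-to-one mapping would need a pool at least as large as the set of inputs.

```python
import hashlib
import hmac

# Hypothetical replacement pool and key. A real tool would use a much larger
# pool and keep the key out of source control.
FAKE_NAMES = ["Alex Rivera", "Sam Chen", "Jordan Patel", "Casey Morgan", "Riley Kim"]
SECRET_KEY = b"replace-me"


def mask_name(real_name: str) -> str:
    """Map a real name to a fictitious one, consistently for the same input."""
    digest = hmac.new(SECRET_KEY, real_name.encode("utf-8"), hashlib.sha256).digest()
    index = int.from_bytes(digest[:8], "big") % len(FAKE_NAMES)
    return FAKE_NAMES[index]


def null_out(_value: str) -> None:
    """The blunter option: discard the original value entirely."""
    return None


record = {"name": "Grace Hopper", "ssn": "123-45-6789"}
masked = {"name": mask_name(record["name"]), "ssn": null_out(record["ssn"])}
print(masked)
```

Keyed hashing is only one way to get consistent replacements, and on its own it carries no formal privacy guarantee.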

Data anonymization can be performed in-house, but doing so places an immense responsibility on the development team to ensure that it’s done safely. Increasingly, companies are turning to third-party vendors to provide proven software solutions that offer privacy guarantees.

Subsetted Data

Subsetting is the act of creating a smaller, referentially-intact version of a dataset by:

- pulling a percentage of that dataset, or

- limiting it through a “select where” clause to target individual records as needed.

For example: give me 5% of all transactions or pull all data associated with customers who live in California.
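The California example can be sketched with a “select where” clause followed by a second query that follows the foreign key, so that every transaction in the subset still points at a customer in the subset. The schema, table names, and rows below are hypothetical, and an in-memory SQLite database stands in for production.

```python
import sqlite3

# Tiny stand-in for a production database (assumed schema and rows).
src = sqlite3.connect(":memory:")
src.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, state TEXT);
    CREATE TABLE transactions (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        amount REAL
    );
    INSERT INTO customers VALUES (1, 'Ana', 'CA'), (2, 'Ben', 'NY'), (3, 'Cy', 'CA');
    INSERT INTO transactions VALUES
        (10, 1, 25.0), (11, 2, 40.0), (12, 3, 15.5), (13, 2, 9.99);
""")

# Step 1: target the records of interest with a WHERE clause.
subset_customers = src.execute(
    "SELECT id, name, state FROM customers WHERE state = ? ORDER BY id", ("CA",)
).fetchall()

# Step 2: pull only the child rows that belong to those customers, so no
# transaction in the subset references a customer that was left behind.
subset_transactions = src.execute(
    """SELECT id, customer_id, amount FROM transactions
       WHERE customer_id IN (SELECT id FROM customers WHERE state = ?)
       ORDER BY id""",
    ("CA",),
).fetchall()

print(subset_customers)
print(subset_transactions)
```

With two tables this is straightforward. The difficulty grows with every additional table and foreign key that has to be followed to keep the subset coherent.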

Subsetting lets you create a dataset sized specifically to your needs, environments, and simulations, free of unnecessary data and often constructed to target specific bugs or use cases. Data subsetting also reduces the database’s footprint, improving speed during development and testing.

Data subsetting must be handled with care in order to form a consistent, coherent subset that is representative of the larger dataset without becoming unwieldy. Given the growing complexity of today’s ecosystems, this is fast becoming a formidable challenge. At the same time, demand for subsetting is increasing with the growing shift toward microservices and the need for developers to have data that fits on their laptops to enable work in their local environments.

On its own, subsetting does not protect the data contained in the subset; it simply minimizes the amount of data at risk of exposure.

Data Bursts

The automated production of generated data in large batches is called a data burst. Data bursts are most useful when you care less about the data itself and more about the volume and velocity of data as it passes through the software. They are particularly useful for simulating traffic spikes or user onboarding campaigns.

For example, data bursts can be used in the following testing and QA scenarios:

- Performance testing: Testing various aspects of a system’s performance, e.g. its speed, reliability, scalability, response time, stability, and resource usage (including CPU, memory, and storage).

- Load testing: Stress testing a system by seeing how its performance changes under increasingly heavy levels of load (e.g. increasing the number of simultaneous users or requests).

- High availability testing: Databases should always be tested to handle bursts in incoming requests during high-traffic scenarios, but testing for high-data scenarios is also important. Preparing for data burst scenarios helps ensure that queries continue to run optimally with zero impact on the application performance and user experience.
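A data burst for scenarios like these can be sketched as a generator of throwaway records that is fed to the system in progressively larger batches while the handling time is recorded. Everything here is an assumption for illustration: the event fields, the batch sizes, and the `handler` callable, which stands in for whatever system is under test.

```python
import random
import time


def burst(batch_size, seed=0):
    """Return one batch of synthetic events; the count matters more than the content."""
    rng = random.Random(seed)
    return [
        {"event_id": i, "user_id": rng.randrange(1_000_000), "ts": time.time()}
        for i in range(batch_size)
    ]


def run_bursts(handler, batch_sizes):
    """Send progressively larger batches and record how long each one takes."""
    timings = []
    for size in batch_sizes:
        batch = burst(size)
        start = time.perf_counter()
        handler(batch)
        timings.append((size, time.perf_counter() - start))
    return timings


# Stand-in for the system under test: it only counts the events it receives.
processed = []
results = run_bursts(lambda batch: processed.append(len(batch)), [100, 1_000, 10_000])
for size, seconds in results:
    print(f"{size} events handled in {seconds:.6f}s")
```

In a real test, the handler would write to the database or call the service being measured, and the timings would be compared across batch sizes to see where performance starts to degrade.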

This blog is an excerpt from our Tonic 101 eBook. If you’re interested in reading more, download your copy today!

*** This is a Security Bloggers Network syndicated blog from Expert Insights on Synthetic Data from the Tonic.ai Blog authored by Expert Insights on Synthetic Data from the Tonic.ai Blog. Read the original post at: https://www.tonic.ai/blog/5-traditional-approaches-to-generating-test-data