2024-11-28 06:11:26 Source: hackernoon.com

Table of Links

2 Background & Problem Statement

2.1 How can we use MLLMs for Diffusion Synthesis that Synergizes both sides?

3.1 End-to-End Interleaved generative Pretraining (I-GPT)

4 Experiments and 4.1 Multimodal Comprehension

4.2 Text-Conditional Image Synthesis

4.3 Multimodal Joint Creation & Comprehension

5 Discussions

5.1 Synergy between creation & Comprehension?

5.2 What is learned by DreamLLM?

B Additional Qualitative Examples

E Limitations, Failure Cases & Future Works

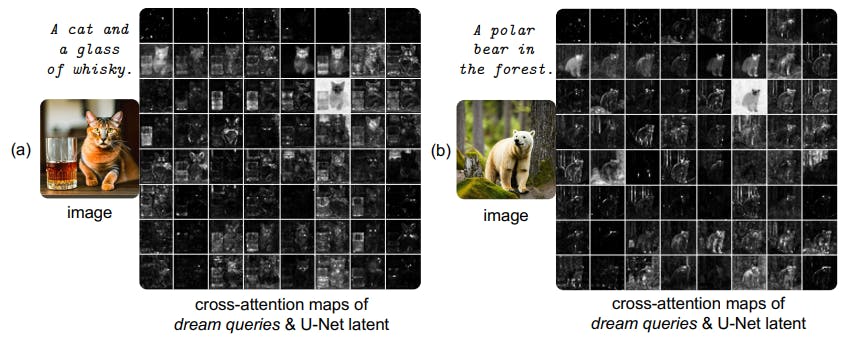

5.2 WHAT IS LEARNED BY DREAMLLM?

Dream Query Attention. In DREAMLLM, the conditional embedding is derived from MLLMs with a set of learned dream queries. Fig. 6 visualizes the learned cross-attention between these queries and the diffusion latent. Similar to Hertz et al. (2023), we visualize the attention map averaged across all timesteps. We observe that: i) The query attention is structured, disentangled, and semantically oriented, as evidenced by distinct queries capturing different subject and background semantics. ii) Despite varying prompts, the attention patterns are remarkably similar, as shown in Fig. 6 (a) and (b). This contrasts with the token attention of the original SD, which is typically text-token dependent. We postulate that this arises from the model's causal nature, which leads to a consistent semantic structure order.
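The averaging step behind such a visualization can be sketched in a few lines. This is a minimal illustration, not the authors' code: the tensor layout `(timesteps, heads, spatial positions, queries)`, the function names `average_query_attention` and `map_similarity`, the min-max normalization for display, and the use of cosine similarity to compare maps across prompts are all assumptions made for this example.

```python
import numpy as np


def average_query_attention(attn, latent_hw):
    """Average cross-attention over timesteps and heads, one map per query.

    attn: array of shape (T, H, P, Q), an ASSUMED layout where T is the
          number of denoising timesteps, H the number of heads, P the number
          of spatial positions in the latent, and Q the number of queries.
    latent_hw: (h, w) with h * w == P.
    Returns an array of shape (Q, h, w), min-max normalized per query.
    """
    attn = np.asarray(attn, dtype=np.float64)
    if attn.ndim != 4:
        raise ValueError("expected attn with shape (T, H, P, Q)")
    _, _, num_pos, num_queries = attn.shape
    h, w = latent_hw
    if h * w != num_pos:
        raise ValueError("latent_hw does not match the number of positions")

    # Mean over timesteps (axis 0) and heads (axis 1) gives shape (P, Q).
    mean_map = attn.mean(axis=(0, 1))
    # One spatial map per query: (Q, P) reshaped to (Q, h, w).
    maps = mean_map.T.reshape(num_queries, h, w)

    # Min-max normalize each map so it can be rendered as a heat map.
    lo = maps.min(axis=(1, 2), keepdims=True)
    hi = maps.max(axis=(1, 2), keepdims=True)
    return (maps - lo) / np.maximum(hi - lo, 1e-12)


def map_similarity(map_a, map_b):
    """Cosine similarity between two attention maps of the same shape."""
    a = np.asarray(map_a, dtype=np.float64).ravel()
    b = np.asarray(map_b, dtype=np.float64).ravel()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


if __name__ == "__main__":
    # Synthetic stand-in for recorded attention: 10 timesteps, 8 heads,
    # a 16x16 latent, and 64 queries, softmax-normalized over the queries.
    rng = np.random.default_rng(0)
    logits = rng.normal(size=(10, 8, 16 * 16, 64))
    weights = np.exp(logits - logits.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    maps = average_query_attention(weights, (16, 16))
    print(maps.shape)
```

Under these assumptions, observation ii) would correspond to `map_similarity` returning a high value when the same query's map is compared across two different prompts.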

Authors:

(1) Runpei Dong, Xi’an Jiaotong University and Internship at MEGVII;

(2) Chunrui Han, MEGVII Technology;

(3) Yuang Peng, Tsinghua University and Internship at MEGVII;

(4) Zekun Qi, Xi’an Jiaotong University and Internship at MEGVII;

(5) Zheng Ge, MEGVII Technology;

(6) Jinrong Yang, HUST and Internship at MEGVII;

(7) Liang Zhao, MEGVII Technology;

(8) Jianjian Sun, MEGVII Technology;

(9) Hongyu Zhou, MEGVII Technology;

(10) Haoran Wei, MEGVII Technology;

(11) Xiangwen Kong, MEGVII Technology;

(12) Xiangyu Zhang, MEGVII Technology and a Project leader;

(13) Kaisheng Ma, Tsinghua University and a Corresponding author;

(14) Li Yi, Tsinghua University, a Corresponding author and Project leader.
