In manufacturing, catching defects early in the assembly line ensures those defects don’t turn into failures in the consumer’s hands. Similarly, in the software industry, integrating security checks early in the delivery pipeline minimizes the cost of fixing security issues and ensures they do not reach production.

In the context of cloud environments, companies usually describe their infrastructure as code using tools like Terraform or CloudFormation. In this post, we review the landscape of tools that allow us to perform static analysis of Terraform code in order to identify cloud security issues and misconfigurations even before they pose an actual security risk.

Introduction

In the cloud, misconfigurations will get you hacked well before zero-days do. According to a report released in 2020, the NSA asserts that misconfiguration of cloud resources is the most prevalent vulnerability in cloud environments. Looking at a few recent data breaches in AWS…

- The Capital One breach was caused by a firewall left inadvertently open to the Internet, along with an overprivileged EC2 instance role

- The Los Angeles Times website started mining cryptocurrency in your browser due to a world-writable S3 bucket

- The Magecart group backdoored Twilio’s SDK which was hosted on a world-writable S3 bucket

These are all high-profile hacks caused by misconfigurations that are easy to catch by looking at the associated (fictional) Terraform code:

resource "aws_security_group" "firewall_sg" {
  name = "firewall_sg"

  ingress {
    description = "SSH"
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_s3_bucket" "static" {
  bucket = "static.latimes.com"
  acl    = "public-read-write"
}

resource "aws_s3_bucket" "sdk" {
  bucket = "media.twiliocdn.com"
  policy = <<POLICY
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowPublicRead",
      "Effect": "Allow",
      "Principal": {
        "AWS": "*"
      },
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:::media.twiliocdn.com/*"
    }
  ]
}
POLICY
}

Evaluation of Terraform Static Analysis Tools

Evaluation criteria

Before we begin to evaluate the landscape of tools, let’s define what criteria and features are important to us.

Maturity and community adoption

Does the project have an active GitHub repository? How many maintainers does it have? Is it backed by a company? How confident are we that it won’t be abandoned in two weeks?

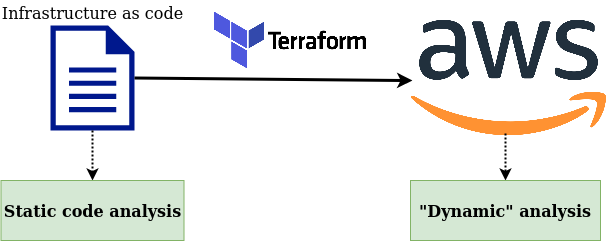

Type of scan

Static analysis tools for Terraform usually fall into one of two categories. They either scan HCL code directly, or scan the Terraform plan file.

Scanning the HCL code has the advantage of making the scan faster, stateless, and not requiring any communication with a backend API. Scanning the Terraform plan makes sure the scan runs after any interpolation, function call, or variable processing in the HCL code. On the other hand, it requires that we generate the plan before scanning, often assuming that an authenticated communication with the appropriate backend is available (e.g. the AWS API).

Typically, tools scanning the HCL code take no more than a few seconds to run and can be used without network connectivity. However, they have a good chance of missing security issues introduced by dynamically evaluated expressions. Take, for example:

variable "bucket_acl" {
  default = "private"
}

resource "aws_s3_bucket" "bucket" {
  bucket = "bucket.mycorp.tld"
  acl    = var.bucket_acl
}

It is not possible to determine whether this code contains a security flaw just by looking at it. You need additional runtime context, which the Terraform plan has access to.

$ terraform plan -var bucket_acl=public-read-write -out=plan.tfplan
$ terraform show -json plan.tfplan > plan.json
$ cat plan.json | jq '.planned_values.root_module["resources"][0].values'
{
  "acl": "public-read-write",
  "bucket": "bucket.mycorp.tld",
  ...
}

Although this may seem like a trivial example, a lot of Terraform code makes extensive use of input variables, dynamic data sources, and complex HCL expressions, which can easily introduce security issues that are hard to catch just by looking at the source code.
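To make the idea of scanning a plan more concrete, here is a minimal sketch in Python that walks the `planned_values` section of a `plan.json` file and flags S3 buckets with a public ACL. The function names and the `sample_plan` data are illustrative assumptions, not part of any of the tools discussed in this post.

```python
PUBLIC_ACLS = {"public-read", "public-read-write"}


def iter_resources(module):
    """Yield every resource of a planned_values module, including child modules."""
    yield from module.get("resources", [])
    for child in module.get("child_modules", []):
        yield from iter_resources(child)


def find_public_buckets(plan):
    """Return the addresses of aws_s3_bucket resources whose planned ACL is public."""
    root = plan.get("planned_values", {}).get("root_module", {})
    return [
        resource["address"]
        for resource in iter_resources(root)
        if resource.get("type") == "aws_s3_bucket"
        and resource.get("values", {}).get("acl") in PUBLIC_ACLS
    ]


# Hypothetical, trimmed-down plan mirroring the jq output above
sample_plan = {
    "planned_values": {
        "root_module": {
            "resources": [
                {
                    "address": "aws_s3_bucket.bucket",
                    "type": "aws_s3_bucket",
                    "values": {"acl": "public-read-write", "bucket": "bucket.mycorp.tld"},
                }
            ]
        }
    }
}

print(find_public_buckets(sample_plan))  # ['aws_s3_bucket.bucket']
```

Because the check runs on the plan, it sees the value of `var.bucket_acl` after variable processing, which a scan of the HCL source alone would not.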

These are very much the same considerations as in the comparison between SAST and DAST.

Support for custom checks

We want to be able to write custom checks that make sense given our needs and our environment. Most of the time, throwing a set of hundreds of default rules at our code is a great recipe for failure.

Complexity: How easy is it to write and test custom checks? The easier it is, the higher the quality of our checks and the more maintainable they will be.

Expressiveness: How expressive is the language in which custom checks are written? Does it allow us to write complex checks requiring multi-resource visibility? For example, can we express the following common rules?

- “S3 buckets must have an associated S3 Public Access Block resource”

- “VPCs must have VPC flow logs enabled, i.e. an associated aws_flow_log resource”

- “EC2 instances in a subnet attached to an Internet Gateway must not have a public IP”
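As an illustration of what multi-resource visibility means in practice, here is a rough Python sketch of the first rule. It assumes the JSON plan exposes each resource's references under `configuration.root_module.resources[].expressions`, and it only looks at the root module. The function name and sample data are hypothetical.

```python
def buckets_without_public_access_block(plan):
    """Return addresses of S3 buckets that no public access block refers to."""
    resources = (
        plan.get("configuration", {}).get("root_module", {}).get("resources", [])
    )
    buckets = {r["address"] for r in resources if r["type"] == "aws_s3_bucket"}

    protected = set()
    for resource in resources:
        if resource["type"] != "aws_s3_bucket_public_access_block":
            continue
        bucket_expr = resource.get("expressions", {}).get("bucket", {})
        for reference in bucket_expr.get("references", []):
            # A reference looks like "aws_s3_bucket.static.id"; keep "type.name"
            protected.add(".".join(reference.split(".")[:2]))

    return sorted(buckets - protected)


# Hypothetical configuration: two buckets, only one has a public access block
sample_plan = {
    "configuration": {
        "root_module": {
            "resources": [
                {"address": "aws_s3_bucket.static", "type": "aws_s3_bucket"},
                {"address": "aws_s3_bucket.logs", "type": "aws_s3_bucket"},
                {
                    "address": "aws_s3_bucket_public_access_block.static",
                    "type": "aws_s3_bucket_public_access_block",
                    "expressions": {
                        "bucket": {"references": ["aws_s3_bucket.static.id"]}
                    },
                },
            ]
        }
    }
}

print(buckets_without_public_access_block(sample_plan))  # ['aws_s3_bucket.logs']
```

The point is that the rule cannot be decided by looking at one resource at a time: the check needs to see both resource types and how they reference each other.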

Usability and engineer experience

Our Terraform static analysis solution will be used by engineers. How easy and enjoyable is it for them to use and tune?

Support for suppressing checks: Static analysis often comes at the expense of false positives or benign true positives that shouldn’t pollute the scan output. For instance, if you scan your Terraform code for exposed S3 buckets, you will catch the publicly readable S3 bucket your web design team uses to host your company website’s static assets. Some tools let us add annotations to Terraform resources, directly in the HCL code, to indicate that specific checks should be skipped for individual resources. This is great in that it empowers engineers to act on benign true positives without requiring us to change the checks.

Readability of scan results: How readable is the output of a scan? Does it show the resources that are failing security checks? Is it actionable? Does it support several output formats (human-readable, JSON…)?

Comparison

In this post, we evaluate the candidates that seem to be the most widely used and best known. The following table summarizes how these tools meet the criteria discussed above. Note that I purposely did not include non-Terraform specific tools such as InSpec or Open Policy Agent. Although these tools can technically run against any JSON file (including a Terraform plan), they were not specifically built with infrastructure as code in mind and require more effort to use. The same goes for Semgrep, although it can admittedly be used to scan HCL code.

In addition to this table, here’s an example of a very simple custom check to identify unencrypted S3 buckets implemented with each tool’s way of defining custom checks.

# Run using:
# checkov -d tfdir --external-checks-dir /path/to/custom/checks -c CUSTOM_S3_ENCRYPTED
# /path/to/custom/checks/__init__.py must contain the code described in https://www.checkov.io/2.Concepts/Custom%20Policies.html
from checkov.common.models.enums import CheckResult, CheckCategories
from checkov.terraform.checks.resource.base_resource_check import BaseResourceCheck


class UnencryptedS3Bucket(BaseResourceCheck):
    def __init__(self):
        name = "Ensure S3 bucket is encrypted at rest"
        id = "CUSTOM_S3_ENCRYPTED"
        supported_resources = ['aws_s3_bucket']
        categories = [CheckCategories.ENCRYPTION]
        super().__init__(name=name, id=id, categories=categories, supported_resources=supported_resources)

    def scan_resource_conf(self, conf):
        # Fail buckets that do not declare any server-side encryption block
        if 'server_side_encryption_configuration' not in conf.keys():
            return CheckResult.FAILED
        return CheckResult.PASSED


scanner = UnencryptedS3Bucket()

# Run using:
#
# terraform plan -out=plan.tfplan && terraform show -json plan.tfplan > plan.json
# opa eval -d /path/to/regula/lib/ -d ./path/to/regula-rules --input plan.json 'data.fugue.regula.report'
#
# Where /path/to/regula is a clone of https://github.com/fugue/regula and /path/to/regula-rules is a directory with your rego rules
# Can also be run using conftest
package rules.s3_bucket_encryption

resource_type = "aws_s3_bucket"

deny[msg] {
  count(input.server_side_encryption_configuration) == 0
  msg = "Missing S3 bucket encryption"
}

# Run using:
#
# terraform plan -out=plan.tfplan && terraform show -json plan.tfplan > plan.json
# terraform-compliance -f /path/to/rules -p plan.json
Given I have AWS S3 Bucket defined
Then it must contain server_side_encryption_configuration

# Run using:
#
# terrascan scan --policy-path /path/to/custom/checks
#
# See example at https://github.com/accurics/terrascan/tree/master/pkg/policies/opa/rego/aws/aws_s3_bucket for file structure
# In particular, each check needs its associated JSON metadata file
package terrascan

# Stolen from https://github.com/accurics/terrascan/blob/master/pkg/policies/opa/rego/aws/aws_s3_bucket/noS3BucketSseRules.rego
noS3BucketSseRules[retVal] {
  bucket := input.aws_s3_bucket[_]
  bucket.config.server_side_encryption_configuration == []

  # Base-64 encoded value of the expected value for server_side_encryption_configuration block
  rc = "ewogICJzZXJ2ZXJfc2lkZV9lbmNyeXB0aW9uX2NvbmZpZ3VyYXRpb24iOiB7CiAgICAicnVsZSI6IHsKICAgICAgImFwcGx5X3NlcnZlcl9zaWRlX2VuY3J5cHRpb25fYnlfZGVmYXVsdCI6IHsKICAgICAgICAic3NlX2FsZ29yaXRobSI6ICJBRVMyNTYiCiAgICAgIH0KICAgIH0KICB9Cn0="
  traverse = ""
  retVal := { "Id": bucket.id, "ReplaceType": "add", "CodeType": "block", "Traverse": traverse, "Attribute": "server_side_encryption_configuration", "AttributeDataType": "base64", "Expected": rc, "Actual": null }
}

# Run using:
#
# tfsec --custom-check-dir /path/to/rules
---
checks:
- code: CUSTOM001
  description: Custom check to ensure S3 buckets are encrypted
  requiredTypes:
  - resource
  requiredLabels:
  - aws_s3_bucket
  severity: ERROR
  matchSpec:
    name: server_side_encryption_configuration
    action: isPresent
  errorMessage: The S3 bucket must be encrypted

As often when comparing different technologies, we do not have a clear winner. You should go for the tool that makes most sense for you given your available skills and requirements.

If you need something running as quickly as possible, I recommend checkov or tfsec. Checkov also has the very nice property of supporting scans of both HCL code and Terraform plan files, while tfsec is blazing fast — on my laptop, it takes 30 milliseconds to scan the whole Terragoat project. If the ability to correlate multiple resources together is a must, go for terrascan or regula! (Or upvote #155 for checkov adding support.) terraform-compliance has a very nice to read behavior-driven development language that could also be a determining factor for you.

Note that some of these tools have an (optional) complementary commercial offering such as Bridgecrew Cloud for Checkov, Accurics for Terrascan, and Fugue for Regula. However, these offerings don’t always relate directly to Terraform static analysis, and some focus on scanning resources that are already deployed in a cloud provider for security issues (what Gartner calls CSPM, short for Cloud Security Posture Management). Bridgecrew Cloud does add some features directly related to Terraform scanning, though, such as additional integrations and automation capabilities.

Finally, they are all easy to install and integrate in a CI pipeline, which takes us to the next section!

Designing a Terraform Static Analysis Workflow

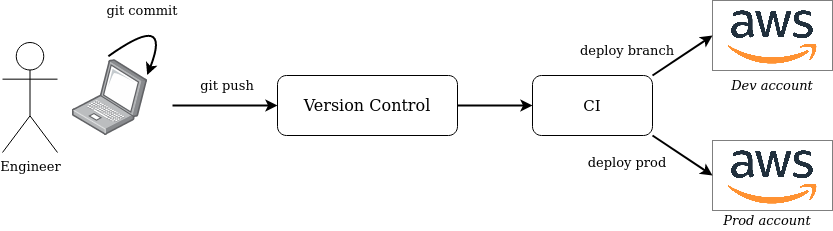

Suppose we have a fairly standard and basic infrastructure as code deployment workflow:

- Engineers use Git and commit their work locally.

- Then they push it to a branch in a centralized version control system (VCS) such as GitHub or Bitbucket.

- A pipeline deploys (automatically or not) the infrastructure described in their branch into a development environment, such as a test AWS account (e.g. using Jenkins or GitLab CI).

- When the engineer is satisfied with their changes, they merge their code to the main branch.

- To deploy in production, a pipeline deploys the infrastructure described in the main VCS branch to the production environment.

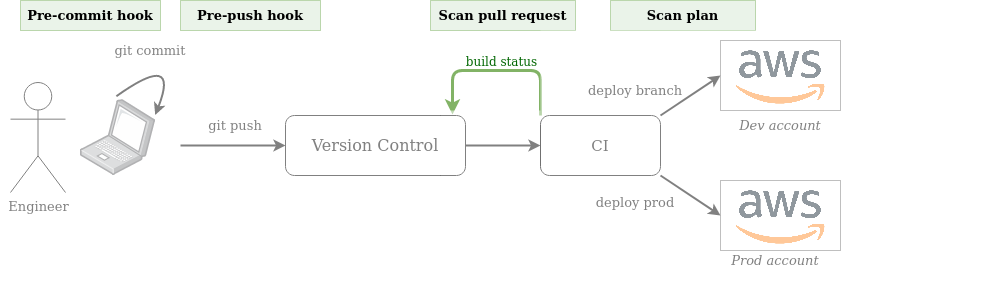

Where can we integrate into this pipeline? Everywhere!

First, we allow engineers to install a pre-commit and/or pre-push hook (manually or using pre-commit) so that their code is transparently scanned before every commit and/or push. This creates a very short feedback loop even before the code reaches VCS. As we want the scan to be as fast as possible, we prefer scanning the HCL code here, not the Terraform plan. Example pre-commit hook with tfsec:
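A minimal sketch of such a hook, written here in Python and assuming `tfsec` is installed and on the `PATH`. It relies on tfsec exiting with a non-zero status when it finds issues. The `runner` parameter is only there so the function can be exercised without tfsec installed.

```python
#!/usr/bin/env python3
import subprocess
import sys


def run_tfsec(directory=".", runner=subprocess.run):
    """Run tfsec against a directory and return its exit code."""
    completed = runner(["tfsec", directory])
    return completed.returncode


def main(runner=subprocess.run):
    """Scan the current directory; a non-zero result aborts the commit."""
    exit_code = run_tfsec(".", runner)
    if exit_code != 0:
        print("tfsec reported issues, commit aborted.", file=sys.stderr)
    return exit_code
```

To use it as a hook, save it as `.git/hooks/pre-commit`, make it executable, and add `sys.exit(main())` as the last line. Git aborts the commit whenever the hook exits with a non-zero status.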

Then, we can run a CI job on every pull request posting back the scan results as a PR comment / PR build check. For example, we can implement this with a GitHub action, or directly within a GitLab CI pipeline that runs against merge requests.

Finally, before deploying to AWS (to either development or production), if our static analysis tool supports it, we generate our Terraform plan and scan it.

# Generate plan
$ terraform plan -out=plan.tfplan
$ terraform show -json plan.tfplan > plan.json
# Scan plan.json here
# Deploy
$ terraform apply plan.tfplan

Note that it makes sense to run the scan for each environment, as Terraform resources are likely configured differently between environments (e.g. from environment variables or input variables).
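The plan, scan, and apply sequence can be wrapped in a small gate that only applies the plan when the scan comes back clean. The following Python sketch is one possible shape for it. `plan_scan_apply` and its `scan` callback are hypothetical names, and the per-environment variable file is an assumption about how your environments are parameterized.

```python
import json
import subprocess


def plan_scan_apply(scan, var_file=None, runner=subprocess.run):
    """Plan, scan the JSON plan, and apply only if the scan finds nothing.

    `scan` is any callable that takes the parsed plan and returns a list
    of findings. An empty list means the plan is safe to apply.
    """
    plan_cmd = ["terraform", "plan", "-out=plan.tfplan"]
    if var_file:
        # One variable file per environment, e.g. dev.tfvars or prod.tfvars
        plan_cmd.append(f"-var-file={var_file}")
    runner(plan_cmd, check=True)

    shown = runner(
        ["terraform", "show", "-json", "plan.tfplan"],
        check=True,
        capture_output=True,
        text=True,
    )
    findings = scan(json.loads(shown.stdout))
    if findings:
        # Do not deploy; let the caller report the findings and fail the job
        return findings

    runner(["terraform", "apply", "plan.tfplan"], check=True)
    return []
```

Calling it once per environment, each time with that environment's variables, ensures the scan sees the values that will actually be deployed there.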

Integrating at these stages gives us the nice property of empowering engineers to get blazing fast feedback if they want to while ensuring that no vulnerable Terraform code is deployed to AWS.

Additional Considerations

Custom Checks Have Bugs Too!

As security engineers, we are responsible for ensuring that the checks we write do not generate noise and that they limit false positives. To help with this, I find it very useful to treat rules like software and therefore have them under version control, along with a generous set of unit tests.

Implementation will depend on the tool you choose. If you use checkov, for instance, custom checks are implemented in Python:

from checkov.common.models.enums import CheckResult, CheckCategories
from checkov.terraform.checks.resource.base_resource_check import BaseResourceCheck


class EC2SecurityGroupOpenFromAnywhere(BaseResourceCheck):
    def __init__(self):
        name = "Ensure security groups are not open to any IP address"
        id = "CUSTOM1"
        supported_resources = ['aws_security_group']
        categories = [CheckCategories.NETWORKING]
        super().__init__(name=name, id=id, categories=categories, supported_resources=supported_resources)

    def scan_resource_conf(self, conf):
        ingress_rules = conf.get('ingress', [])

        # No ingress rule
        if len(ingress_rules) == 0 or len(ingress_rules[0]) == 0:
            return CheckResult.PASSED

        for ingress_rule in ingress_rules:
            allowed_cidrs = ingress_rule.get('cidr_blocks', [])
            # The list of CIDRs is nested one level deep; unwrap it
            if len(allowed_cidrs) > 0:
                allowed_cidrs = allowed_cidrs[0]

            for allowed_cidr in allowed_cidrs:
                if allowed_cidr in ["0.0.0.0/0", "::/0"]:
                    return CheckResult.FAILED

        return CheckResult.PASSED

This allows you to easily write associated unit tests using pytest or unittest, checking that good inputs pass the checks and bad ones don’t. If you use Regula, you can use Fregot to write tests in the same language (Rego) as the checks.
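As a sketch of what such tests can look like, the example below mirrors the logic of the check above in a plain function, so that it runs without checkov installed. In a real project you would call `scan_resource_conf` on the check class instead. `is_open_to_world` is a hypothetical helper, and the sample inputs assume the same nesting of `cidr_blocks` that the check above unwraps.

```python
import unittest

OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def is_open_to_world(conf):
    """Return True if any ingress rule allows traffic from any IP address."""
    for rule in conf.get("ingress", []):
        cidrs = rule.get("cidr_blocks", [])
        # Same unwrapping as in the check: the CIDR list is nested one level deep
        if cidrs:
            cidrs = cidrs[0]
        if any(cidr in OPEN_CIDRS for cidr in cidrs):
            return True
    return False


class TestOpenSecurityGroup(unittest.TestCase):
    def test_open_to_internet_is_flagged(self):
        conf = {"ingress": [{"cidr_blocks": [["0.0.0.0/0"]]}]}
        self.assertTrue(is_open_to_world(conf))

    def test_internal_range_passes(self):
        conf = {"ingress": [{"cidr_blocks": [["10.0.0.0/8"]]}]}
        self.assertFalse(is_open_to_world(conf))

    def test_no_ingress_rule_passes(self):
        self.assertFalse(is_open_to_world({}))
```

Saved in a test file, this can be run with `python -m unittest`. Each new false positive or missed case found in production then becomes one more test.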

Select High-Value Checks

Some tools ship with hundreds of default checks. Although this saves a lot of time compared to writing your own checks, it also has the potential to generate a lot of noise. For instance, while “CKV_AWS_50: X-ray tracing is enabled for Lambda” or “CKV_AWS_18: Ensure the S3 bucket has access logging enabled” are likely good advice, it’s arguable whether they should break a build. Focus on high-value checks such as identification of publicly exposed resources, over-privileged IAM policies, and unencrypted resources.
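One way to apply this is to split findings into those that break the build and those that are only reported. The Python sketch below assumes findings shaped like dictionaries with a `check_id` key, similar to the entries of a scanner's JSON output. The content of `BLOCKING_CHECKS` is an illustrative choice, not a recommendation.

```python
# Illustrative selection: only these check IDs are allowed to break a build
BLOCKING_CHECKS = {"CKV_AWS_20", "CUSTOM_S3_ENCRYPTED"}


def split_findings(failed_checks, blocking=BLOCKING_CHECKS):
    """Separate findings that should break the build from advisory ones."""
    blocking_findings = [f for f in failed_checks if f["check_id"] in blocking]
    advisory_findings = [f for f in failed_checks if f["check_id"] not in blocking]
    return blocking_findings, advisory_findings


# Hypothetical scan output
findings = [
    {"check_id": "CKV_AWS_20", "resource": "aws_s3_bucket.static"},
    {"check_id": "CKV_AWS_18", "resource": "aws_s3_bucket.static"},
    {"check_id": "CKV_AWS_50", "resource": "aws_lambda_function.api"},
]

must_fix, nice_to_have = split_findings(findings)
print(len(must_fix), len(nice_to_have))  # 1 2
```

The CI job would then fail only when `must_fix` is non-empty, while still surfacing the advisory findings in the PR comment.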

Centralize Scanning Logic

If you are running enough infrastructure as code, it’s hopefully split into multiple modules and repositories. This implies the need to integrate the static analysis into several pipelines and repositories. As a result, it makes sense to centralize the scanning logic into a dedicated repository that all modules can reuse. This way, you can easily update it when necessary, such as changing the list of enabled checks or the commands needed to perform a scan.

Regularly Scan Your Code for Checks Suppression Annotations

As mentioned in our initial evaluation criteria, some tools allow us to mark Terraform resources with an inline comment so that specific checks don’t apply to them. For instance, with checkov:

resource "aws_s3_bucket" "bucket" {
  #checkov:skip=CKV_AWS_20:This bucket is intentionally publicly readable as it serves static assets
  bucket = "static.mycorp.com"
  acl    = "public-read"
}

Although suppression features are useful in such cases, the presence of too many suppression comments often indicates low quality or inadequate rules that generate false positives. It is therefore useful to regularly search for suppression comments in VCS to see if we can improve our rules.
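A simple way to do this is to extract the suppression comments and count them per check. The following Python sketch does so for checkov-style annotations. The regular expression is an assumption based on the comment format shown above, and the function names are hypothetical.

```python
import re
from collections import Counter

# Matches e.g. "#checkov:skip=CKV_AWS_20:Some justification"
SKIP_PATTERN = re.compile(
    r"#\s*checkov:skip=(?P<check>[A-Za-z0-9_]+)(?::(?P<reason>.*))?"
)


def find_suppressions(text):
    """Return (check_id, reason) pairs for every suppression comment in text."""
    return [
        (match.group("check"), (match.group("reason") or "").strip())
        for match in SKIP_PATTERN.finditer(text)
    ]


def count_suppressions(texts):
    """Count how often each check is suppressed across several files' contents."""
    counts = Counter()
    for text in texts:
        counts.update(check for check, _ in find_suppressions(text))
    return counts


sample = '''
resource "aws_s3_bucket" "bucket" {
  #checkov:skip=CKV_AWS_20:This bucket is intentionally publicly readable
  bucket = "static.mycorp.com"
}
'''

print(find_suppressions(sample))
# [('CKV_AWS_20', 'This bucket is intentionally publicly readable')]
```

Feeding it the contents of every `.tf` file in your repositories gives a ranking of the most frequently suppressed checks, which are good candidates for review.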

Limitations and Complementary Approaches

Scanning Terraform code or plans is a great way to catch security issues early. That being said, it’s not perfect. It is possible that your cloud infrastructure is not fully deployed using Terraform. Even if it is, people using the AWS Console or AWS CLI directly might create configuration drift, causing reality to differ from what your Terraform code describes.

A complementary approach is to regularly scan the resources already running in your cloud environment for security issues. Just as when comparing SAST and DAST for traditional software, this approach is slower but offers better coverage and visibility. Some open-source tools like Cloud Custodian, Prowler, or ScoutSuite can help with that; the difficulty lies in running them across multiple accounts and regions.

Another challenge is the duplication of security checks between static Terraform code analysis and runtime checks. Say we want to identify public S3 buckets — we shouldn’t have to write two rules for this, one for static analysis and one for the runtime scan! Ideally, we would like to write only one rule and use it in both types of scans.

Unfortunately, I’m not aware of any open-source tools with this capability, nor am I sure that any commercial platform supports it. Several vendors focus on runtime scanning and provide their own Terraform static analysis solution, but without the possibility to reuse checks. This is the case for Aqua Wave, Accurics, and Bridgecrew. Fugue uses the same language (Rego) for its commercial platform as for Regula, but there are still some differences that make it challenging to reuse (happy to elaborate if that’s of interest to anyone).

Palo Alto’s Prisma Cloud supports both runtime and static Terraform code analysis, but an industry peer confirmed they use different scan engines and pipelines as well. Whoops, did I just say something negative about a Palo Alto product? Hopefully they won’t sue me for that.

More generic projects like Open Policy Agent could help achieve this, but it seems cumbersome without a proper wrapper. Another approach might be to deploy Terraform resources against a mock of the AWS API and then use runtime checks as if it were the real one. Although it might be a viable solution, I am concerned that it would make Terraform code scans noticeably slower, since it is no longer a static analysis.

Conclusion

There are quite a few projects out there for Terraform static code analysis. Hopefully, this post provided some guidance and information about the current landscape. An interesting talk that I recommend on the topic is Shifting Terraform Configuration Security Left by Gareth Rushgrove @ Snyk.

Let’s continue the discussion on Twitter! Especially, I’m highly interested in hearing about any project, product, or approach that allows the reuse of checks between static and runtime scanning, as well as your own experience with Terraform static analysis tools.

Finally, a big thank you to Clint Gibler at tl;dr sec for helping make this blog post better. Take a look at his newsletter and talks, especially “How to 10x Your Security” which I highly enjoyed. Thank you also to noobintheshell for the review.

And thank you for reading!
